
The generative AI frenzy is in full swing, fueled by massive large language models (LLMs) boasting up to trillions of parameters. But as the dust settles, a new reality is emerging: smaller, more efficient AI models are poised to dominate the landscape, especially at the edge. This is the core message I took away from the Qualcomm AI Day for analysts & media in San Diego a couple of weeks ago. First, I was a little surprised by how much AI work Qualcomm is doing. Second, when it comes to decentralizing AI model inference, the company looks poised to corner the market.

The following are my top five takeaways from the Qualcomm AI Day event.

Takeaway 1: Smaller, Smarter Models Win

Let's be clear: massive LLMs are impressive feats of engineering, but they're not always practical. Their sheer size makes them computationally expensive and slow to respond, which hinders real-time applications. Just because those models can do everything doesn’t mean they are the most efficient solution for your needs. This is where Qualcomm, along with a few other vendors, is pitching the idea of "right-sized" models.

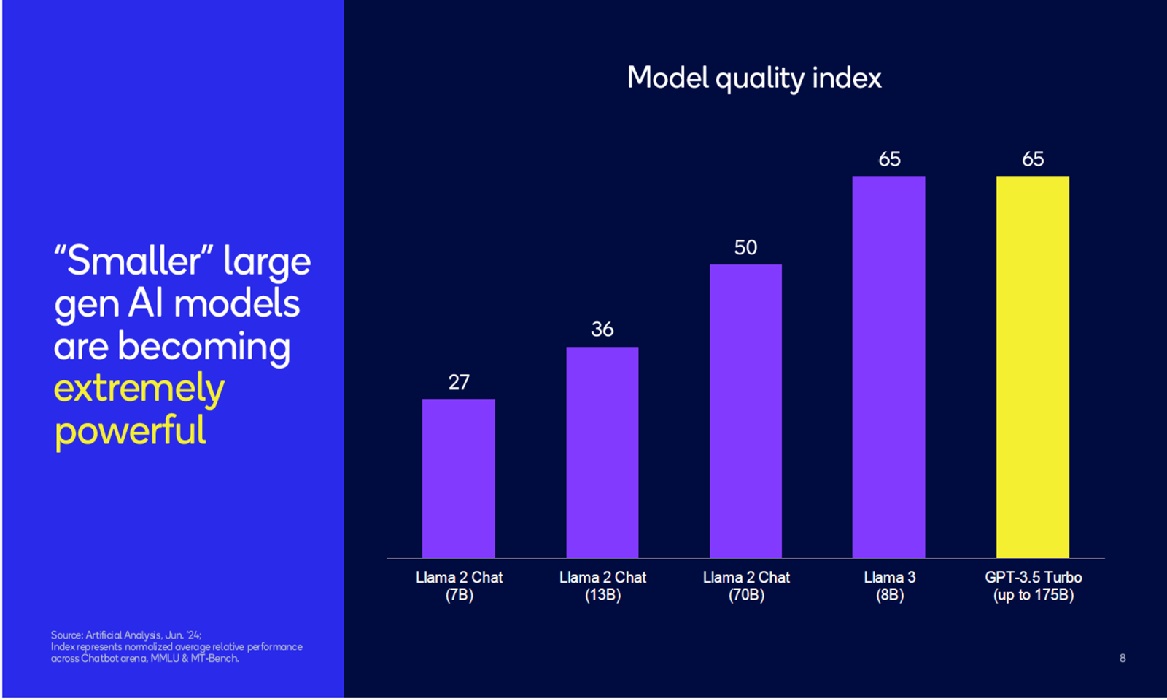

By employing techniques like quantization, distillation, and specialized training, vendors can create models that are leaner, faster, and optimized for specific tasks. Qualcomm's showcase of Llama 3 is a prime example: it demonstrated an 8-billion-parameter model delivering accuracy and quality comparable to the 175-billion-parameter GPT-3.5 Turbo. The model quality index showed similar results for both models. In addition, an efficient architecture that processes the right models, in the right place, at the right time will always win over bloated large models that may become far more expensive to run over time.
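To make the quantization idea concrete, here is a minimal sketch of symmetric int8 quantization in plain Python. It is an illustration under my own assumptions, not Qualcomm's method: the function names (`quantize_int8`, `dequantize`, `weight_footprint_gb`) and the sample weights are made up for this example, and production toolchains use more sophisticated per-channel and calibration-based schemes.

```python
# Illustrative sketch only: symmetric per-tensor int8 quantization.
# All names and sample values here are hypothetical, not from any vendor SDK.

def quantize_int8(weights):
    """Map float weights to integer codes in [-127, 127] plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]

def weight_footprint_gb(num_params, bits_per_weight):
    """Approximate storage for the weights alone, in GB (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

weights = [0.42, -1.27, 0.03, 0.88, -0.51]  # made-up example values
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Rounding error per weight is bounded by half the quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 codes:", codes)
print("max round-trip error:", max_error)
print("8B params at 16-bit:", weight_footprint_gb(8e9, 16), "GB")
print("8B params at 4-bit:", weight_footprint_gb(8e9, 4), "GB")
```

The footprint helper shows why this matters for on-device inference: the weights of an 8-billion-parameter model take about 16 GB at 16 bits per weight but about 4 GB at 4 bits, which is the difference between not fitting and fitting in the memory of many consumer devices. The trade-off is the small rounding error that `max_error` measures.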

The takeaway for CXOs is clear: don't get caught up in the race for the biggest model. Prioritize efficiency and performance to unlock the true potential of generative AI.

Image Source: Qualcomm

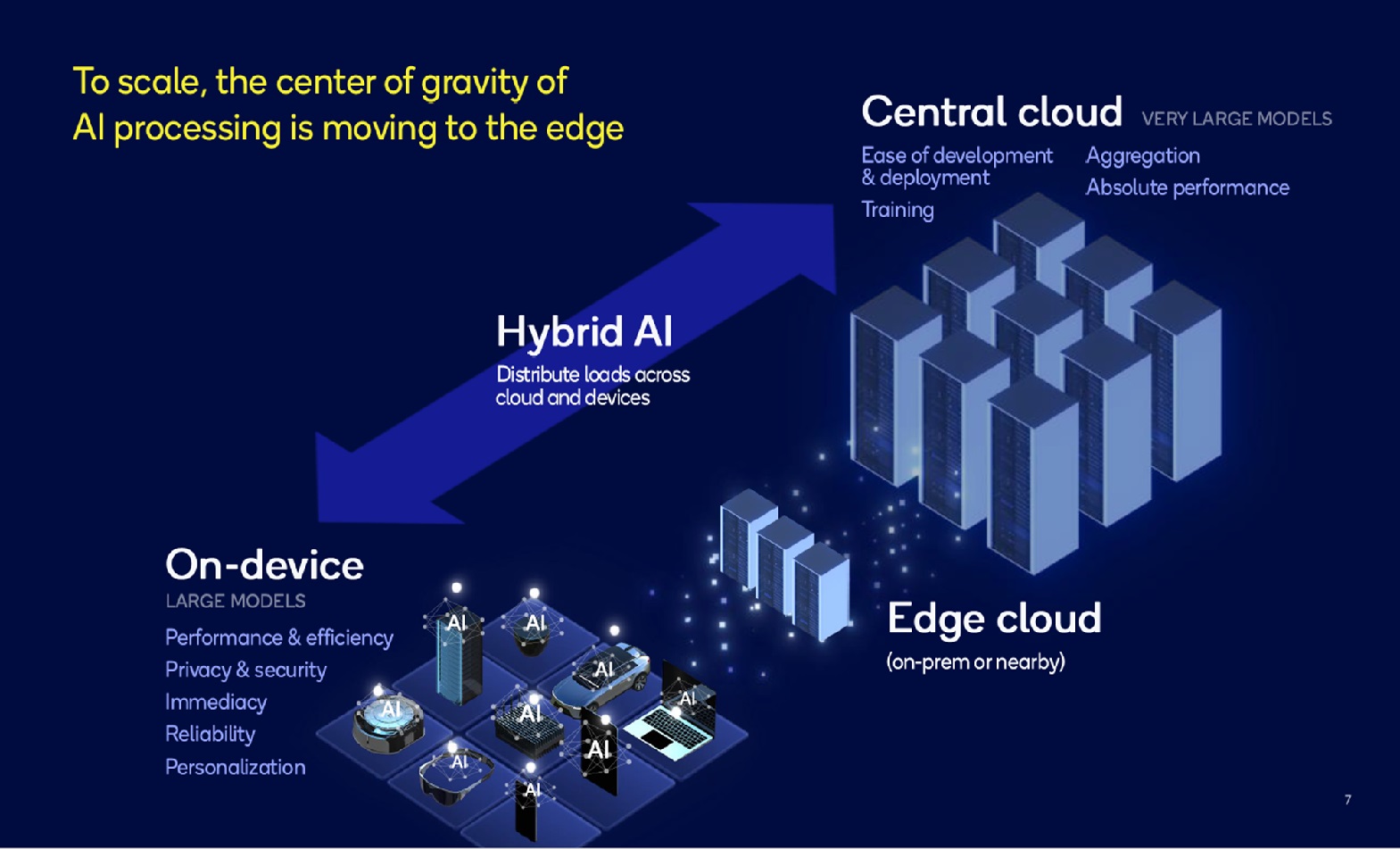

Takeaway 2: The Edge Is Faster & More Scalable

The future of AI lies at the edge, not solely in the cloud. On-device AI inference eliminates the need to send data to the cloud, reducing latency and enabling real-time decision-making. This shift is critical for applications that demand rapid responses, such as autonomous vehicles, industrial automation, and smart healthcare devices. CXOs should view the edge as a strategic advantage, enabling scalable AI deployments without the limitations of cloud connectivity.

Image Source: Qualcomm

Takeaway 3: On-Device AI Is Private & Personalized

Smaller, personalized AI models running on-device offer a compelling advantage: data privacy. With cloud-hosted LLMs, data is often sent off the device for processing, raising concerns about security and control. On-device AI keeps sensitive information local, giving users personalized experiences while mitigating those risks. As CXOs increasingly prioritize data privacy and security, on-device AI becomes a critical differentiator.

Takeaway 4: Qualcomm's AI Ecosystem Is More Than Chips

Qualcomm's foray into the AI space extends beyond its powerful Snapdragon chips. Its AI Stack and Model Zoo offer a comprehensive suite of tools and pre-optimized models, simplifying the development and deployment of on-device AI. This is a game-changer for organizations looking to harness AI without building everything from scratch. Qualcomm's focus on model optimization and validation further solidifies its position as a key player in the on-device AI ecosystem.

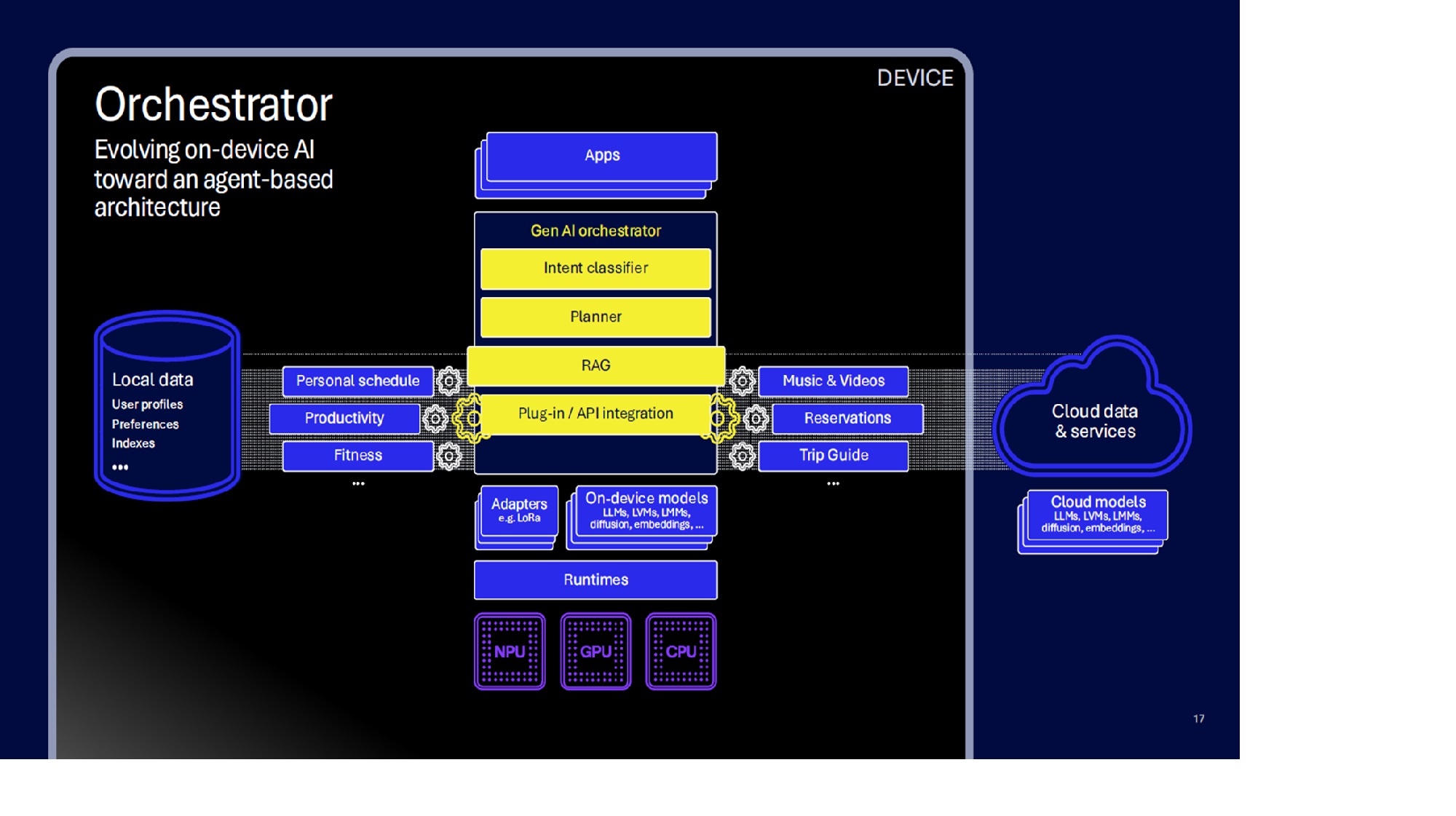

Takeaway 5: Qualcomm's AI Hub Accelerates On-Device AI Deployment

Qualcomm's AI Hub takes on-device AI development to the next level. It provides a streamlined platform for quantizing, optimizing, and validating AI models for a given edge environment. You can select from a variety of pre-optimized models or bring your own, choose your target platform and runtime, and then download and test the entire environment on the device. Together, these steps drastically reduce development time and complexity. Additionally, the Orchestrator module enables on-device AI to evolve toward agent-based architectures, a key trend in the industry.

Image Source: Qualcomm

My Final Thoughts

While NVIDIA reigns supreme in the cloud AI chip market, the on-device AI landscape is ripe for disruption. Qualcomm's Snapdragon processors, coupled with its expanding AI ecosystem, position the company as a formidable contender. Its ability to deliver powerful, efficient AI on consumer devices could reshape the industry.

As CXOs chart their AI strategies, they must look beyond the hype of massive models and embrace the potential of smaller, more efficient AI at the edge. This is where the true transformative power of generative AI will be unleashed.