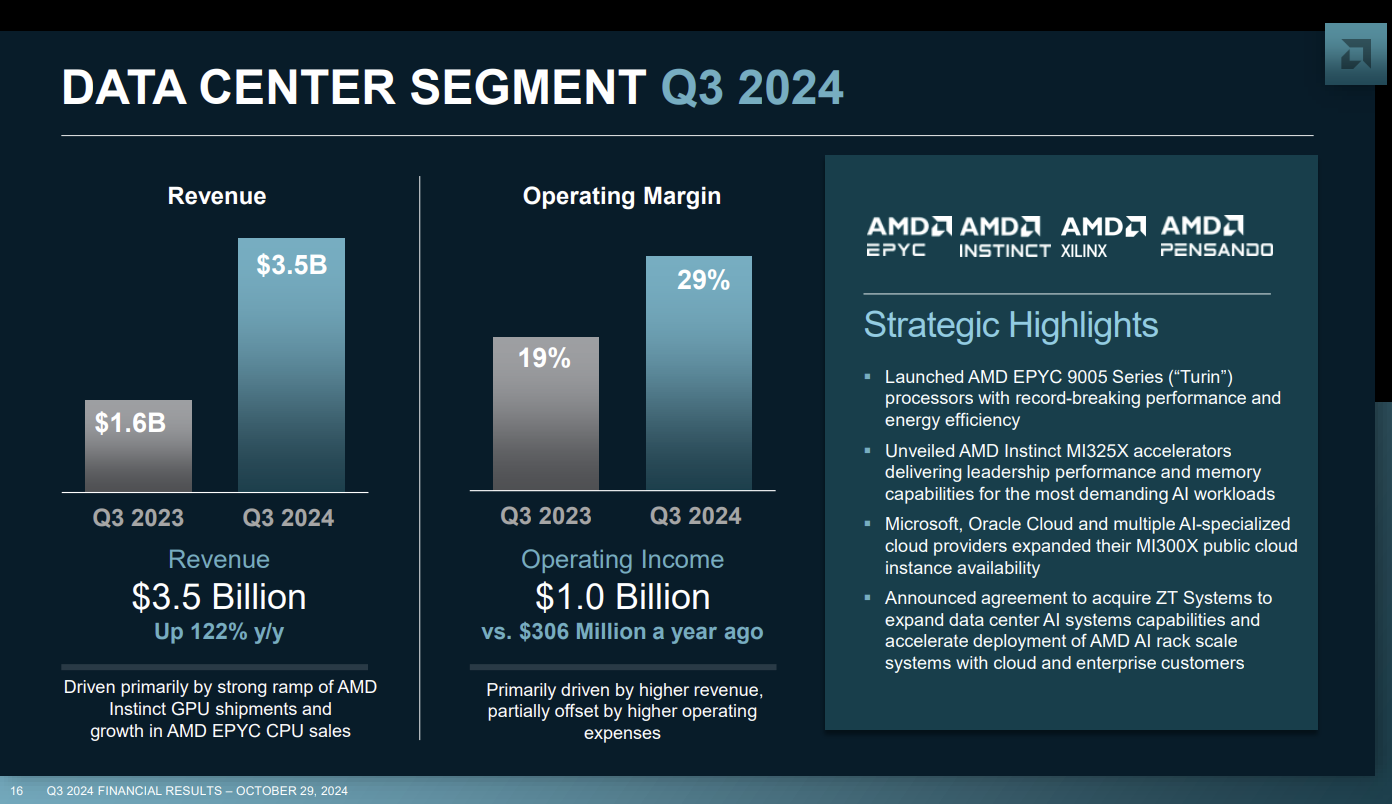

AMD's revenue continues to surge on the strength of its data center unit, which posted sales growth of 122% from a year ago.

The company reported third quarter net income of $771 million, or 47 cents a share, on revenue of $6.8 billion. Non-GAAP earnings were 92 cents a share.

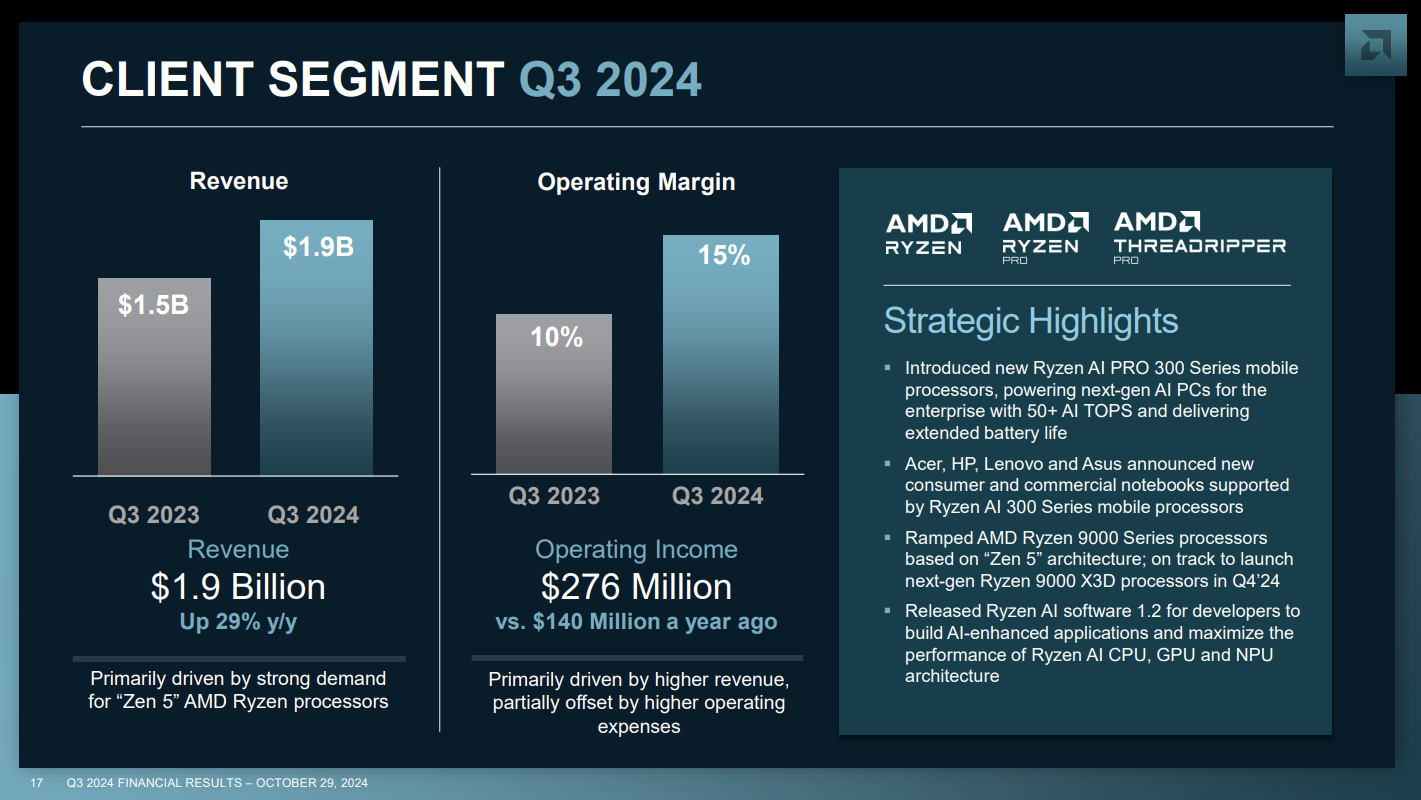

AMD CEO Dr. Lisa Su said: "Record revenue was led by higher sales of EPYC and Instinct data center products and robust demand for our Ryzen PC processors."

- Data center revenue was $3.5 billion, driven by shipments of AMD Instinct GPUs and AMD EPYC CPUs.

- PC revenue was $1.9 billion, up 29% from a year ago.

- Embedded unit revenue was $927 million, down 25% from a year ago, and gaming revenue fell 69% from a year ago to $462 million.

As for the outlook, AMD projected fourth quarter revenue of $7.5 billion, give or take $300 million.

On a conference call, Su said:

- "Data Center GPU revenue ramped as MI300X adoption expanded with cloud, OEM and AI customers. Microsoft and Meta expanded their use of MI300X accelerators to power their internal workloads in the quarter. Microsoft is now using MI300X broadly for multiple Copilot services powered by the family of GPT-4 models."

- "Development on our MI400 series based on the CDNA Next architecture is also progressing very well towards a 2026 launch. We have built significant momentum across our data center AI business with deployments increasing across an expanding set of cloud, enterprise and AI customers. As a result, we now expect Data Center GPU revenue to exceed $5 billion in 2024, up from the $4.5 billion we guided in July and our expectation of $2 billion when we started the year."

- "In the Data Center alone, we expect the AI accelerator TAM will grow at more than 60% annually to $500 billion in 2028. To put that in context, this is roughly equivalent to annual sales for the entire semiconductor industry in 2023."

- "We feel very good about the market from everything that we see, talking to customers, there's significant investment in trying to build out the infrastructure required across all of the AI workloads."