Anthropic has upgraded Claude 3.5 Sonnet with the ability to use a computer: looking at the screen, moving the cursor, clicking and typing. The company also launched Claude 3.5 Haiku.

As large language model (LLM) vendors keep upping the training ante, Anthropic continues to think through features for collaboration and now computer use.


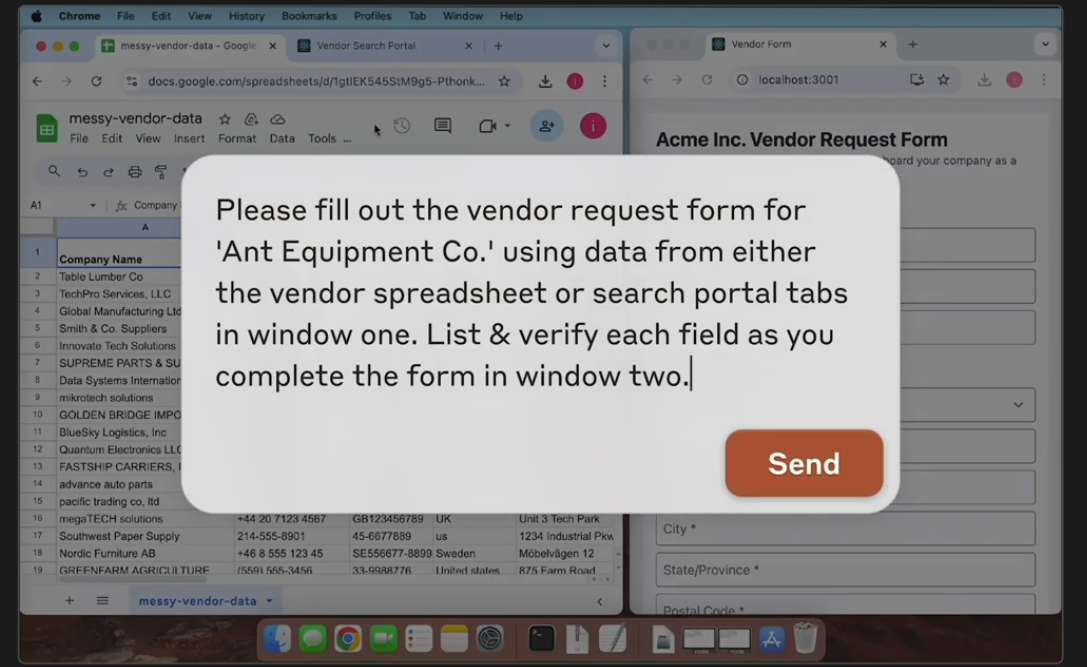

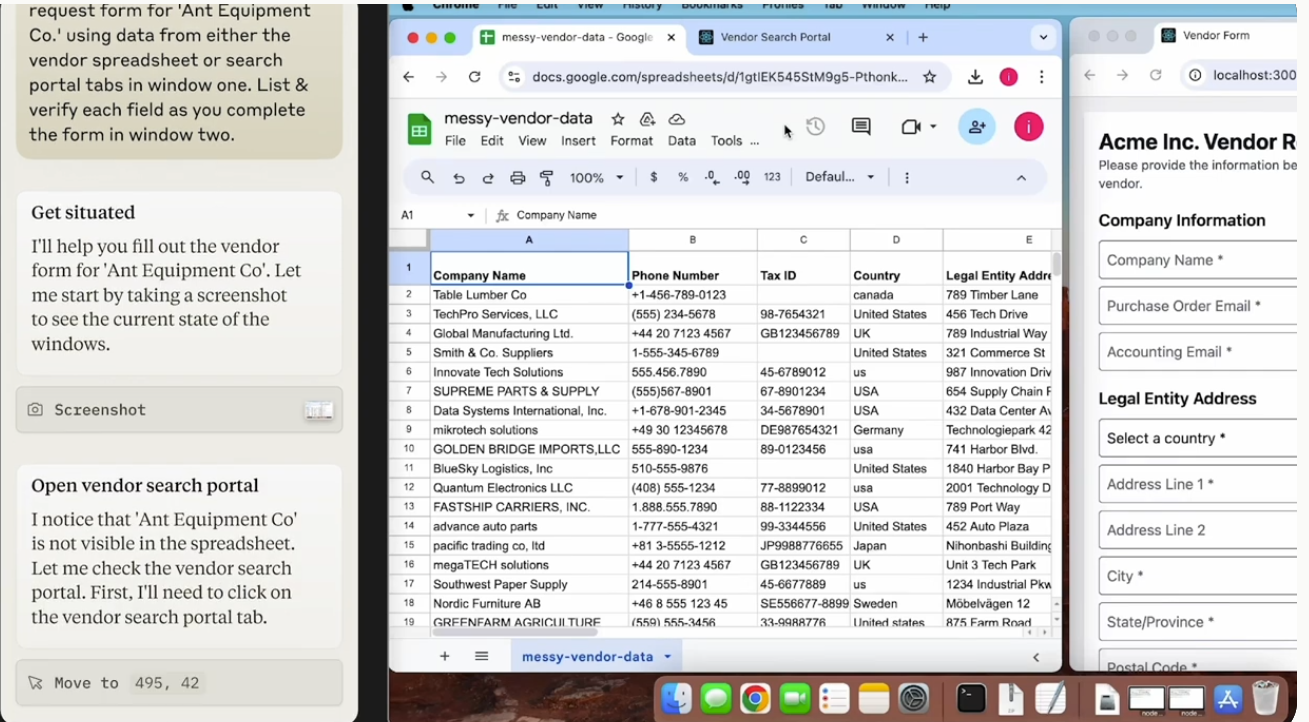

The company said computer use on Claude 3.5 Sonnet is available in public beta via its API. Developers can direct Claude to operate a computer and take on tedious work such as filling out forms and combing through files. According to Anthropic, Claude 3.5 Sonnet can use any application on the computer it is given access to.
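The article doesn't show what a computer-use request looks like. As a minimal sketch, assuming the identifiers Anthropic published for the October 2024 beta (model `claude-3-5-sonnet-20241022`, tool type `computer_20241022`, beta header `computer-use-2024-10-22`), the Python below builds the JSON body and headers for a Messages API call that enables the computer tool. It doesn't send anything; the helper function names are hypothetical, and the identifiers may have changed since the beta, so check current documentation before relying on them.

```python
import json

# Assumed identifiers from Anthropic's October 2024 public beta; verify before use.
MODEL = "claude-3-5-sonnet-20241022"
COMPUTER_TOOL_TYPE = "computer_20241022"
BETA_FLAG = "computer-use-2024-10-22"


def build_computer_use_request(prompt: str, width: int = 1024, height: int = 768) -> dict:
    """Hypothetical helper: JSON body for a Messages API call with the computer tool enabled."""
    return {
        "model": MODEL,
        "max_tokens": 1024,
        "tools": [
            {
                "type": COMPUTER_TOOL_TYPE,
                "name": "computer",
                # Screen size Claude should assume when choosing pixel coordinates.
                "display_width_px": width,
                "display_height_px": height,
            }
        ],
        "messages": [{"role": "user", "content": prompt}],
    }


def build_headers(api_key: str) -> dict:
    """Hypothetical helper: HTTP headers; anthropic-beta opts the call in to the beta."""
    return {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "anthropic-beta": BETA_FLAG,
        "content-type": "application/json",
    }


if __name__ == "__main__":
    body = build_computer_use_request("Fill out the expense form in the open browser tab.")
    print(json.dumps(body, indent=2))
```

Note that the API itself never touches the machine: Claude replies with tool-use requests naming an action (take a screenshot, move the mouse, type), and the developer's own code carries out that action and sends the result back.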

Anthropic noted that computer use is still experimental and error-prone, but it expects rapid improvement. Asana, Canva, Cognition, DoorDash, Replit and The Browser Company are among the companies using Claude 3.5 Sonnet with computer use. The upgraded model is available through Anthropic's API, Amazon Bedrock and Google Cloud Vertex AI.

In a blog post, Anthropic outlined some of the research that went into Claude 3.5 Sonnet's computer use features. Among the takeaways:

- Claude looks at screenshots of what's visible to the user, then counts how many pixels vertically or horizontally it needs to move the cursor to click in the right place.

- Anthropic spent a lot of time training Claude to count pixels. Without this skill, the model struggles to give mouse commands.

- Claude was able to turn a user's written prompt into a sequence of steps and then take action.

- The company said Claude isn't anywhere near human-level skill on the computer, but expects the gap to close.
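The pixel-counting step boils down to arithmetic on screen coordinates. The sketch below is illustrative only: Claude works this out internally from the screenshot rather than from a known bounding box, so the `Box` input and all helper names are hypothetical. Assuming we already know where a form field sits in a screenshot, it computes how far the cursor must travel and expresses "click the field, then type" as a sequence of action dictionaries shaped like those in Anthropic's October 2024 computer-use beta (`mouse_move` with a `coordinate`, `left_click`, `type` with `text`).

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical input: a UI element's bounding box in screenshot pixels."""
    left: int
    top: int
    width: int
    height: int

    def center(self) -> tuple:
        # Aim for the middle of the element so a slightly-off click still lands on it.
        return (self.left + self.width // 2, self.top + self.height // 2)


def pixel_offset(cursor: tuple, target: Box) -> tuple:
    """Pixels to move: +x is right, -x is left, +y is down, -y is up."""
    cx, cy = target.center()
    return (cx - cursor[0], cy - cursor[1])


def click_and_type(target: Box, text: str) -> list:
    """Turn 'click this field, then type' into an ordered list of computer-use style actions."""
    x, y = target.center()
    return [
        {"action": "mouse_move", "coordinate": [x, y]},
        {"action": "left_click"},  # clicks wherever the cursor now is
        {"action": "type", "text": text},
    ]


if __name__ == "__main__":
    name_field = Box(left=200, top=150, width=300, height=40)
    print(pixel_offset((0, 0), name_field))  # (350, 170)
    for step in click_and_type(name_field, "Jane Doe"):
        print(step)
```

Splitting a task into small, explicit steps like these mirrors how Anthropic describes Claude turning a written prompt into a sequence of actions, though the real planning happens inside the model.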

Anthropic also outlined safety concerns. The company said:

“As with any AI capability, there’s also the potential for users to intentionally misuse Claude’s computer skills. Our teams have developed classifiers and other methods to flag and mitigate these kinds of abuses.

“While computer use is not sufficiently advanced or capable of operating at a scale that would present heightened risks relative to existing capabilities, we’ve put in place measures to monitor when Claude is asked to engage in election-related activity, as well as systems for nudging Claude away from activities like generating and posting content on social media, registering web domains, or interacting with government websites. We will continuously evaluate and iterate these safety measures to balance Claude’s capabilities with responsible use during the public beta.”