Nvidia has released NVLM 1.0, an open-source family of multimodal large language models whose flagship is the 72-billion-parameter NVLM-D-72B.

The effort, detailed in a research paper, means Nvidia is now also championing frontier open-source LLMs. Until now, Meta and its Llama family had led the open-source model wave.

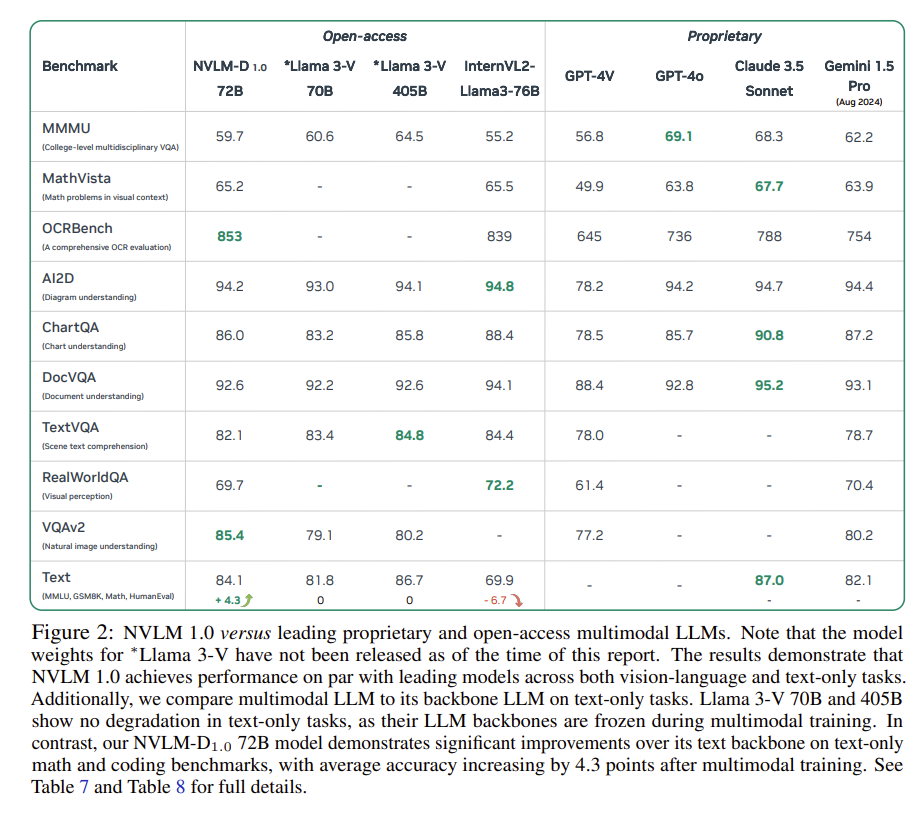

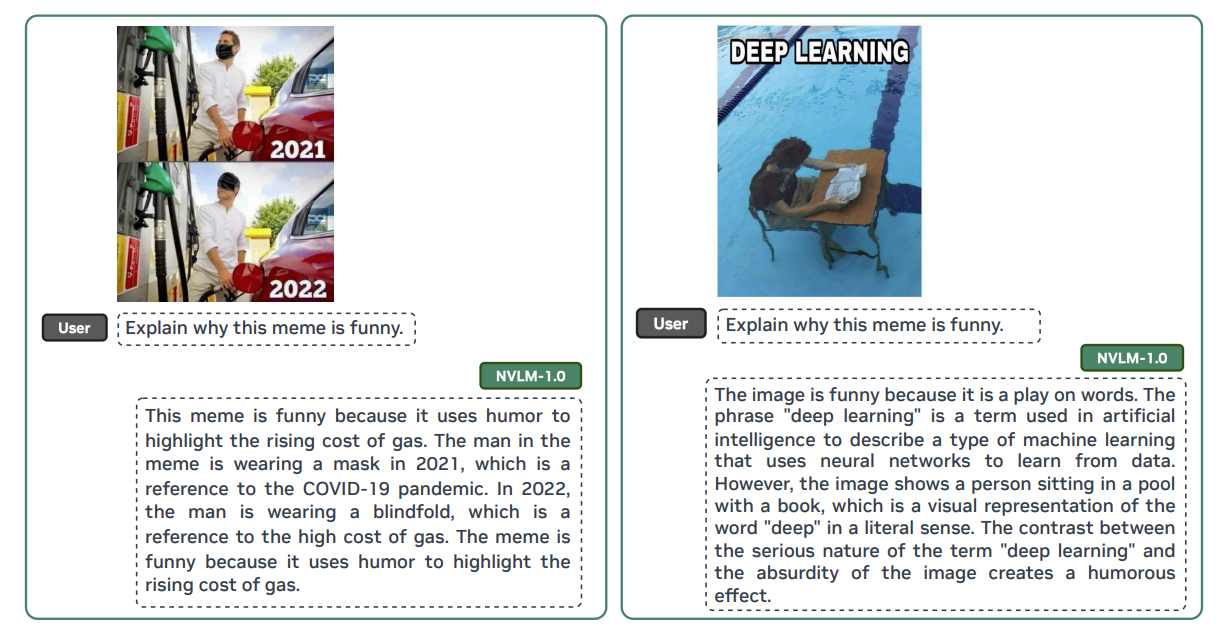

According to Nvidia researchers, NVLM 1.0 improves on the text-only performance of its underlying LLM after multimodal training. Nvidia says the models' architecture improves training efficiency as well as multimodal reasoning.

Nvidia also released the model weights for NVLM 1.0 and plans to open-source the code. The move is notable because developers of proprietary models typically don't release their weights.

It remains to be seen whether other LLM giants will follow suit. Regardless, NVLM 1.0 will let smaller enterprises and researchers build on Nvidia's research. One thing is certain: LLM innovation is picking up pace.

More on Nvidia:

- Nvidia's uncanny knack for staying ahead

- Nvidia highlights algorithmic research as it moves to FP4

- Nvidia launches NIM Agent Blueprints, aims for more turnkey genAI use cases

- Nvidia shows H200 systems generally available, highlights Blackwell MLPerf results

- Nvidia outlines roadmap including Rubin GPU platform, new Arm-based CPU Vera