Privacy dies yet again

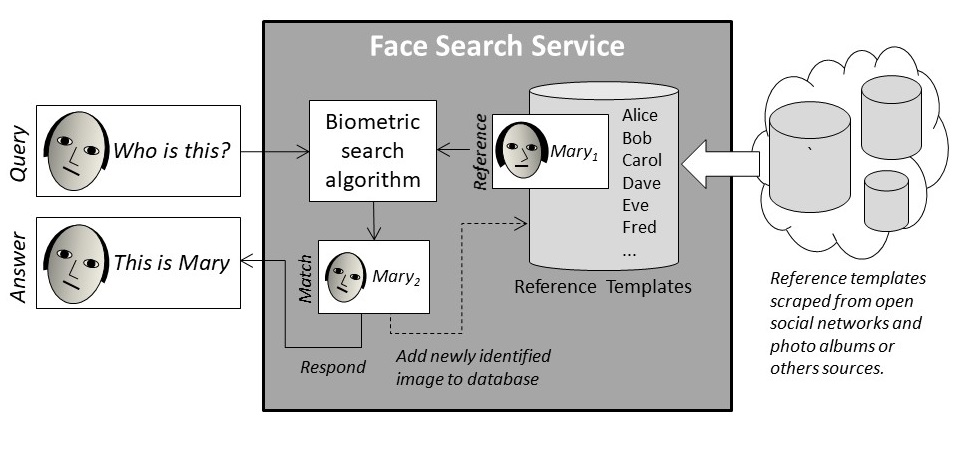

In another masterful piece of privacy reporting, Kashmir Hill in the New York Times has exposed the nefarious use of facial recognition technology by crime-fighting start-up Clearview. The business offers face searching and identification services, ostensibly to police forces, on the back of a strikingly large database of reference images extracted from the Internet. Clearview claims to have amassed three billion images -- far more than the typical mugshot library -- by scraping social media and other public sources.

It’s creepy, it offends our intuitive sense of ownership of our images, and the potential for abuse and unintended consequences is enormous. But how should we respond objectively and effectively to this development? Does facial recognition, as the NYT headline says, “end privacy as we know it”?

First let’s get one distraction out of the way. I would agree that anonymity is dead. But this is not the end of privacy (instead I feel it might be a new beginning).

If there’s nowhere to hide, then don’t

Why would I say anonymity is a distraction? Because it’s not the same thing as privacy. Anonymity is important at times, and essential in some lines of work, but it’s no universal answer for the general public. The simple reason is few of us could spend much of our time in hiding. We actually want to be known; we want others to have information about us, so long as that information is respected, kept in check, and not abused.

Privacy rules apply to the category Personal Data (aka Personal Information or sometimes Personally Identifiable Information) which is essentially any record that can reasonably be associated with a natural person. Privacy rules in general restrain the collection, use, disclosure, storage and ultimate disposal of Personal Data. The plain fact is that privacy is about protecting information precisely when it is not anonymous.

Broad-based privacy or “data protection” laws have been spreading steadily worldwide ever since 1980 when the OECD developed its foundational privacy principles (incidentally with the mission of facilitating cross border trade, not throttling it). Australia in 1988 was one of the world’s first countries to enact privacy law, and today is one among more than 130. The E.U.’s General Data Protection Regulation (GDPR) currently gets a lot of press but it’s basically an update to privacy codes which Europe has had for decades. Now the U.S. too is coming to embrace broad-based data privacy, with the California Consumer Privacy Act (CCPA) going live this month.

Daylight robbery

Long before the Clearview revelations, there had been calls for a moratorium on face recognition, and local government moves to ban the technology, for example in San Francisco. Prohibition is always controversial because it casts aspersions on a whole class of things and tends to blur the difference between a technology and the effects of how it’s used.

Instead of making a categorical judgement call on face recognition, there is a way to focus on its effects through a tried and tested legal lens, namely existing international privacy law. Not only can we moderate the excesses of commercial facial recognition without negotiating new regulations, we can re-invigorate privacy principles during this crucial period of American law reform.

I wonder why, for some people, privacy breaches signal the end of privacy. Officially, privacy is a universal human right, as is the right to own property. Does the existence of robbery mean the end of property rights? Hardly; in fact it’s quite the opposite! We all know there’s no such thing as perfect security, and that our legal rights transcend crime. We should appreciate that privacy too is never going to be perfect, and not become dispirited or cynical in the face of digital crime waves.

What is the real problem here?

Under most international data protection law, the way that Clearview AI has scraped its reference material from social media sites breaches the privacy of the people in those three billion images. We post pictures online for fun, not for the benefit of unknown technology companies and surveillance apparatus. To re-purpose personal images as raw material for a biometric search business is the first and foremost privacy problem in the Clearview case.

There has inevitably been commentary that images posted on the Internet have entered the “public domain”, or that the social media terms & conditions allow for this type of use. These are red herrings. It might be counter-intuitive, but conventional privacy laws by and large do not care if the source of Personal Data is public; the Collection Limitation Principle still applies. The words “public” and “private” don’t even feature in most information privacy law (which is why legislated privacy is often called “data protection”).

Data untouched by human hands

The second problem with Clearview’s activity is more subtle, but is a model for how conventional data protection can impact many more contemporary technologies. The crucial point is that technology-neutral privacy laws don’t care how Personal Data is collected.

If an item of Personal Data ends up in a database somewhere, then the law doesn’t care how it got there; it is considered to be collected. Data collection can be direct and human-mediated, as with questionnaires or web forms; it can be passive, as with computer audit logging; or it can be indirect yet deliberate, through the action of algorithms. If data results from an algorithm and populates a database, untouched by human hands, then according to privacy law it is still subject to the same Collection Limitation, Use & Disclosure Limitation and Openness principles as if it had been collected by a person.

The Australian Privacy Commissioner has developed specific advice about what they call Collection by Creation:

The concept of ‘collects’ applies broadly, and includes gathering, acquiring or obtaining personal information from any source and by any means. This includes collection by ‘creation’ which may occur when information is created with reference to, or generated from, other information the entity holds.

Data analytics can lead to the creation of personal information. For example, this can occur when an entity analyses a large variety of non-identifying information, and in the process of analysing the information it becomes identified or reasonably identifiable. Similarly, insights about an identified individual from data analytics may lead to the collection of new categories of personal information.

The outputs of a face search service are new records (or labels attached to existing records) which assert the identity of a person in an image. The assertions are new pieces of Personal Data, and their creation constitutes a collection. Therefore existing data protection laws apply to them.
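To make the point concrete, here is a minimal sketch of what “collection by creation” looks like inside a face search service. Everything in it is hypothetical and for illustration only: the record types, the `assert_identity` function, the similarity scores and the 0.9 threshold are my assumptions, not a description of how Clearview or any real product works. The thing to notice is that the matcher's output is a brand new record naming a person, and it comes into existence without anyone filling out a form.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ReferenceImage:
    """A scraped reference image with a name attached (hypothetical)."""
    image_id: str
    name: str


@dataclass(frozen=True)
class IdentityAssertion:
    """New Personal Data: a claim that the person in a probe image is `asserted_name`."""
    probe_id: str
    matched_image_id: str
    asserted_name: str
    score: float


def assert_identity(
    probe_id: str,
    scores: Dict[str, float],
    references: List[ReferenceImage],
    threshold: float = 0.9,  # assumed cut-off, purely illustrative
) -> Optional[IdentityAssertion]:
    """Return an assertion for the best-scoring reference at or above the threshold.

    `scores` maps reference image_id -> similarity score and stands in for
    the output of some face matching algorithm.
    """
    best: Optional[IdentityAssertion] = None
    for ref in references:
        score = scores.get(ref.image_id, 0.0)
        if score >= threshold and (best is None or score > best.score):
            best = IdentityAssertion(probe_id, ref.image_id, ref.name, score)
    return best


references = [
    ReferenceImage("ref-1", "Alice Example"),
    ReferenceImage("ref-2", "Bob Example"),
]

# The database of results: every entry here was created by the algorithm.
collected: List[IdentityAssertion] = []

result = assert_identity("probe-7", {"ref-1": 0.42, "ref-2": 0.97}, references)
if result is not None:
    collected.append(result)  # this is the moment of "collection by creation"
```

Each entry appended to `collected` is a record that can reasonably be associated with a natural person, which is exactly the kind of record that the collection, use and disclosure principles are written to cover.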

The use and disclosure of face matching is required by regular privacy law to be relevant, reasonable, proportionate and transparent. And thus the effect of facial recognition technology can be moderated by the sorts of laws most places already have, and which are now coming to the U.S. too.

It’s never too late for privacy

Technology incidentally does not outpace the law; rather it seems to me technologists have not yet caught up with what long-standing privacy laws actually say. Biometrics certainly create new ways to break the law but by no means do they supersede it.

This analysis can be generalised to other often troubling features of today’s digital landscape, to better protect consumers, and give privacy advocates some cause for regulatory optimism. For instance, when data-mining algorithms guess our retail preferences or, worse, estimate the state of our health without asking us any questions, then we rightly feel violated. Consumers should expect the law to protect them here, by putting limits on this type of powerful high tech wizardry, especially when it occurs behind their backs. The good news is that privacy law does just that.