Meta launched the Llama 4 family with open weights, and two of the models are available on AWS, Microsoft Azure and Google Cloud.

With the large language model (LLM) wars well underway, Meta announced Llama 4 on a Saturday so it could have the spotlight for a bit. LLMs are developing at such a rapid clip that one advance can be overshadowed by another in just hours.

According to Meta, the first installment of the Llama 4 suite is just the start. The company said more details about its AI vision will be outlined at LlamaCon on April 29.

Here's the Llama 4 family, which features natively multimodal AI, announced so far:

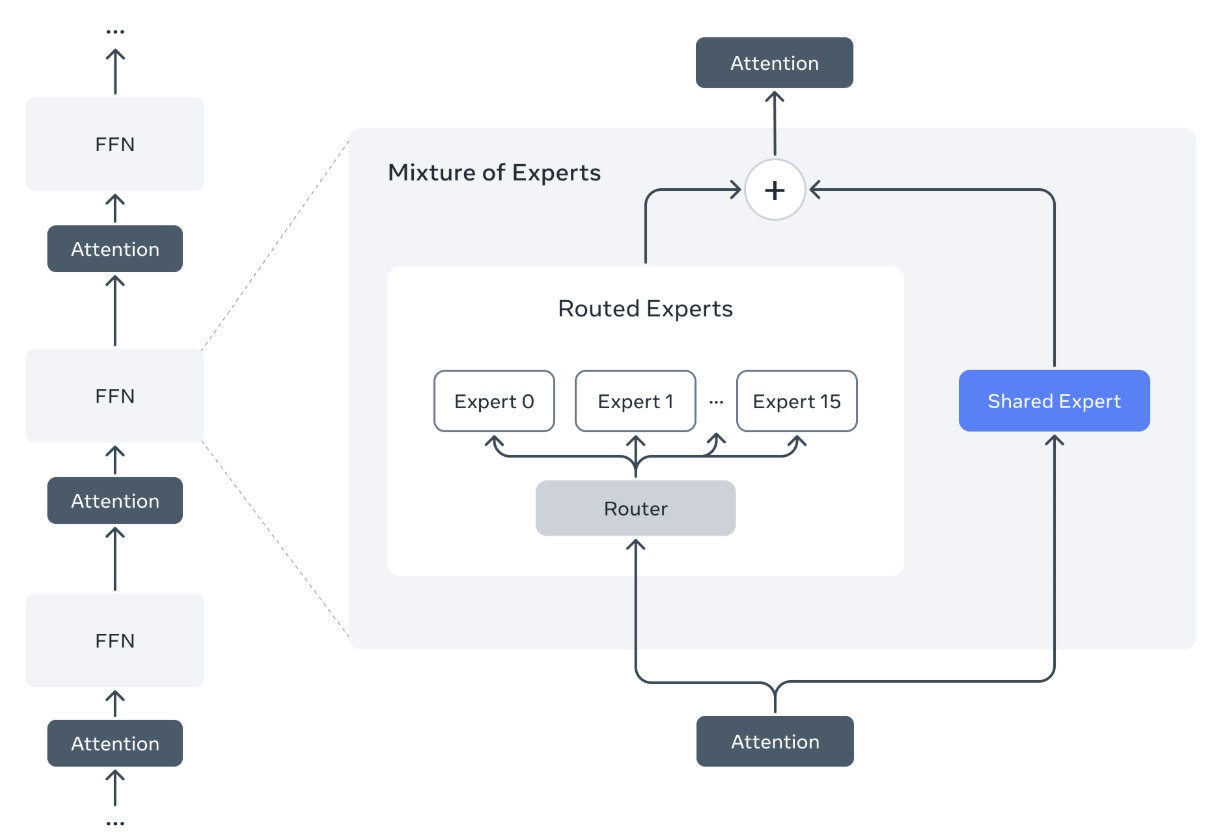

Llama 4 Behemoth: A 288B active parameter model with 16 experts and 2 trillion total parameters. Behemoth is in preview and still training, and Meta describes it as its most intelligent teacher model for distillation.

Meta said other Llama 4 models in its suite are distilled from Behemoth, which outperforms GPT-4.5, Claude Sonnet 3.7 and Gemini 2.0 Pro on multiple STEM benchmarks.

Llama 4 Maverick: A 17B active parameter model with 128 experts and 400B total parameters. Maverick, which is available now, is natively multimodal with a 1M-token context length.

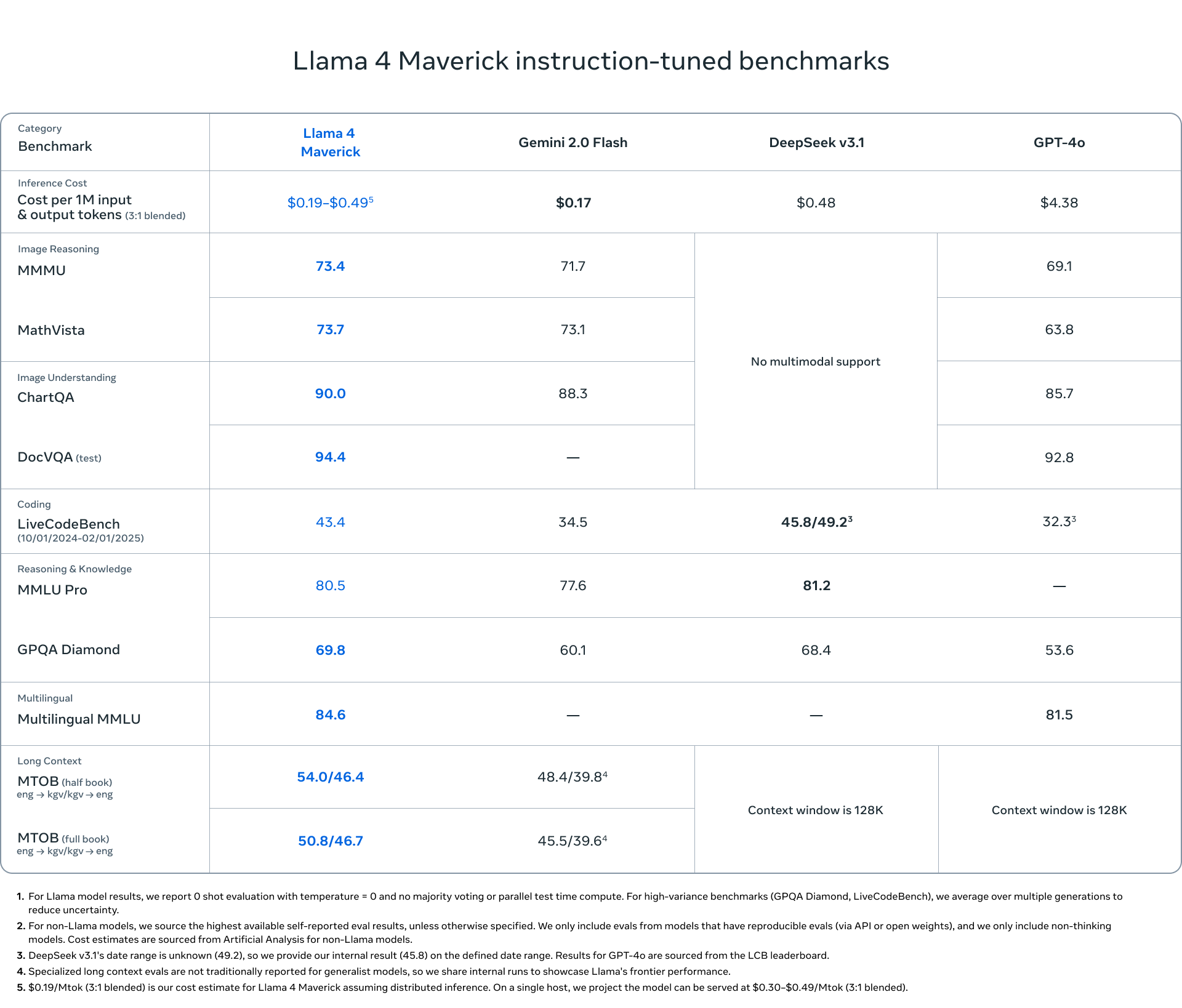

Meta said Maverick beats OpenAI's GPT-4o and Gemini 2.0 Flash and has comparable results to DeepSeek v3 on reasoning and coding.

Llama 4 Scout: A 17B active parameter model available now with 16 experts and 109B total parameters. Scout has a 10M context length and is optimized for inference.

Meta said Scout is more powerful than all of the previous Llama models and fits on a single Nvidia H100 GPU. Meta also said Scout outperforms Gemma 3, Gemini 2.0 Flash-Lite and Mistral 3.1.
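To put the parameter counts above in perspective, here is a rough back-of-the-envelope sketch in Python. It computes what share of each model's parameters is active for a given token, then estimates how much memory Scout's weights alone would need at a few precisions. The bytes-per-parameter figures, the 80 GB H100 capacity and the helper names are assumptions for illustration, not numbers from Meta, and the estimate ignores the KV cache, activations and runtime overhead.

```python
# Parameter counts as quoted in the article, in billions.
# Behemoth's total is a round "2 trillion", so treat it as approximate.
MODELS = {
    "Behemoth": {"active_b": 288, "total_b": 2000},
    "Maverick": {"active_b": 17, "total_b": 400},
    "Scout": {"active_b": 17, "total_b": 109},
}

# Assumption: bytes needed to store one weight at each precision.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}

# Assumption: the 80 GB H100 variant, counted in decimal gigabytes.
H100_MEMORY_GB = 80


def active_fraction(active_b, total_b):
    """Share of the total parameters that are active for a single token."""
    return active_b / total_b


def weight_memory_gb(total_b, precision):
    """Weights-only footprint in GB (billions of params x bytes per param)."""
    return total_b * BYTES_PER_PARAM[precision]


for name, params in MODELS.items():
    share = active_fraction(params["active_b"], params["total_b"])
    print(f"{name}: {share:.1%} of parameters active per token")

for precision in BYTES_PER_PARAM:
    gb = weight_memory_gb(MODELS["Scout"]["total_b"], precision)
    verdict = "fits" if gb <= H100_MEMORY_GB else "does not fit"
    print(f"Scout weights at {precision}: {gb:.1f} GB, {verdict} in {H100_MEMORY_GB} GB")
```

Under these assumptions, Scout's 109B weights come to 218 GB at 16-bit, 109 GB at 8-bit and 54.5 GB at 4-bit, so in this sketch the single-GPU claim only works out with 4-bit weights. The active share is roughly 4% for Maverick and about 14% to 16% for Behemoth and Scout, which is why a model with a large total parameter count can still be comparatively cheap to run per token.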

Meta's Llama 4 launch is notable because enterprises widely use Llama models via the big three cloud providers. AWS said Llama 4 is available on Amazon Bedrock, Microsoft Azure features it on Azure AI Foundry and Google Cloud has the LLM family on Vertex AI. Companies are taking Llama models, which are available on Hugging Face and Llama.com, and tailoring them to specific use cases.

Meta CEO Mark Zuckerberg has said the company's goal is to make Llama the flagship open source model and to compete with or top proprietary competitors. Meta is also using Llama 4 throughout its properties, so the easiest way to take it for a spin is through WhatsApp, Instagram or Facebook.

Here's a look at the Llama 4 architecture followed by benchmarks for Maverick.

Constellation Research's take

Constellation Research analyst Holger Mueller said:

"If someone wonders who is the leader in a tech category, watch the announcements before a major user conference by rivals. In this case, Google Cloud Next is looming, and both Microsoft and Meta make their boldest AI announcements yet. In Meta's case, even previewing its Behemoth model is designed to stake out new ground. The Meta pitch of providing Llama across the three major cloud providers appeals to CxOs as it allows AI automation portability across cloud providers. On the flip side, the gap to Google is clear when it comes to Meta. Llama 4 is 12 months late with the 1M context window and being multimodal with Llama 4 Maverick. That was the news at Google Cloud Next in 2024."