The legacy of DeepSeek will have little to do with the engineering and performance of the model. Its real impact will be that it shifted the AI workload conversation from hardware and GPUs to efficiency, cost relative to performance and the application layer.

DeepSeek came up repeatedly on earnings conference calls, with analysts asking whether it makes sense to spend so much on AI infrastructure. It's a valid question, but it's too early to answer.


Tech giants did their best to justify the AI spend. After all, more efficient models could mean enterprises spend less on infrastructure. Nevertheless, Alphabet will spend $75 billion on capital expenditures in 2025. Microsoft said it will spend $80 billion on AI data centers. Meta is planning to spend $60 billion to $65 billion on AI in 2025 and end the year with 1.3 million GPUs. Amazon’s capital expenditures, which are on a run rate of $105 billion a year, also cover distribution centers, supply chain improvements and technology.

The high-level takeaways from tech giants go like this:

- DeepSeek is evidence that foundational models are commoditizing. "I think one of the obvious lessons of DeepSeek-R1 is something that we've been saying for the last two years, which is that the models are commoditizing. Yes, they're getting better across both closed and open, but they're also getting more similar and the price of inference is dropping like a rock," said Palantir CTO Shyam Sankar.

- That commoditization doesn't mean there isn't a need for more infrastructure, at least initially.

- Hyperscalers are watching cheaper models and how they combine with their custom silicon. It's unclear what model commoditization and a shift away from training mean for Nvidia.

- Cheaper models are moving value toward applications and use cases. AI usage will surge, as will edge computing use cases. Enterprises will have a much easier time infusing applications with AI.

The reality is that DeepSeek is an advance that shifted the conversation to optimization and LLM pricing, but the model needs some work relative to other options. We saw DeepSeek put through a rubric on Amazon Bedrock and Amazon SageMaker alongside other models, and the performance was a bit spotty. At times DeepSeek went into a never-ending loop. DeepSeek may be good enough for some use cases, but in many areas it was meh. Nevertheless, DeepSeek plays into the AWS strategy of offering multiple models.


Amazon CEO Andy Jassy said on the company’s fourth quarter earnings call that Amazon Nova, launched at re:Invent, DeepSeek and other LLM choices give enterprises “a plethora of new models and features in Amazon Bedrock that give customers flexibility and cost savings.”

Jassy continued:

“We were impressed with what DeepSeek has done with some of the training techniques, primarily in flipping the sequencing of reinforcement training, reinforcement learning being earlier and without the human in the loop. We thought that was interesting, ahead of the supervised fine tuning. We also thought some of the inference optimizations they did were also quite interesting. Virtually all the big generative AI apps are going to use multiple model types. Different customers are going to use different models for different types of workloads. The cost of inference will substantially come down.”

In the end, DeepSeek has been a great way to pivot the conversation to cheaper AI, with a dash of China vs. US AI war thrown in.

Alphabet CEO Sundar Pichai said AI workloads and the foundational models underneath them will have to adhere to the Pareto frontier: the set of options for a system with multiple objectives in which no objective can be improved without making another one worse.
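To make the Pareto frontier idea concrete, here is a minimal sketch in Python. The model names and numbers are entirely hypothetical (not real pricing or benchmark data), and the three objectives simply mirror the price, performance and latency factors Pichai cites below. An option stays on the frontier only if no other option is at least as good on every objective and strictly better on at least one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    # Hypothetical model option; all fields are illustrative assumptions.
    name: str
    cost_per_query: float  # lower is better (made-up units)
    quality: float         # higher is better (made-up score)
    latency_ms: float      # lower is better


def dominates(a: ModelOption, b: ModelOption) -> bool:
    """True if a is no worse than b on every objective and strictly better on one."""
    no_worse = (
        a.cost_per_query <= b.cost_per_query
        and a.quality >= b.quality
        and a.latency_ms <= b.latency_ms
    )
    strictly_better = (
        a.cost_per_query < b.cost_per_query
        or a.quality > b.quality
        or a.latency_ms < b.latency_ms
    )
    return no_worse and strictly_better


def pareto_frontier(options: list[ModelOption]) -> list[ModelOption]:
    """Keep only the options that no other option dominates."""
    return [o for o in options if not any(dominates(other, o) for other in options)]


if __name__ == "__main__":
    # Hypothetical candidates, for illustration only.
    candidates = [
        ModelOption("model-a", cost_per_query=1.00, quality=90, latency_ms=800),
        ModelOption("model-b", cost_per_query=0.20, quality=80, latency_ms=300),
        ModelOption("model-c", cost_per_query=0.50, quality=75, latency_ms=400),
        ModelOption("model-d", cost_per_query=0.05, quality=60, latency_ms=150),
    ]
    for option in pareto_frontier(candidates):
        print(option.name)
```

With these made-up numbers the script prints model-a, model-b and model-d. Model-c drops out because model-b is cheaper, higher quality and faster; each remaining option represents a different trade-off, and which one a workload should use depends on how it weighs cost, quality and latency.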

With Google Cloud now capacity constrained due to AI demand, Alphabet has no choice but to spend heavily on infrastructure. Microsoft took the plunge and noted that it can meet future AI demand due to its data center additions.

Nevertheless, it's worth highlighting what Pichai had to say. In a nutshell, scaling AI infrastructure and the commoditization of large language models aren't completely in conflict.

Pichai credited DeepSeek for its advances and said Gemini ranks well on price, performance and latency, and all three matter for use cases. He added:

"You can drive a lot of efficiency to serve these models really well. I think a lot of it is our strength of the full stack development into an optimization, our obsession with cost per query, all of that, I think, sets us up well for the workloads ahead, both to serve billions of users across our products and on the cloud side.

If you look at the trajectory over the past 3 years, the proportion of the spend towards inference compared to training has been increasing, which is good because obviously inference is to support businesses with good ROIC. The reasoning models, if anything, accelerates that trend because it's obviously scaling upon inference dimension as well.

The reason we are so excited about the AI opportunity is we know we can drive extraordinary use cases because the cost of actually using it is going to keep coming down, which will make more use cases feasible. And that's the opportunity space. It's as big as it comes. And that's why you're seeing us invest to meet that moment."

Microsoft CEO Satya Nadella had a similar take. He said on Microsoft’s earnings call:

"What's happening with AI is no different than what was happening with the regular compute cycle. It's always about bending the curve and then putting more points up the curve. There are the AI scaling laws, both the pre-training and the inference time compute that compound and that's all software."

Nadella said DeepSeek is one data point showing that models are being commoditized and broadly used, and software customers will benefit. Given that Microsoft sells that usage through Azure, DeepSeek is just fine with Nadella.

Meta CEO Mark Zuckerberg said it's too early to know what DeepSeek means for infrastructure spending. "There are a bunch of trends that are happening here all at once. There's already sort of a debate around how much of the compute infrastructure that we're using is going to go towards pretraining versus as you get more of these reasoning time models or reasoning models where you get more of the intelligence by putting more of the compute into inference, whether [that] just will shift how we use our compute infrastructure towards that," said Zuckerberg.

Just because models become less expensive doesn't mean the demand for compute changes, said Zuckerberg. "One of the new properties that's emerged is the ability to apply more compute at inference time in order to generate a higher level of intelligence and a higher quality of service," said Zuckerberg. "I continue to think that investing very heavily in CapEx and infra is going to be a strategic advantage over time. It's possible that we'll learn otherwise at some point, but I just think it's way too early to call that."

And about that edge computing hook…

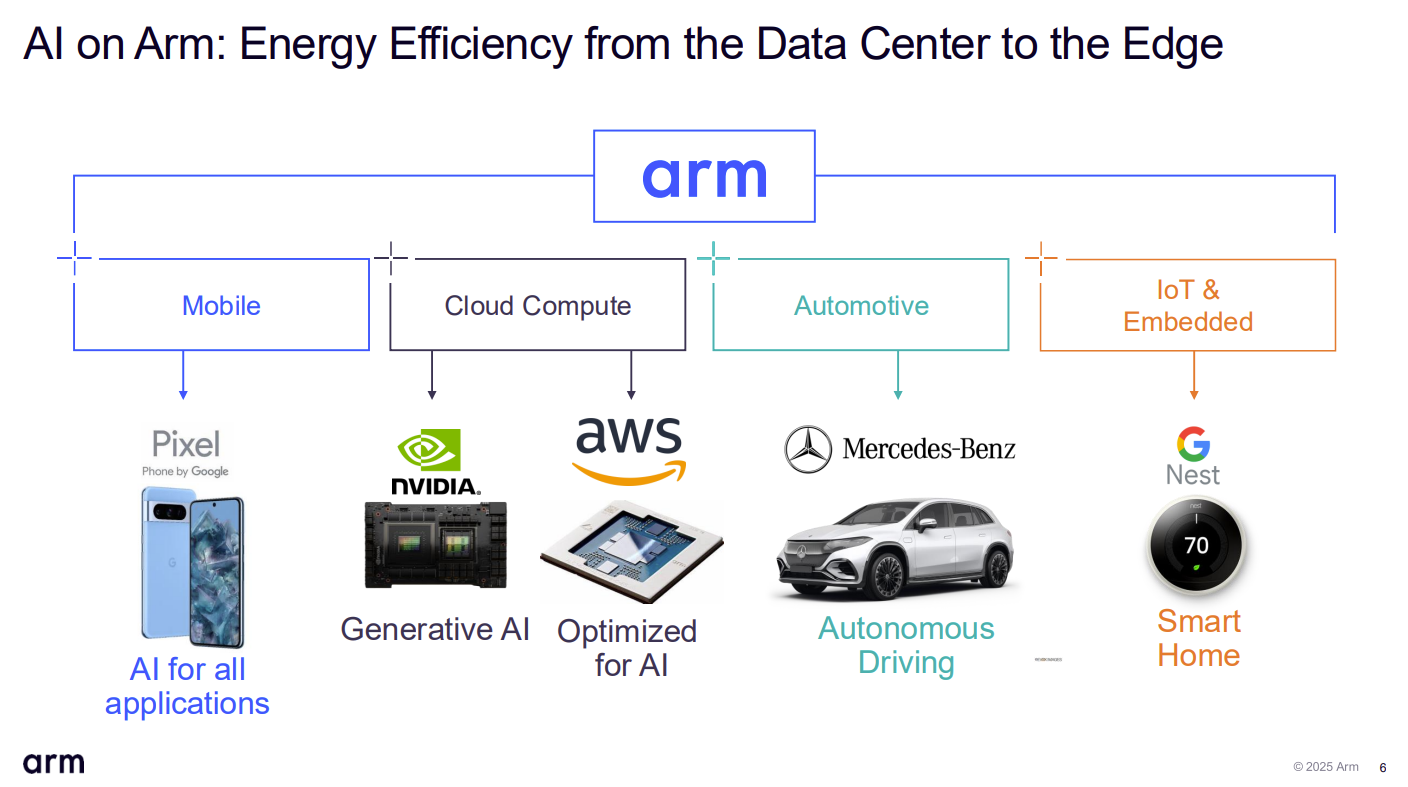

The discussion about DeepSeek, inference and lower-cost AI has also highlighted how edge devices, such as PCs, smartphones, Nvidia's Project Digits and more, are going to be a larger part of the AI inference mix. Here's what Arm CEO Rene Haas said on the company's third quarter earnings call:

"DeepSeek is great for the industry, because it drives efficiency, it lowers the cost. It expands the demand for overall compute. When you think about the application to Arm, given the fact that AI workloads will need to run everywhere and lower-cost inference, a more efficient inference makes it easier to run these applications in areas where power is constrained. As wonderful a product as Grace Blackwell is, you'd never be able to put it in a cell phone, you'd never be able to put it into earbuds, you can't even put it into a car. But Arm is in all those places. I think when you drive down the overall cost of inference, it's great."

Haas added that the industry will still need serious compute, so the AI buildout will continue. "We're nowhere near the capabilities that could be transformational in terms of what AI can do," he said.

Qualcomm CEO Cristiano Amon said DeepSeek illustrates how AI will play into edge use cases:

"We also remain very optimistic about the growing edge AI opportunity across our business, particularly as we see the next cycle of AI innovation and scale. DeepSeek-R1 and other similar models recently demonstrated the AI models are developing faster, becoming smaller, more capable and efficient, and now able to run directly on device. In fact, DeepSeek-R1 distilled models were running on Snapdragon powered smartphones and PCs within just a few days of its release.

As we entered the era of AI inference, we expect that while training will continue in the cloud, inference will run increasingly on-device, making AI more accessible, customizable, and efficient. This will encourage the development of more targeted, purpose-oriented models and applications."