Amazon Web Services launched Graviton4, its custom general-purpose processor, with big improvements over last year's Graviton3. AWS also launched the latest versions of its Trainium and Inferentia processors, two AI accelerators that may be able to bring down the cost of model training and inference.

The takeaway: AWS plans to push its custom silicon cadence to gain more workloads even as it partners with big guns such as Nvidia, Intel and AMD.

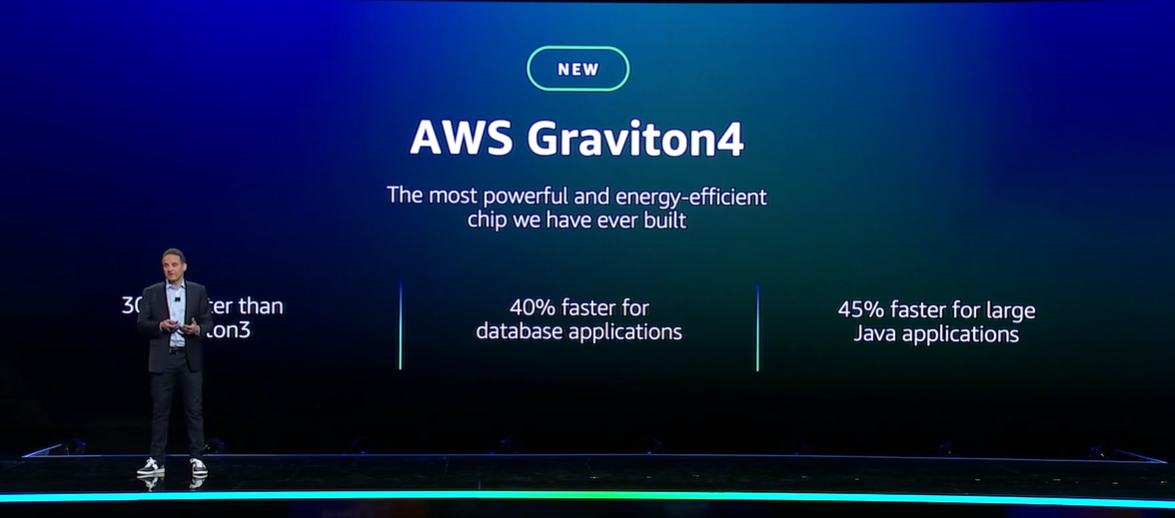

Graviton4 is billed as AWS' "most powerful and energy efficient chip that we have ever built." The launch also shows a faster cadence for AWS' processors. Graviton launched in 2018, with Graviton2 following two years later. Graviton3 launched last year.

According to AWS, Graviton4 delivers up to 30% better compute performance than Graviton3, and is up to 30% faster for web applications and 40% faster for database applications. AWS has 150 different Graviton-powered Amazon EC2 instance types globally, has built more than 2 million Graviton processors, and has more than 50,000 customers, including Datadog, DirecTV, Discovery, Formula 1 (F1), NextRoll, Nielsen, Pinterest, SAP, Snowflake, Sprinklr, Stripe and Zendesk.

Hyperscalers are racing to create custom processors that give enterprises an option to cut compute costs. While most of the focus is on model training and inference, AWS' custom processor strategy is wider. In addition to Graviton, AWS has built Trainium and Inferentia for AI workloads.

Adam Selipsky, CEO of AWS, said during his re:Invent keynote that Graviton is an effort to lower the cost of cloud compute. "We have more than 50,000 customers for Graviton," said Selipsky, who cited SAP as a key customer. "Other cloud providers have not delivered on their first server processors," he said.

Graviton4 will power R8g instances for EC2, with more instance types planned. R8g instances are in preview.

Juergen Mueller, CTO of SAP, said Graviton-based EC2 instances have provided a 35% bump in price performance for analytical workloads. SAP will be validating Graviton4 performance.

On the AI side, Trainium targets model training and Inferentia targets inference. Today, AI workloads are dominated by Nvidia, and AMD is entering the market.

However, AWS has had its own AI chips on the market and has been able to win workloads from enterprises that may not need Nvidia's horsepower. Here's the breakdown:

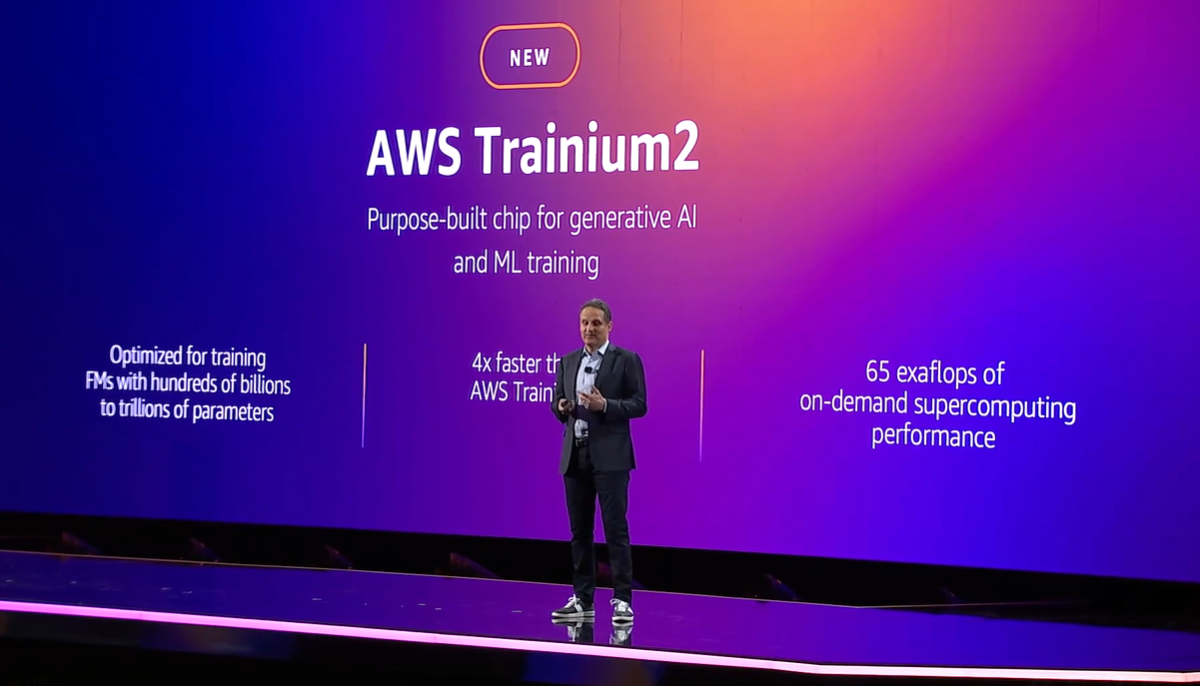

- For training generative AI models, AWS launched Trainium2, which is up to 4x faster than the first-generation Trainium and can be deployed in clusters delivering up to 65 exaflops of compute.

- For running generative AI models (inference), AWS launched Inferentia2, which has up to 4x the throughput and up to 10x lower latency than the previous version.

"We need to keep pushing on price/performance on training and inference," said Selipsky, who again noted that other cloud providers were behind on custom silicon. Microsoft announced its own AI processors at Ignite 2023.

Constellation Research analyst Holger Mueller said:

"AWS pushes its custom silicon with version 2 of its Trainium and Inferentia chips, as well as its fundamental Graviton chip. When a large ISV like SAP moves 4M lines of code and sees cost savings, it is something to take note of. Equally, the sustainability aspect is key for the next version of custom silicon: it saves real money."

During the keynote Selipsky was sure to note that Nvidia remains a key partner for AWS, which has a bevy of Nvidia GPU instances. Nvidia CEO Jensen Huang appeared on stage to outline the next phase of the partnership with AWS.

Selipsky said AWS will add Nvidia DGX Cloud and Nvidia's latest GPUs to its platform. Huang said Nvidia will build its largest AI Foundry on AWS. Note that Huang also appeared with Microsoft CEO Satya Nadella to tout Nvidia's partnership on Azure.

However, AWS is looking to broaden GPU workloads and the bet is that it'll handle a lot of those on its own silicon.