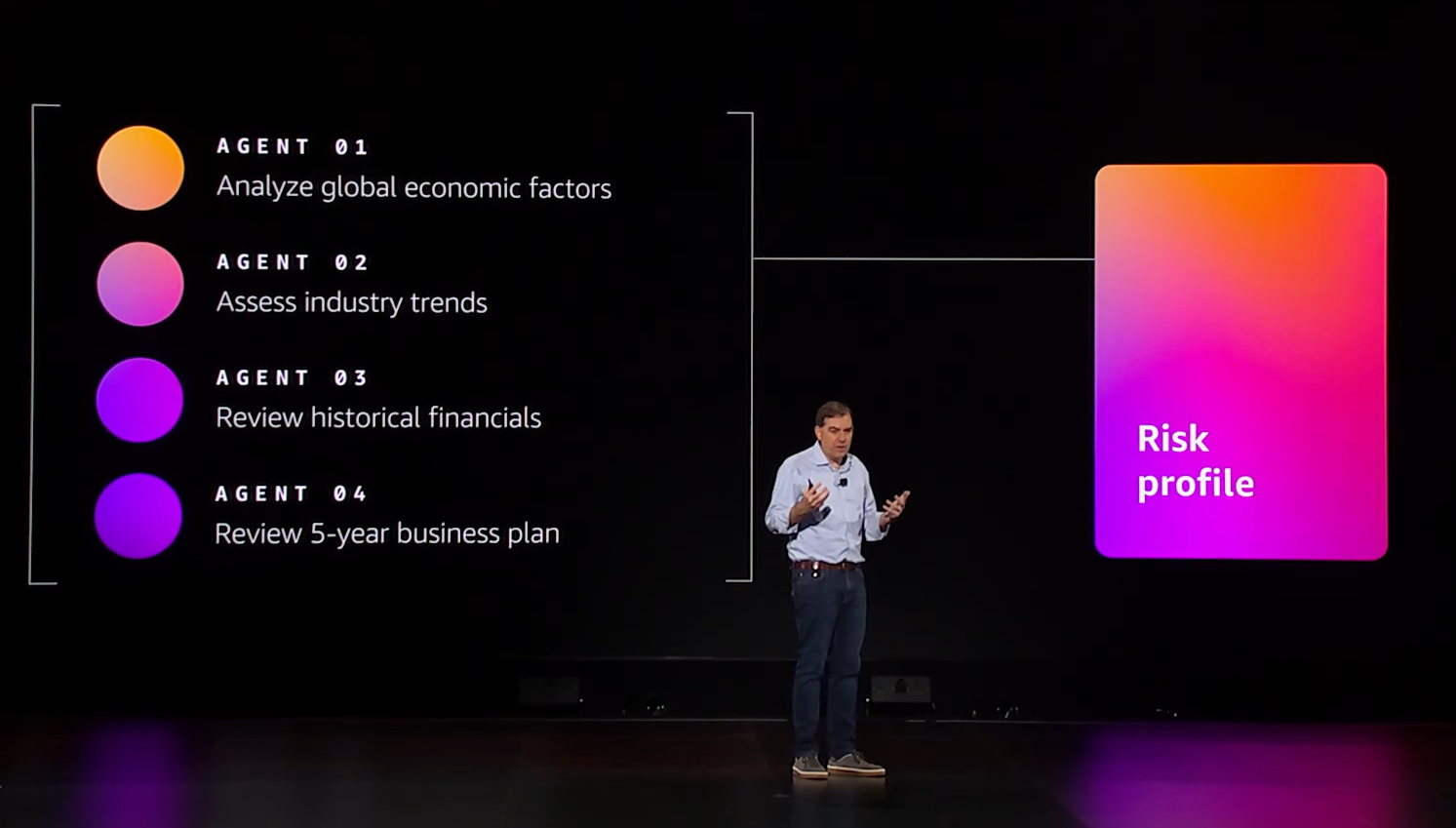

AWS is expanding Amazon Bedrock to enable multi-agent collaboration for more complex tasks.

With multi-agent collaboration, Bedrock will use models as a team with planning, structure, specialization and parallel work.

Collaboration and orchestration of agentic AI will be a big theme in 2025, and vendors are trying to get ahead of agent coordination before enterprises deploy agents at scale. First, the industry may have to agree on standards so AI agents can communicate.

- AWS revamps S3, databases with eye on AI, analytics workloads

- AWS scales up Trainium2 with UltraServer, touts Apple, Anthropic as customers

- AWS outlines new data center, server, cooling designs for AI workloads

- AWS re:Invent 2024: Four AWS customer vignettes with Merck, Capital One, Sprinklr, Goldman Sachs

- Oracle Database@AWS hits limited preview

Speaking during his AWS re:Invent 2024 keynote, CEO Matt Garman said:

"If you think about hundreds and hundreds of agents all having to interact, come back, share data, go back, that suddenly the complexity of managing the system has balloons to be completely unmanageable.

"Now Bedrock agents can support complex workflows. You create this series of individual agents that are really designed for your special and individualized tasks. Then you create this supervisor agent, and think about it as acting as the brain for your complex workflow. It ensures all this collaboration against all your specialized agents."
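As a rough illustration of the supervisor pattern Garman describes, here is a minimal Python sketch. It is not the Bedrock API: the `Supervisor` class, the two specialist functions and the toy planning step are all hypothetical names invented for this example. It only shows the shape of the idea, with one coordinator splitting a request, running the specialists in parallel and collecting their results.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Hypothetical specialist agents: each is modeled as a plain function
# from a subtask description to a result string. Real agents would call
# a foundation model; these stubs only illustrate the control flow.
Agent = Callable[[str], str]


def research_agent(task: str) -> str:
    return f"research notes for '{task}'"


def pricing_agent(task: str) -> str:
    return f"pricing estimate for '{task}'"


class Supervisor:
    """Plans subtasks, fans them out to specialists in parallel, merges results."""

    def __init__(self, specialists: Dict[str, Agent]) -> None:
        self.specialists = specialists

    def plan(self, request: str) -> Dict[str, str]:
        # Toy plan (an assumption): every specialist receives the same request.
        return {name: request for name in self.specialists}

    def run(self, request: str) -> Dict[str, str]:
        plan = self.plan(request)
        with ThreadPoolExecutor() as pool:
            futures = {
                name: pool.submit(self.specialists[name], subtask)
                for name, subtask in plan.items()
            }
            # Collect each specialist's answer under its own name.
            return {name: future.result() for name, future in futures.items()}


supervisor = Supervisor({"research": research_agent, "pricing": pricing_agent})
results = supervisor.run("launch plan")
```

The point of the design is that callers talk only to the supervisor, so adding or swapping a specialist does not change how the workflow is invoked.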

Amazon CEO Andy Jassy said Alexa's overhaul will be powered by some of Bedrock's orchestration tools. He said:

"We are in the process right now rearchitecting the brains of Alexa with multiple foundation models. And it's going to not only help Alexa answer questions even better, but it's going to do what very few generative AI applications do today, which is to understand and anticipate your needs, actually take action for you. So you can expect to see this in the coming months."

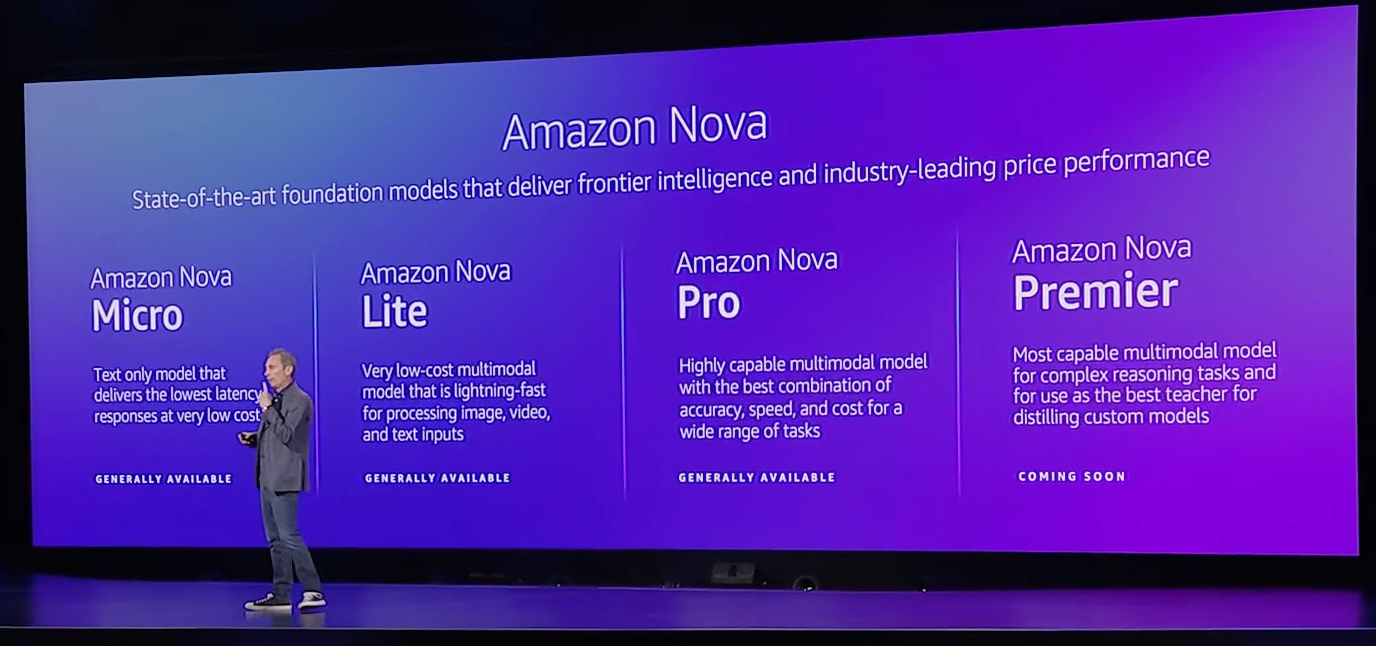

Jassy said the Bedrock capabilities are all about model choices. He said Amazon uses a lot of Anthropic's Claude family of models but also leverages Meta's Llama. "Choice matters with model selection," said Jassy. "It's one of the reasons why we work on our own frontier models."

AWS launched a series of models called Nova. Think of Nova as the LLM equivalent of what Amazon is doing with Trainium.

Amazon Bedrock will also get the following:

- Intelligent prompt routing, which will automatically route requests among foundation models in the same family. The aim is to provide high-quality responses with low cost and latency. Routing will be based on each model's predicted performance for a given request. Customers can also provide ground truth data to improve predictions.

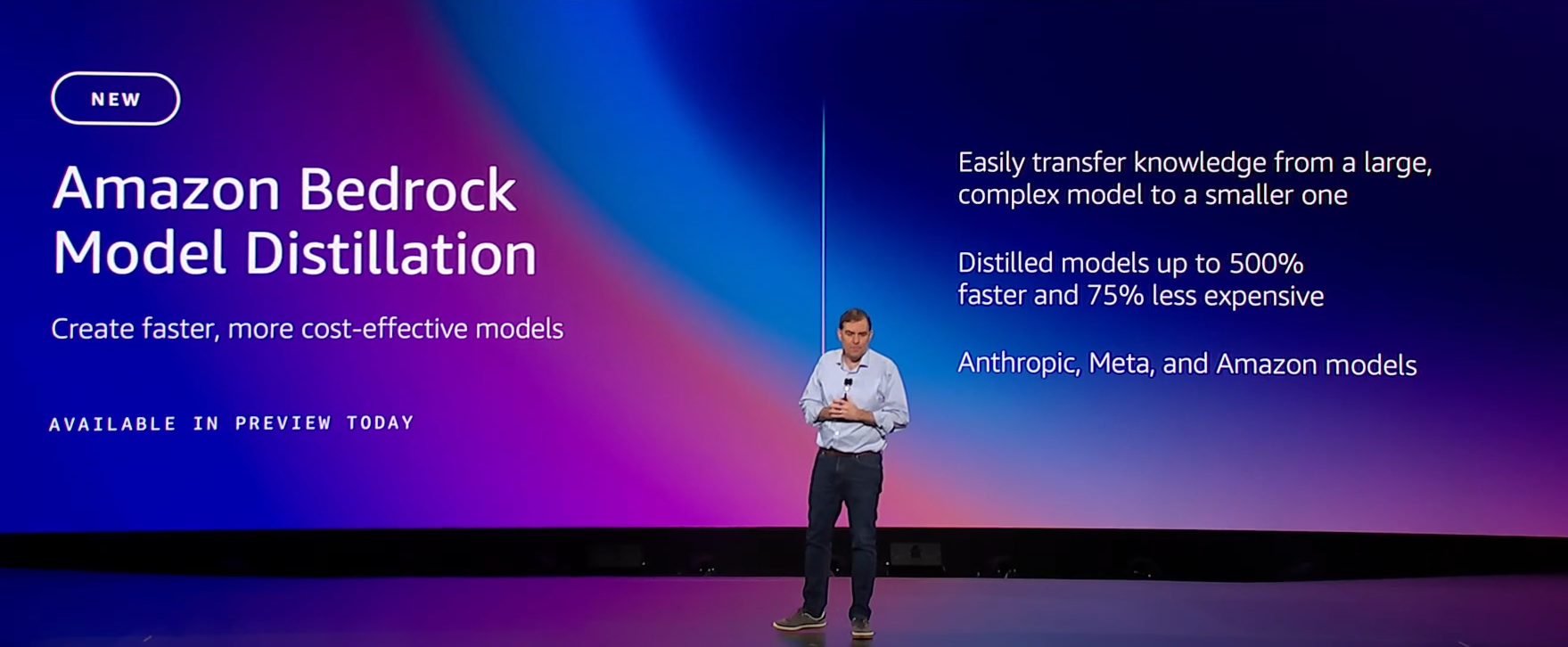

- Model distillation, so customers can create smaller, compressed models with high accuracy and lower latency and costs. Customers can distill models by choosing a base model and providing training data.

- Automated reasoning checks, which will validate or invalidate genAI responses using automated reasoning and proofs. The feature will explain why a generative AI response is accurate or inaccurate using provably sound mathematical techniques. These proofs are based on domain models built from regulations, tax law and other documents.

- New models from Luma AI, a specialist in creating video clips from text and images, and Poolside, which specializes in models for software engineering. Amazon Bedrock has also expanded its selection of models from current providers such as AI21 Labs, Anthropic, Cohere, Meta, Mistral, Stability AI and Amazon.
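To make the intelligent prompt routing item above more concrete, the sketch below shows one way a router could trade off predicted quality against cost within a model family. It is an illustration under stated assumptions, not how Bedrock implements routing: the `Model` class, the `route` function, the quality predictors, the 0.8 threshold and the cost figures are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Model:
    name: str
    cost_per_request: float                   # hypothetical relative cost
    predict_quality: Callable[[str], float]   # predicted quality in [0, 1]


def route(prompt: str, models: List[Model], min_quality: float = 0.8) -> Model:
    """Pick the cheapest model predicted to be good enough for this prompt.

    If no model clears the quality bar, fall back to the model with the
    highest predicted quality.
    """
    good_enough = [m for m in models if m.predict_quality(prompt) >= min_quality]
    if good_enough:
        return min(good_enough, key=lambda m: m.cost_per_request)
    return max(models, key=lambda m: m.predict_quality(prompt))


# Toy predictors (assumptions): the small model is predicted to do well only
# on short prompts, while the large model is predicted to do well on anything.
small = Model("family-small", 1.0, lambda p: 0.9 if len(p.split()) < 20 else 0.6)
large = Model("family-large", 5.0, lambda p: 0.95)

short_choice = route("What is S3?", [small, large])
long_choice = route(" ".join(["word"] * 30), [small, large])
```

With these toy predictors, the short prompt is routed to the cheaper model and the long prompt to the larger one. In a real system the predictors would be learned, and customer-supplied ground truth data would be one way to improve them.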