Enterprises will be able to import their own large language models (LLMs) into Amazon Bedrock and evaluate models for specific use cases, Amazon Web Services said. AWS also made two Amazon Titan models generally available.

The ability to import custom models and evaluate them plays into the broader themes of generative AI choice and orchestration. AWS' Bedrock bet is that enterprises will use multiple models and need a neutral platform to orchestrate them.

In addition, enterprises are showing broad interest in using open-source models and then customizing them with their own data.

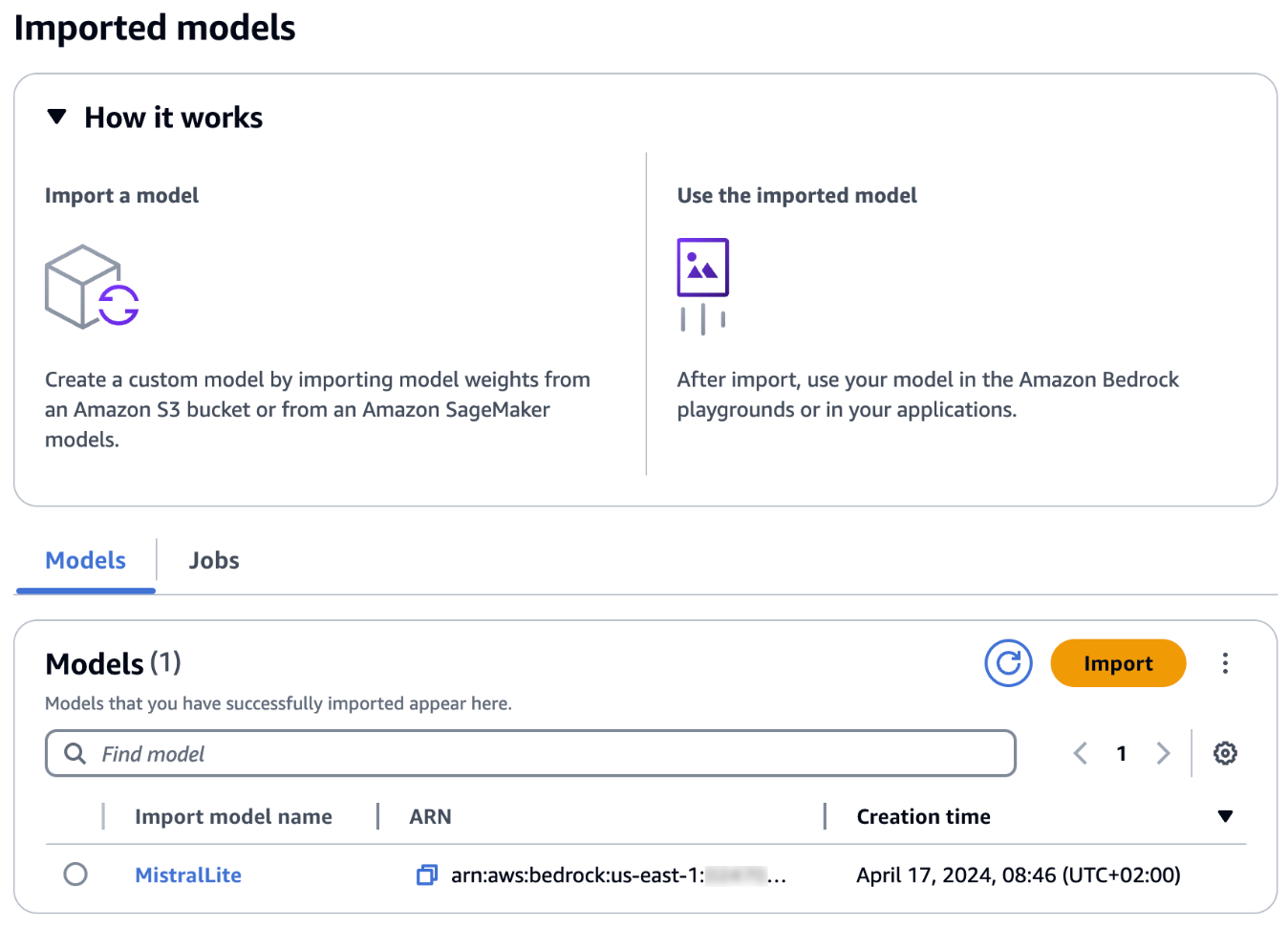

According to AWS, Amazon Bedrock Custom Model Import will let companies import their custom models into Bedrock and access them via API, alongside the other model choices Bedrock already offers.

Enterprises will be able to add models that were customized in Amazon SageMaker or with a third-party tool provider to Amazon Bedrock, with automated validation. AWS said imported custom models and native Amazon Bedrock models will use the same API. Custom Model Import is in private preview and supports the Flan-T5, Llama and Mistral open model architectures, with more planned.

AWS also said Model Evaluation in Amazon Bedrock is now available. Model Evaluation analyzes and compares models across use cases, workflows and other evaluation criteria. AWS is also adding Guardrails for Bedrock, which gives enterprises control over model responses.

As for the Titan models, AWS said Amazon Titan Image Generator, which applies invisible watermarks, and the latest Amazon Titan Text Embeddings are generally available, exclusively in Bedrock. Amazon Titan Text Embeddings V2 is optimized for Retrieval-Augmented Generation (RAG) use cases.
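For readers who want to see what calling the embeddings model might look like, here is a minimal Python sketch. It assumes boto3's `bedrock-runtime` client, its `invoke_model` call, and the model ID `amazon.titan-embed-text-v2:0`; the helpers `build_request`, `embed`, `cosine` and `top_k` are hypothetical illustrations, not an AWS sample. The sketch requests an embedding from Titan Text Embeddings V2 and ranks stored passage vectors by cosine similarity, which is the retrieval step in a RAG pipeline.

```python
import json
import math
from typing import Dict, List, Sequence

# Assumed Bedrock model ID for Titan Text Embeddings V2; verify it for your region.
TITAN_EMBED_V2 = "amazon.titan-embed-text-v2:0"


def build_request(text: str, dimensions: int = 512, normalize: bool = True) -> str:
    """Serialize a Titan Text Embeddings V2 request body as a JSON string."""
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": normalize})


def embed(client, text: str, dimensions: int = 512) -> List[float]:
    """Call InvokeModel on a bedrock-runtime client and return the embedding vector."""
    response = client.invoke_model(
        modelId=TITAN_EMBED_V2,
        body=build_request(text, dimensions),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it and decode the JSON payload.
    payload = json.loads(response["body"].read())
    return payload["embedding"]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: Sequence[float], passages: Dict[str, List[float]], k: int = 3) -> List[str]:
    """Return the k passage keys whose embeddings are most similar to the query."""
    ranked = sorted(passages, key=lambda p: cosine(query_vec, passages[p]), reverse=True)
    return ranked[:k]
```

To call the service, pass `boto3.client("bedrock-runtime")` as `client`; this requires AWS credentials and model access in a supported region. Because AWS says imported custom models use the same API as native Bedrock models, invoking one would in principle mean changing `modelId` and the request body format to match that model.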