Google Cloud announcements bring deep learning and big data analytics beyond data scientists, but enterprises will want more.

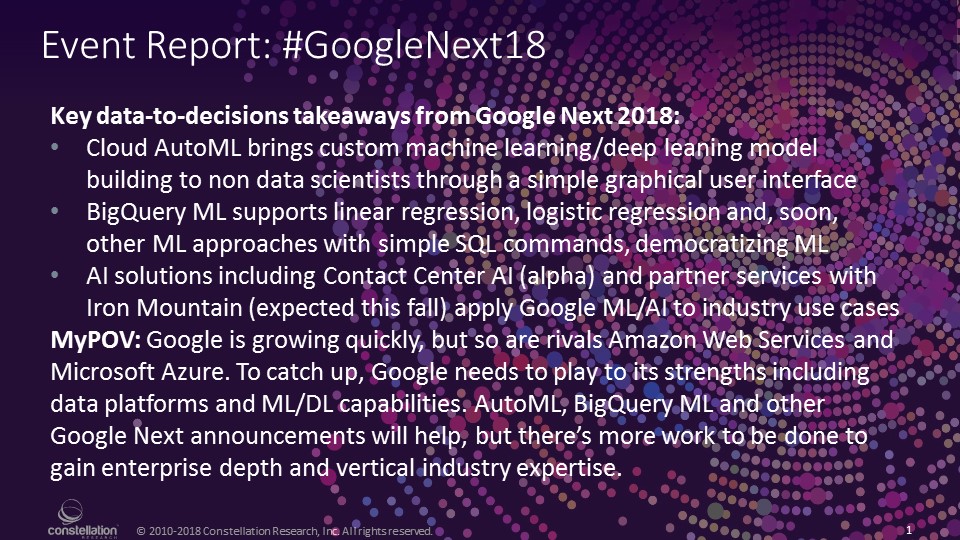

If last week’s Google Next 2018 event is any indication, Google Cloud is growing quickly. Registrations for the July 23-26 event topped 25,000, and actual attendance easily doubled the 10,000 at Google Next 2017. That’s good, but if this public cloud is going to catch up with also-fast-growing rivals Amazon Web Services (AWS) and Microsoft Azure, Google is going to have to play to its strengths.

From my perspective, Google’s biggest appeals to big businesses are its deep learning (DL), machine learning (ML) and data platform capabilities (though I’m biased and my Constellation colleagues who follow G Suite and the rest of Google Cloud Platform (GCP) cloud infrastructure might see it otherwise). Among the many announcements at Google Next 18, the biggest steps forward – and the ones I see as most likely to accelerate growth – were those aimed at expanding the use of Google’s DL, ML and data platform capabilities. Here’s a closer look.

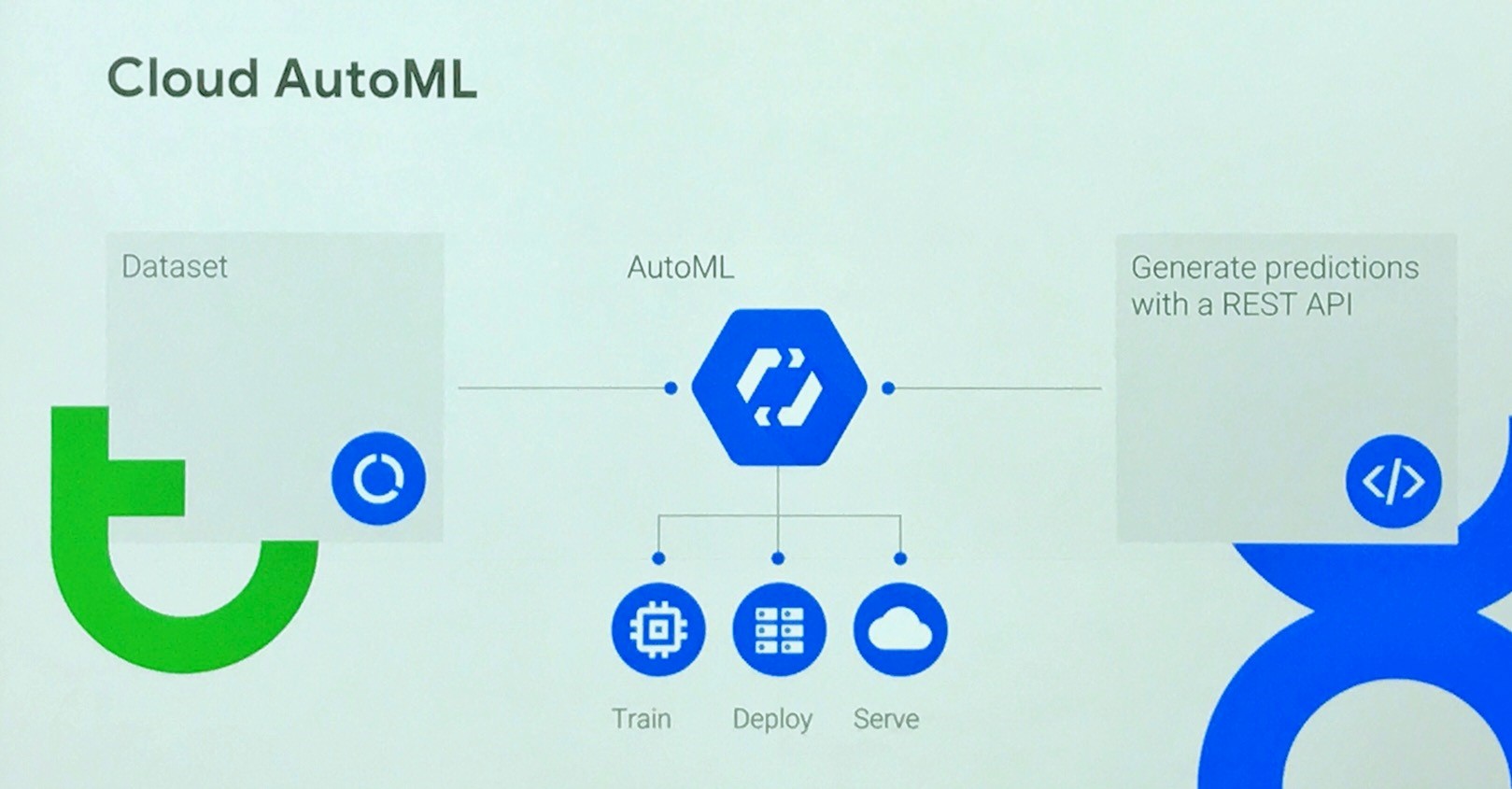

Cloud AutoML Democratizes Data Science

If I had to cite the single biggest announcement of Google Next 18, I’d say it was the beta release of Cloud AutoML, which promises to bring custom DL model building capabilities to organizations even if they don’t have data scientists on staff. It’s a self-service, democratized option that builds on the Google Cloud ML Engine, the data-scientist-oriented offering that became generally available in March 2017.

To review, Cloud ML Engine is a managed machine learning service that lets you train, deploy and export custom models based on Google’s open sourced TensorFlow ML framework or Keras (an open neural net framework written in Python that can run on top of TensorFlow). Cloud ML Engine features automatic hyperparameter tuning and tools for job management and graphics processing unit (GPU)-based training and prediction. Models are also portable, so you can build and train on GCP but then export the models and run them on premises.

Of note to Cloud ML fans, the company announced at Google Next that the engine has added support for training and prediction using scikit-learn (for Python-based machine learning) and XGBoost (for gradient boosting in C++, Java, Python or R).

You need to know what you’re doing to use the Cloud ML Engine, so to make things easier for non-data-science-experts, Google introduced a series of machine learning services based on pre-built models. Developers can simply invoke application programming interfaces (APIs) to tap into the services for Natural Language text analysis, Speech-to-Text and Text-to-Speech conversion, and machine Vision image detection.
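As a rough illustration of what invoking one of these services involves, the Python sketch below assembles the JSON body for a label-detection call to the Vision service. The endpoint URL and field names follow the public Cloud Vision REST API as commonly documented and should be checked against the current reference. The helper name `build_label_request` and the placeholder image bytes are hypothetical, and the sketch deliberately stops short of sending the request, since that requires credentials.

```python
import base64
import json

# Assumed REST endpoint for the Vision service; verify against current docs.
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def build_label_request(image_bytes, max_results=5):
    """Build the JSON-serializable body for a label-detection request.

    Hypothetical helper: it only assembles the payload and sends nothing.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "requests": [
            {
                "image": {"content": encoded},
                "features": [
                    {"type": "LABEL_DETECTION", "maxResults": max_results}
                ],
            }
        ]
    }


# Placeholder bytes stand in for a real image file.
payload = build_label_request(b"placeholder image bytes", max_results=3)
body = json.dumps(payload)
```

An authenticated HTTP POST of `body` to `VISION_ENDPOINT` would be the remaining step; the response format is not shown here.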

Invoking a service through an API is easy enough, but the downside of these general-purpose, pre-built models is that they are generic. The idea with AutoML is to start with the pre-built models, but then enable non-data-scientist types to customize them through an easy graphical user interface (GUI) and their own data. By taking advantage of all the training that went into the pre-built model, AutoML customers save development time, but they also benefit from more accurate, custom models based on training on data that’s specific to their industry and organization.
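The general idea of reusing a pre-built model and training only a small custom layer on your own data can be sketched in a few lines of Python. This is a toy illustration of that idea, not a description of how AutoML is implemented: `pretrained_features` is a hypothetical stand-in for a frozen pre-built model, and the "striped versus solid fabric" data is invented for the example.

```python
import math


def pretrained_features(pixels):
    """Hypothetical stand-in for a frozen, pre-built model.

    Maps raw input values to two fixed features: mean and spread.
    """
    mean = sum(pixels) / len(pixels)
    spread = max(pixels) - min(pixels)
    return [mean, spread]


def train_head(examples, labels, epochs=500, lr=0.5):
    """Fit a small logistic-regression layer on top of the frozen features."""
    feats = [pretrained_features(x) for x in examples]
    weights = [0.0] * len(feats[0])
    bias = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            z = bias + sum(w * v for w, v in zip(weights, f))
            p = 1.0 / (1.0 + math.exp(-z))
            err = p - y  # gradient of the log loss with respect to z
            weights = [w - lr * err * v for w, v in zip(weights, f)]
            bias -= lr * err
    return weights, bias


def predict(model, pixels):
    """Return 1 (striped) or 0 (solid) for one input."""
    weights, bias = model
    f = pretrained_features(pixels)
    z = bias + sum(w * v for w, v in zip(weights, f))
    return 1 if z > 0 else 0


# Invented, organization-specific training data: 1 = striped, 0 = solid.
examples = [
    [0.0, 1.0, 0.0, 1.0],
    [0.1, 0.9, 0.1, 0.9],
    [0.5, 0.5, 0.5, 0.5],
    [0.4, 0.5, 0.4, 0.5],
]
labels = [1, 1, 0, 0]

model = train_head(examples, labels)
predictions = [predict(model, x) for x in examples]
```

Only the small layer is trained; the feature extractor is never modified, which is why a handful of labeled examples can be enough in a setup like this.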

Since its initial alpha release in February, Cloud AutoML Vision has been used by selected customers. At Google Next we heard about how retailer Urban Outfitters has used AutoML Vision to build a custom model that recognizes attributes unique to its product imagery. The company says the custom model has improved the search experience on its web site, helping customers to find what they’re after based on visual cues, such as fabric patterns and necklines. These visual cues don’t necessarily show up in textual metadata, and they’re also not trained into the model behind Google’s standard Vision service.

As announced last week, Cloud AutoML is now in beta (so it’s available to all customers) and it has been extended to include AutoML Natural Language and Translation as well as Vision.

MyPOV on Cloud AutoML. This is a great step forward for Google and it will clearly appeal to any company interested in tapping into the power of deep learning without hiring a data scientist. It’s readily apparent to anybody who has compared Google Assistant to the likes of Amazon Alexa, Apple Siri and Microsoft Cortana that Google’s voice and language capabilities are the best available. Cloud AutoML makes state-of-the-art DL accessible to a broad audience, and I think it will appeal to mainstream developers and data scientists alike.

I also appreciate that Google has broadened the appeal of the Cloud ML Engine by adding support for scikit-learn and XGBoost. Not all modeling challenges fit TensorFlow, and these open source options expand the possibilities both on GCP and for exporting and deploying models on premises.

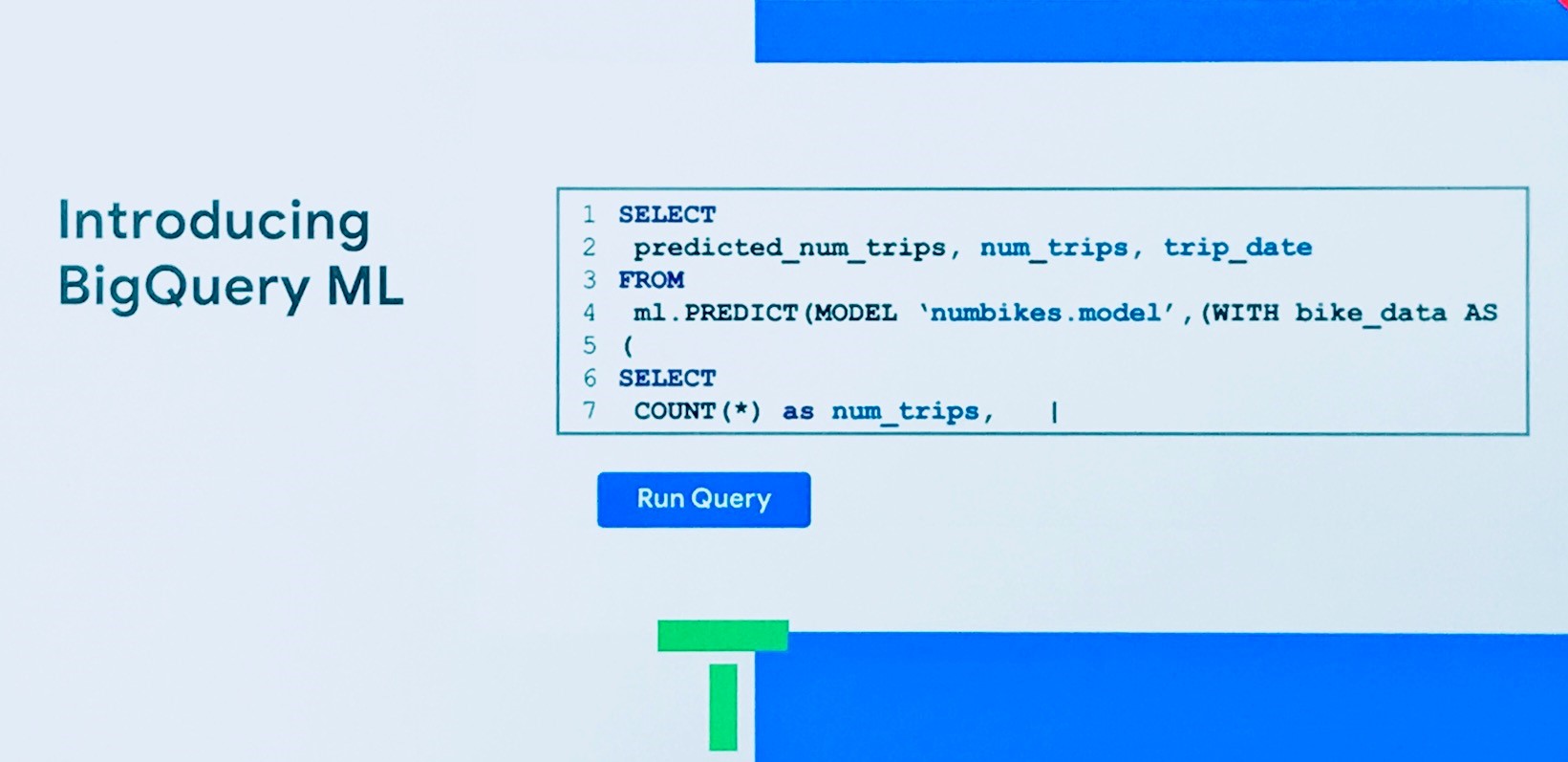

BigQuery ML Democratizes Machine Learning

The second big Google Next announcement in the theme of democratization was BigQuery ML, a beta release designed to support machine learning through simple, broadly understandable SQL statements. As the name suggests, this new ML capability has been added to BigQuery, Google’s highly popular data warehousing service. It’s popular largely because it’s serverless, meaning it elastically scales up to petabytes and back down on demand, without requiring database administration. It also supports SQL 2011 standard expressions, query federation, high availability, streaming analytics, encryption and other good stuff, but ease of use is BigQuery’s calling card -- and a differentiator versus more administratively challenging rivals AWS Redshift and Azure SQL Data Warehouse.

BigQuery ML extends SQL functionality to support machine learning through simple CREATE MODEL and ML.PREDICT SQL statements. At launch, BigQuery ML supports linear regression and binary logistic regression, but Google plans to add many more algorithms and supporting expressions. BigQuery ML applies what’s known as an in-database technique: models are trained and run where the data already lives. The alternative is the conventional approach of exporting data to a separate machine learning and analytics environment, which is more cumbersome, time consuming and expensive.
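To give a feel for how compact these statements are, here is a minimal Python sketch that assembles the two kinds of SQL text. The statement shapes follow BigQuery ML's documented `CREATE MODEL ... OPTIONS(...) AS SELECT` and `ML.PREDICT(MODEL ..., (query))` forms as I understand them; the helper functions and the dataset, table and column names are hypothetical, and nothing is submitted to BigQuery.

```python
def create_model_sql(model, label_col, source_table, model_type="linear_reg"):
    """Return a CREATE MODEL statement that trains on every column of a table.

    model_type is assumed to be 'linear_reg' or 'logistic_reg', matching the
    two algorithm families available at launch.
    """
    return (
        f"CREATE MODEL `{model}`\n"
        f"OPTIONS(model_type='{model_type}', "
        f"input_label_cols=['{label_col}']) AS\n"
        f"SELECT * FROM `{source_table}`"
    )


def predict_sql(model, source_table):
    """Return a query that scores every row of a table with a trained model."""
    return (
        f"SELECT * FROM ML.PREDICT(MODEL `{model}`,\n"
        f"  (SELECT * FROM `{source_table}`))"
    )


# Hypothetical names, for illustration only.
train_stmt = create_model_sql(
    "sales.churn_model", "churned", "sales.customers_2017", "logistic_reg"
)
score_stmt = predict_sql("sales.churn_model", "sales.customers_2018")
print(train_stmt)
print(score_stmt)
```

Both statements run inside the warehouse, so the training and scoring data never leave BigQuery.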

MyPOV on BigQuery ML. Techniques for in-database execution of advanced analytics including machine learning have been around for nearly a decade, implemented in IBM Db2, Microsoft SQL Server, Oracle Database, and Teradata, among others. All of these databases are now available as cloud services, but where Google’s top hyperscale cloud competitors are concerned, AWS Redshift doesn’t have anything like BigQuery ML. Microsoft SQL Server supports in-database ML, but this functionality has yet to be extended to its Azure SQL and Azure SQL Data Warehouse cloud service counterparts.

So BigQuery ML is not a huge breakthrough, but Google is ahead of its chief cloud rivals in introducing it. I’m sure Microsoft will now bring support for SQL Server Machine Learning Services to its Azure SQL service ASAP. I also won’t be surprised if AWS makes a similar announcement by re:Invent 2018, in November, as in-database techniques are no longer rocket science. Once rivals are in the game, I’m sure we’ll see one-upmanship in terms of the depth and breadth of ML capabilities. As I’ve seen with earlier in-database initiatives, regression and logistic regression are just the start of what companies will want to do with the masses of data in their data warehouses.

Going Vertical With AI Solutions

Google Cloud had a lot to say about its partner ecosystem at Google Next 18, and it even says it now has a commitment to include at least one partner in 100 percent of its new deals. The company also pivoted at Google Next by deemphasizing products and focusing instead on solutions. That’s another sign of maturation to go along with Google’s growth.

On the theme of playing to its strengths, Google made two other important announcements last week on early examples in an expected wave of AI solutions built with partners. The first announcement was Contact Center AI, which is designed to bring Google’s virtual agent capabilities -- including speech-to-text, text-to-speech, natural language processing and Dialogflow automated workflow -- into partner call center environments. Contact Center AI is now in alpha release, so customers can sign up, but they are being screened for initial deployments. The list of partners is extensive, including Cisco, Genesys, Mitel, Twilio, Vonage and leading systems integrators.

The second AI solution announcement was a planned set of services with partner Iron Mountain. Set for release in September, these services will make Google’s TensorFlow image and optical character recognition capabilities available to Iron Mountain’s content analytics, archiving and storage customers. The services will help customers know what physical and digital documents they have and, according to Iron Mountain, they will help them create new services based on AI-based understanding of and access to this content.

MyPOV on Google Solutions: Call center and document-oriented services are about as broad as you can get when it comes to solutions. Any company with a sizeable number of customers has a call center and Iron Mountain has literally hundreds of thousands of customers. Google also has a partnership with SAP, which is using TensorFlow for ML/DL solutions of its own. But Google has hardly scratched the surface where solutions are concerned.

When enterprise software companies introduce industry vertical solutions, they’re typically drawing on years of experience in multiple verticals. It’s not unusual to see these companies roll out with half a dozen examples in an initial release, and they’ll have at least a few more on the roadmap. That Google announced just two solutions and had no roadmap for additional releases tells you it’s very early days for this company’s solutions and vertical industry offerings.

My Overall Take on Google Next 18

Studies suggest that we’re moving into a multi-cloud world, and, indeed, I’ve talked to plenty of companies that use more than one public cloud provider. The most common pattern I see is companies building and running applications on AWS. In fewer cases Azure is their primary cloud, but it’s very often their choice for email services and desktop applications via Microsoft Office 365. When Google Cloud is in the mix, nine times out of ten I hear it was chosen for its data platforms and ML/DL capabilities. Of course my sampling is biased precisely because these are my research domains. Nonetheless, this is the key reason why I think it’s so important for Google to play up its data-to-decisions strengths.

Beyond the AutoML and BigQuery ML announcements, Google offered a number of other AI- and ML-related announcements. Kubeflow, for example, promises to support complete machine learning stacks on Kubernetes. And low-power Edge TPU (Tensor Processing Unit) chips promise to bring Google’s DL wizardry to mobile and remote sensors and devices. Thus I’d say Google Cloud did a good job of doubling down on these strengths, but much work needs to be done.

While Google has focused on democratizing data science with AutoML, AWS seems to have more to say about end-to-end model management with SageMaker. Microsoft, meanwhile, is addressing model management as well as data and model lineage and governance with Azure Machine Learning. As the number of models and versions mounts, model management and data lineage and governance become increasingly important. Outside of the basic topic of security, I didn’t hear much about these topics at Google Next. As for AI solutions, the partnerships with Iron Mountain, SAP and contact center vendors are a start, but the company is clearly at the start of the runway where AI-based industry solutions are concerned.
