Monday night, Nov. 27, at Re:Invent 2023, AWS’ Peter DeSantis, SVP of Utility Computing, announced two important database features: Amazon Aurora Limitless Database and Redshift Serverless AI Optimizations. Here's my analysis.

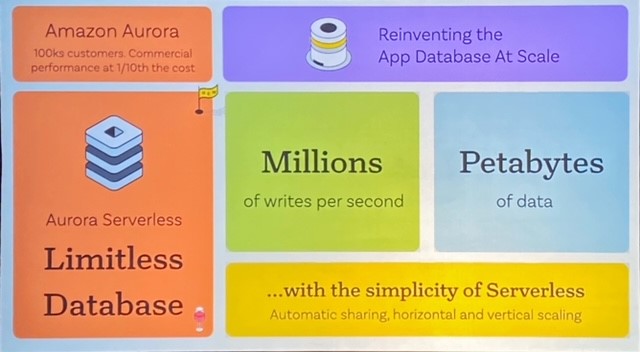

Amazon Aurora Limitless Database: Announced in private preview, This is an automated sharding feature for Aurora PostgreSQL that will enable customers to horizontally scale database write capacity via sharding. Aurora previously supported automated horizontal scaling of read capacity, but write capacity could only be automatically scaled vertically, by implementing larger and more powerful compute instances via the Aurora Serverless V2 feature. When customers reached the limit of vertical scaling, meaning they’ve already employed that most powerful compute instances, they would have to resort to sharding data across multiple database instances. This has been a common practice for large database deployments, but manual sharding at the application layer introduces complexity and administrative burdens. Aurora Limitless Database does away with these burdens by automating the sharding of data across database instances behind the scenes while ensuring transactional consistency.

Doug’s take: Aurora Limitless Database will step up competition with the world’s number-one database and Aurora’s biggest competitive target, Oracle Database. AWS is actually playing catch up with this feature, as Oracle introduced automated sharding back in 2017. Nonetheless, given that Aurora is so cost competitive, touted as one tenth the cost of its rivals, Aurora Limitless Database is an important step forward in potentially matching rivals such as Oracle on automated scalability.

Redshift Serverless AI Optimizations: In another move to match competitors, AWS introduced Amazon Redshift Serverless AI Optimizations. This feature brings ML-based scaling and optimization to AWS’s flagship analytical database for data warehousing. AWS introduced Redshift Serverless in 2021 in order to automate database scaling, but the capability was reactive. Given the time it takes to add new instances up in running, there were sometimes penalties in performance. The AI Optimizations feature introduces a new, machine learning-powered forecasting model that does a better job of forecasting the capacity requirements of existing as well as new and unfamiliar queries. A simple slider controls is said to enable administrators to set the balance between maximizing performance and minimizing cost.

Doug’s take: ML-based forecasting and optimization is old hat in the world of data warehousing, implemented by the likes of Oracle and Snowflake. Here, too, AWS is playing catchup, but the appeal of Redshift is as a cost-competitive data warehousing option within the AWS ecosystem. Matching rivals by adding sophisticated, ML-based scaling and optimization capabilities with cost guardrails will make Redshift more efficient and performant as well as more cost competitive.

Related resources:

Google Sets BigQuery Apart With GenAI, Open Choices, and Cross-Cloud Querying

Salesforce Data Cloud Emerges as an Obvious Choice for CRM Customers

How Data Catalogs Will Benefit From and Accelerate Generative AI