For years, the promise of the public cloud has been the primary end-game for IT infrastructure, offering enterprises the most flexible and scalable platform for their SaaS applications. These applications, characterized by their inherently dynamic nature, typically experience significant fluctuations in usage, a key aspect that public cloud was designed explicitly to address. However, times are changing, and AI is now poised to upend cloud economics by altering the very nature of workloads, just as cloud spend becomes the top issue for CIOs when it comes to IT infrastructure.

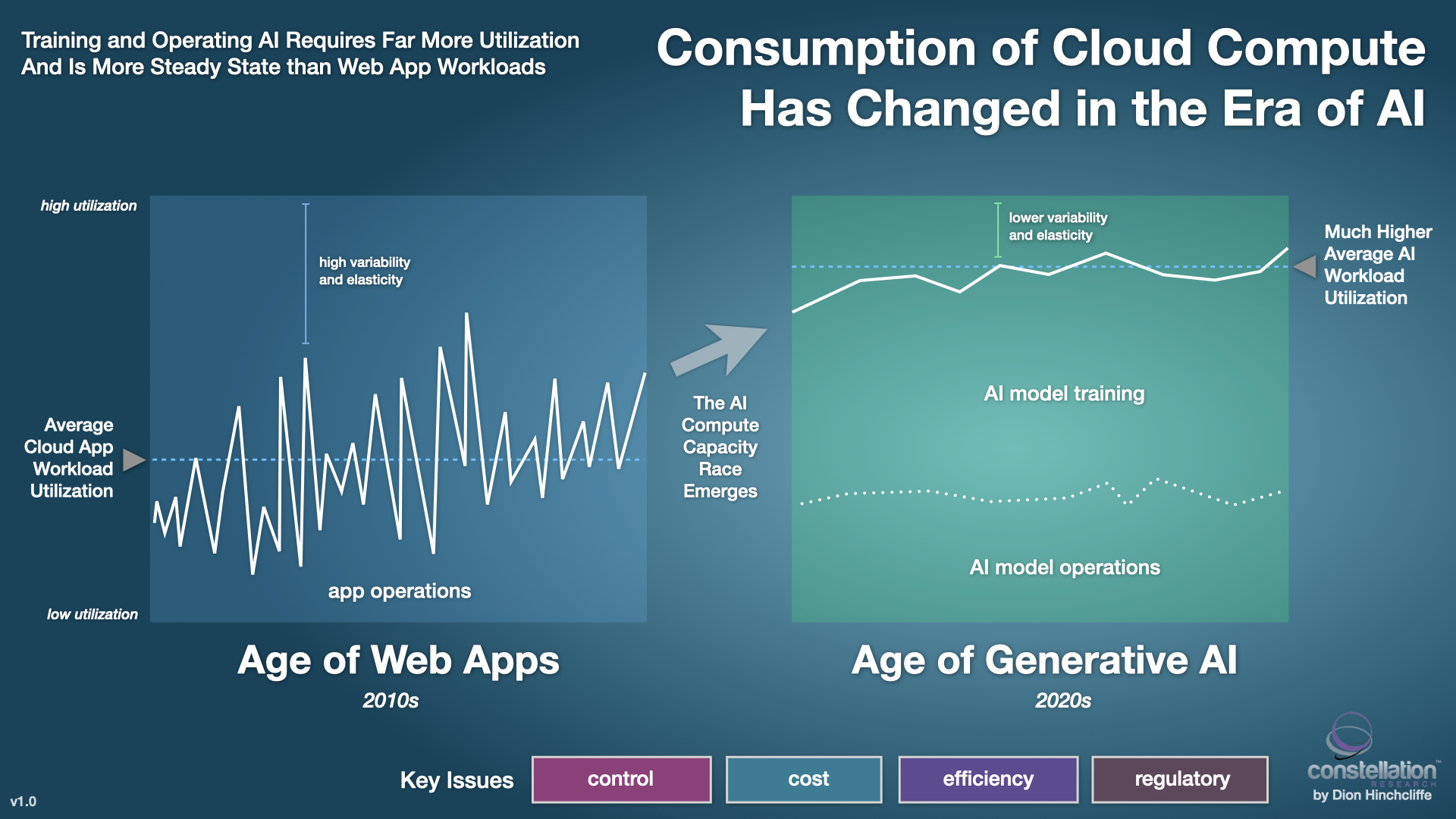

Traditional Web workloads have long exhibited spiky usage patterns, with traffic surging during peak periods (e.g., Black Friday sales) and plummeting during off-peak times and unexpected events. This unpredictable demand curve has aligned perfectly with the on-demand nature of cloud resources: businesses can easily scale their cloud instances up or down to meet the fluctuating needs of their Web applications, paying only for the resources they utilize. However, the overall complexion of cloud workloads has recently shifted due to generative AI, throwing capacity planning into flux. A widely followed 2023 study by Flexera found that optimizing cloud costs has become the top priority of cloud teams (64% of respondents), as they struggle with both workload forecasting and the growing impact of AI on their cloud compute mix.

So there's little question now: the arrival of generative AI has officially thrown a wrench into this well-established dynamic. Unlike Web applications, which exhibit intermittent bursts of high compute demand, AI models are insatiable consumers of continuous compute power, requiring consistent and substantial resources throughout both their training and operational phases. Training a large language model, for instance, can devour vast amounts of compute power for weeks or even months on end, relentlessly pushing the boundaries of available processing capacity. This long-term hunger for compute resources has ignited a fierce competition among cloud providers, each vying to be the leader in AI in the cloud. Evidence for this abounds: demand for GPUs, the chips that provide most of the capacity for training and running AI models, has recently propelled the stocks of AI chip providers like NVIDIA into historic territory, and the trend is just beginning.

The top three hyperscalers, Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), are each aggressively optimizing their public cloud offerings for AI workloads, investing heavily in both AI cloud capacity and their own custom AI chips, including Google's well-established Tensor Processing Unit (TPU), AWS's new Trainium2 chip, and Microsoft's upcoming Maia 100 AI processor, each of which competes with NVIDIA's data center-friendly H200. These specialized chips offer significantly improved performance and efficiency for AI workloads compared to traditional CPUs. Notably, of these, only the H200 will be widely available for use within private enterprise data centers, making AI chips an emerging risk factor for a new type of cloud lock-in, and adding to the concerns of CIOs seeking to come to grips with this new cloud landscape.

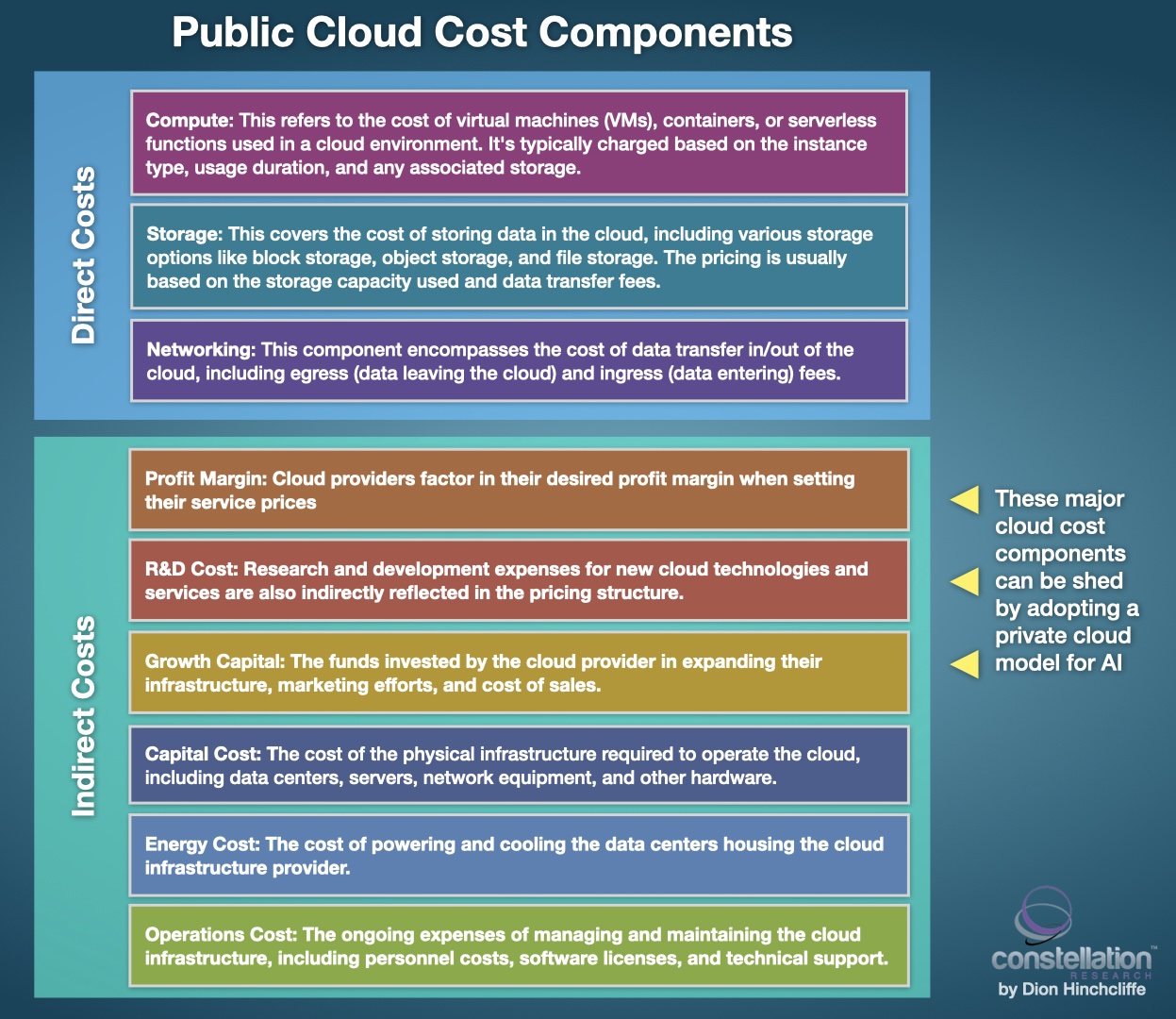

While this relentless pursuit of ever-greater AI performance promises significant advancements in various fields, it comes at a substantial additional cost. Hyperscalers must factor in the research and development expenses associated with creating custom AI chips and cloud-based AI tools, on top of the razor-thin profit margins typical of the cloud industry. Additionally, the staggering infrastructure required to support these highly demanding AI workloads translates into significant long-term capital expenditure for cloud providers. Ultimately, these costs are passed on to the cloud customer in the form of service fees, raising a critical question: as AI workloads continue to grow in complexity and resource demands, is the public cloud the most cost-effective solution for most AI workloads in the long run? This is the fundamental question today as AI becomes a growing percentage of overall compute utilization.

AI Greatly Transforms Cloud Economics

The shift towards AI workloads throws a stark light on the limitations of traditional cloud pricing models designed for bursty Web applications. GPUs, the workhorses of AI training and inference, are a different beast altogether from CPUs. These specialized processors excel at parallel processing, making them ideal for the computationally intensive tasks involved in AI. However, unlike CPU capacity, which can be easily ramped up or down and idled during downtime, GPUs are most cost-efficient when constantly utilized. Cloud providers typically offer GPU instances with per-second billing, and they argue that the combination of their special chips and "paying only for what you use" delivers the highest net cost efficiency for AI workloads. In practice, businesses will indeed pay just for the AI workloads they run, but the price still carries the increasingly large overhead cost components that were easier to hide when the cloud was a smaller industry. That overhead now includes the custom AI silicon that each hyperscaler designs and builds out itself, although of course some also rely on third parties like NVIDIA.
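The utilization argument above can be made concrete with a little arithmetic. The following is a minimal sketch, not a pricing model: the hourly rate, purchase price, amortization period, and operating cost are all hypothetical placeholders chosen for illustration, and the function names are invented here. It estimates the utilization level above which an owned GPU becomes cheaper per month than an on-demand cloud GPU.

```python
"""Illustrative break-even between on-demand cloud GPUs and owned GPUs.

All figures used with these functions are hypothetical placeholders,
not vendor pricing.
"""

HOURS_PER_MONTH = 730.0  # approximate average number of hours in a month


def cloud_monthly_cost(hourly_rate: float, utilization: float) -> float:
    """On-demand cost: billed only for the fraction of the month in use."""
    return hourly_rate * HOURS_PER_MONTH * utilization


def owned_monthly_cost(purchase_price: float, amortization_months: int,
                       monthly_opex: float) -> float:
    """Owned cost: fixed amortization plus operating cost, regardless of use."""
    return purchase_price / amortization_months + monthly_opex


def break_even_utilization(hourly_rate: float, purchase_price: float,
                           amortization_months: int,
                           monthly_opex: float) -> float:
    """Utilization (as a fraction) above which owning is cheaper than renting."""
    fixed = owned_monthly_cost(purchase_price, amortization_months, monthly_opex)
    return fixed / (hourly_rate * HOURS_PER_MONTH)


if __name__ == "__main__":
    # Hypothetical inputs: $4.00/hour cloud GPU, $30,000 owned GPU server
    # amortized over 36 months, $400/month for power, space, and staff.
    be = break_even_utilization(4.0, 30_000.0, 36, 400.0)
    print(f"Break-even utilization: {be:.1%}")
    for u in (0.2, 0.5, 0.9):
        print(f"utilization {u:.0%}: cloud ${cloud_monthly_cost(4.0, u):,.0f} "
              f"vs owned ${owned_monthly_cost(30_000.0, 36, 400.0):,.0f}")
```

Under these assumed inputs, a bursty workload sits well below the break-even point and favors on-demand pricing, while an always-on training or inference workload sits well above it. Real comparisons would also need to account for discounts, reserved capacity, hardware refresh cycles, and staffing.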

Furthermore, the ever-evolving nature of AI training necessitates a continuous cycle of experimentation and improvement. Businesses are no longer dealing with static applications; whether they realize it or not, they're now engaged in a perpetual competitive race to develop and refine better AI models. This ongoing process requires readily available compute resources for training and fine-tuning, pushing IT departments to grapple with the financial implications of constantly running AI workloads in the public cloud. The high bar for entry in terms of infrastructure investment and ongoing operational costs associated with large-scale AI training is creating fertile ground for alternative solutions.

The take-away: Any certainty that public cloud was the best place for all AI workloads has greatly receded. CIOs are now considering all their options, which still include the hyperscalers' AI services, but also specialty cloud providers, AI training service bureaus, and private GPU clouds.

Perhaps the most disruptive trend in this evolving AI landscape is the rise of a new class of cloud providers specifically designed to cater to the unique needs of AI workloads. Smaller but more nimble players like Vultr and Paperspace are carving out a niche by offering cloud instances optimized for GPU workloads. These providers often leverage economies of scale by utilizing custom hardware and innovative pricing models that align billing more closely with actual compute usage. Additionally, larger enterprises are increasingly exploring private cloud deployments as a means to maximize control over their AI infrastructure and optimize FinOps (financial management of the cloud), including the burgeoning practice of FinOps for AI. By bringing AI workloads in-house, businesses aim to squeeze every penny out of cost overhead and gain greater flexibility and strategic autonomy in managing their ever-growing compute needs for AI training and operations. This shift towards private and specialized cloud solutions suggests a potential bifurcation within the cloud computing market, with established hyperscalers potentially facing pressure from more targeted, cost-efficient alternatives.

Navigating the AI Cloud Conundrum: A Roadmap for CIOs

The future of cloud computing is undeniably intertwined with the relentless rise of AI. However, for CIOs, this presents a strategic conundrum. Public cloud providers offer unparalleled scalability and access to cutting-edge AI tools, but their cost structures are often ill-suited for always-on, high-performance AI workloads. The path forward necessitates a careful balancing act between agility, cost-efficiency, and control.

Here's a roadmap for CIOs to prepare for this AI-driven cloud future:

- Invest in Advanced AI Expertise: Building a competent internal team with expertise in AI development, data science, and especially full-stack cloud infrastructure management is now vital. This allows for a deeper understanding of workload requirements, better-informed infrastructure decisions, and internal build-out if needed. Upskilling for AI is now preferred to hiring in many cases.

- Hybrid Cloud Strategies: A hybrid cloud approach, leveraging both public and private cloud resources, is now the target environment, as we saw last year in my research on the rebalancing between public and private cloud. Bursty workloads can reside in the public cloud, while mission-critical, always-on AI workloads can be migrated to a private cloud environment, optimizing cost and performance.

- Containerization: Containerization technology such as Docker, with its robust ML/AI support, allows for efficient, rapid packaging and re-deployment of AI models across various cloud environments. This fosters portability and flexibility in choosing the most cost-effective infrastructure for specific workloads. Kubernetes remains popular with larger enterprises, while Docker is favored by mid-market firms in managing AI deployments.

- Cost Optimization Tools: Utilize cloud cost management platforms optimized for AI, like Cast AI, that offer granular insights into resource utilization and spending patterns and can cut costs by up to 50% in some cases. This enables proactive cost management strategies for AI workloads in either the public or private cloud, and allows evaluation of whether private cloud is a better environment for a given AI workload depending on the optimizations needed.

- Security & Regulatory Considerations: AI workloads in the public cloud raise significant concerns about data security, regulatory and compliance requirements, as well as potential biases. CIOs must implement robust security protocols, conduct thorough risk assessments, and ensure alignment with all relevant regulations. This is another decision point that bears heavily on the choice between public and private clouds, as private AI deployment can provide considerably more proactive, granular control over data residency and privacy issues with AI.
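As a rough illustration of how the hybrid and regulatory points in this roadmap might combine into a first-pass placement rule, here is a minimal sketch. The `Workload` fields, the `suggest_placement` function, and the 0.5 break-even threshold are all hypothetical assumptions made for this example, not an established method; a real decision would weigh many more factors, such as latency, skills, and contract terms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a workload for placement purposes."""
    name: str
    avg_utilization: float  # fraction of time the hardware is busy, 0..1
    data_residency_required: bool = False  # strict data residency or privacy needs


def suggest_placement(workload: Workload, break_even: float = 0.5) -> str:
    """Return 'private' or 'public' as a first-pass suggestion.

    The break_even default is an assumed threshold, not a measured figure.
    """
    # Regulatory constraints take priority over cost in this sketch.
    if workload.data_residency_required:
        return "private"
    # Always-on workloads above the break-even utilization lean private;
    # bursty workloads below it lean public.
    return "private" if workload.avg_utilization >= break_even else "public"


if __name__ == "__main__":
    examples = [
        Workload("seasonal web storefront", 0.15),
        Workload("LLM fine-tuning pipeline", 0.90),
        Workload("regulated-data inference", 0.10, data_residency_required=True),
    ]
    for w in examples:
        print(f"{w.name}: {suggest_placement(w)}")
```

The ordering of the checks is a design choice: compliance constraints are treated as hard requirements, while utilization is treated as a cost signal that can be revisited as pricing and usage change.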

Competitive Ramifications

The ability to navigate this new landscape will have significant competitive ramifications. Companies that can develop and deploy AI models most cost-effectively and securely will, put simply, gain a significant edge in their industry. Conversely, those struggling to optimize AI workloads for the cloud will fall behind, as their investments just won't get them as far as their cohorts.

The Bottom Line

The future of AI in the cloud demands forward-thinking strategies. As AI workloads reshape the cloud landscape, CIOs are presented with a unique challenge and opportunity. The future demands not just technical expertise in generative AI and large language models, but also a spirit of creative adaptability, a rethinking of cloud orthodoxy, and eagle-eyed vision. Crafting a clear and frequently updated AI roadmap for the enterprise will be crucial to mobilize IT and the business, outlining key decision points and strategic considerations as lessons are learned.

This journey requires continuous learning and the ability to evolve alongside the technology. IT leaders must embrace a growth mindset, explore diverse deployment models, and remain open to new possibilities, all of which will be vital for success. Those who are prepared to adapt and learn will not only survive but prosper in this transformative era. The cloud, once a playground for bursty applications, is now evolving into a dynamic ecosystem where 100% load AI workloads reign supreme. For the CIOs who embrace this change with an expansive vision and an open mind as to where AI workloads will best operate over time, the future holds immense promise.

My Related Research

A Roadmap to Generative AI at Work

Spatial Computing and AI: Competing Inflection Points

How to Embark on the Transformation of Work with Artificial Intelligence

AWS re:Invent 2023: Perspectives for the CIO

Dreamforce 2023: Implications for IT and AI Adopters

Video: Moving Beyond Multicloud to Crosscloud

My new IT Strategy Platforms ShortList

My current Digital Transformation Target Platforms ShortList

Private Cloud a Compelling Option for CIOs: Insights from New Research