Teradata adds Presto SQL-on-Hadoop option plus new streaming and analytics offerings. They’re all part of the vendor’s Unified Data Architecture strategy, but Teradata’s biggest challenge remains cost-per-terabyte thinking.

CEOs are no longer asking for big data strategies, according to Randy Lea, vice president of Teradata’s Big Data practice. Now they’re asking for analytics strategies.

If that’s really the case, few companies are as well qualified as Teradata to offer advice. That much was clear at last week’s Teradata Influencer’s Summit in San Diego, where the company detailed a tremendous breadth of software, cloud services, applications, packaged solutions and consulting services.

It’s a good bet, though, that plenty of C-level leaders are still beguiled and befuddled by big data and all the competing claims. Teradata itself is contributing to the confusion this week by throwing its considerable weight behind Presto, the open-source SQL-on-Hadoop option developed by Facebook.

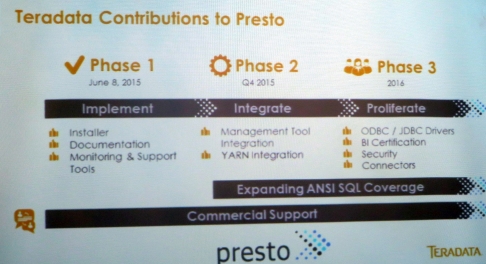

Teradata introduced a supported distribution of Presto SQL-on-Hadoop software on Monday, and it’s contributing planned development work to the open source project.

This move will confuse some because Teradata has partnered with the three leading Hadoop vendors: Cloudera, Hortonworks and MapR. Yet Presto competes with a query tool backed by each of them: Cloudera Impala; Apache Hive, Hadoop’s incumbent query tool, which is supported by Hortonworks; and Apache Drill, the open-source project supported by MapR.

All of the above support SQL querying of data in a Hadoop cluster. But Teradata insists its new Presto distribution, available as a free download with a subscription-support option, promises better performance than rival tools, thanks to its in-memory architecture. Teradata points to Presto adoption by Airbnb, Facebook, Teradata-customer Netflix, Dropbox, and others as proof of Presto’s scalability and performance. Facebook, for one, also invented Hive, but it came up with Presto to get around Hive performance constraints tied to MapReduce processing.

MyPOV on the Presto Move: Adoption by Internet giants notwithstanding, Teradata has work to do to help Presto meet enterprise expectations. For starters, Presto currently lacks YARN support, but it also needs integrations with Hadoop-system-management tools like Ambari, security-system connections, a better ODBC driver and, most importantly, broader SQL coverage and BI system integrations. Teradata says it’s working on all of the above and will contribute the work to the open source project.

Teradata’s contributions will obviously improve Presto’s fortunes, but it’s clear that the project’s independence (its ability to run on any Hadoop distribution) was as much an attraction to Teradata as Presto’s performance. Hive and Apache Spark (with Spark SQL) also run on any Hadoop distribution (and they already have YARN support). But those projects are under the control of the Hadoop and Spark communities, respectively.

Teradata clearly chose Presto in part because it can have greater influence in that community, even if the project itself is less mature than Hive and, in some respects, Spark. Indeed, Teradata execs acknowledged that Presto will have to work side-by-side with Hive for the foreseeable future because that tool is so widely used.

Teradata Evolves Unified Data Architecture

Teradata was once focused exclusively on great big enterprise data warehouse deployments, but my, how times have changed. The company responded to the data warehouse appliance craze of the last decade by introducing its own broad range of appliances (from memory-intensive speed demons to archival boxes), all sharing the same database.

The company responded to the big data craze first by acquiring Aster Data. Now billed as the vendor’s Discovery Platform, Teradata Aster handles multi-structured data and multi-mode analysis including MapReduce, machine learning, graph analysis and R, all invoked through SQL and SQL-like queries. A second response to big data was embracing Hadoop. Teradata is now partnered with the top-three Hadoop distributors, and it resells and deploys Hortonworks’ distribution on a Teradata appliance or on third-party hardware. A third response to the big data craze was buying Revelytix for big data metadata management, Hadapt for SQL-on-Hadoop expertise and ThinkBig Analytics for big data application and deployment consulting.

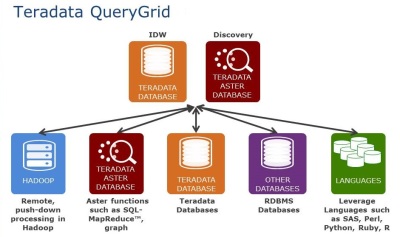

Teradata, Aster, and Hadoop are now the three legs of the company’s Unified Data Architecture (UDA), and Teradata Query Grid is the connective data fabric that lets customers bring all that information together into analyses on Teradata or Aster. (Query Grid previously used Hive to tap into Hadoop, but now Presto adds a low-latency querying option.)

The latest push in the UDA strategy is into the cloud. Last year the vendor started offering multi-tenant Teradata and Aster instances hosted in two U.S.-based Teradata data centers. (Plans call for additional data centers in Europe and Asia, but there are no firm dates for that.) Cloud use cases include backup/disaster recovery, test-and-development, Aster (Discovery Platform) deployments, consolidation of shadow-IT data marts, and support for system-integrator and Teradata-partner cloud deployments.

Seeing Amazon’s success with Redshift, Teradata also believes it can go down-market, serving customers who want agile cloud deployment, but who also need consulting, industry domain knowledge, and deployment and operational hand holding that Amazon Web Services doesn’t offer. Teradata cited “complete” pricing for a 24-terabyte Teradata cloud deployment, including DBA as-a-service, at $250,000 per year with a three-year commitment.
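
To put that quote in cost-per-terabyte terms, the arithmetic can be sketched as follows. This is only a back-of-the-envelope calculation from the figures cited above ($250,000 per year, 24 terabytes, three-year commitment). The function and variable names are illustrative, and the sketch assumes the annual price stays flat across the commitment, which Teradata did not spell out.

```python
def cost_per_terabyte(annual_price_usd: float, capacity_tb: float) -> float:
    """Return the annual price divided by the deployed capacity."""
    if capacity_tb <= 0:
        raise ValueError("capacity_tb must be positive")
    return annual_price_usd / capacity_tb


def total_commitment(annual_price_usd: float, years: int) -> float:
    """Return the total spend, assuming a flat annual price (an assumption)."""
    return annual_price_usd * years


# Figures as cited by Teradata for its "complete" cloud pricing.
ANNUAL_PRICE_USD = 250_000
CAPACITY_TB = 24
COMMITMENT_YEARS = 3

per_tb_per_year = cost_per_terabyte(ANNUAL_PRICE_USD, CAPACITY_TB)
total = total_commitment(ANNUAL_PRICE_USD, COMMITMENT_YEARS)

print(f"Cost per terabyte per year: ${per_tb_per_year:,.0f}")
print(f"Total over {COMMITMENT_YEARS} years: ${total:,.0f}")
```

That works out to roughly $10,417 per terabyte per year, or $750,000 over the full commitment. Because the quote includes DBA as-a-service, it covers more than storage alone.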

Teradata says it has tuned Query Grid and other software for cloud delivery, adding REST APIs and breaking out micro-services. It’s also testing Teradata Listener, a cloud-ready streaming-data capture option (late to the streaming party, but welcome nonetheless). Based on Kafka and Spark processing and set for Q3 beta, Listener is aimed at real-time analytics and Internet-of-things applications.

The bigger picture with UDA, Query Grid, and the new cloud options is providing yet more flexible options for analytics. Teradata has 17 industry solutions covering everything from warranty and product-quality analyses for the automotive industry to customer-segmentation and network-planning apps for utility companies. The company has also built an Aster App Center offering “bundles” (think app blueprints) for site-search optimization and customer satisfaction analyses. The former is for improving product-search success and sales-conversion rates. The latter helps subscription-service providers in the telco, cable, and satellite industries spot likely defectors and avoid churn.

MyPOV On Teradata’s Evolution

One sticky metric that has pained Teradata in recent years is cost per terabyte. Hadoop vendors, in particular, trumpet this measure as a differentiator. Indeed, it’s a big reason why Hadoop is quickly gaining adoption. Whether you call them data lakes or data hubs, Hadoop-based repositories look like they’re here to stay. Clickstreams, sensor data, log files, mobile data and social data at scale are some of the high-volume data types that beg for a low-cost-per-terabyte storage option.

But what about the analysis? There’s a race on to analyze the data within Hadoop without having to hire armies of data scientists and without writing and managing thousands of lines of arcane bespoke code. Lots of database providers (including Actian, HP, IBM, Microsoft, Oracle and Pivotal, as well as Teradata) have come up with ways to query Hadoop using SQL. Hadoop vendors have done that, too, with Impala, Hive and Drill.

Far fewer vendors from the database camp have gone beyond SQL. With Aster, Teradata’s advantage is invoking machine learning, graph analysis, R-based algorithms and MapReduce techniques all with SQL and SQL-like statements. Query Grid also supports push-down techniques, so you can do the heavy processing on Hadoop or Teradata when the big data lives there.

When Teradata acquired Aster in 2011, my first thought was, “Who wants to have to run three platforms?” (meaning Teradata and Hadoop, plus Aster). But the only option that matches Aster’s breadth (at present) is Apache Spark, and that, too, is another platform. Spark is an open-source platform with a fast-growing community, so it will only get stronger, but it does not invoke all these modes of analysis through SQL and SQL-like expressions.

Teradata is making as strong a case as ever for Aster, but as my colleague Holger Mueller pointed out in this blog, the vendor has at least acknowledged other options by maintaining ThinkBig as a separate business unit. Presentations by ThinkBig at last week’s event clearly showed Spark and other open source options in the thick of the company’s consulting plans.

In short, if you’re only thinking about data and how big it might get, you’re susceptible to cost-per-terabyte thinking. If you’re thinking ahead to the range of analyses you’re going to need to support, and if you want industry domain expertise and guidance on deployment and platform integration, measures such as price-per-analytic, price-per-user, and time-to-successful-deployment will matter most. That’s when Teradata belongs on the RFP list.
