Nvidia CEO Jensen Huang said the company aims to expand use cases for physical AI, including next-generation robots and autonomous vehicles, with the launch of world foundation models called Cosmos.

Cosmos is a family of world foundation models, or neural networks that can predict and generate physics-aware virtual environments. Speaking at his CES 2025 keynote, Huang said the Cosmos models will be open sourced. "The ChatGPT moment for robotics is coming. Like large language models, world foundation models are fundamental to advancing robot and AV development," said Huang. "We created Cosmos to democratize physical AI and put general robotics in reach of every developer."

Huang added that world foundation models (WFMs) will be as important as large language models, but that physical AI developers have been underserved. WFMs take inputs such as text, images, video and movement data to generate and simulate virtual worlds that accurately model environments and physical interactions. Nvidia said 1X, Agility Robotics and XPENG, as well as autonomous vehicle developers Uber, Waabi and Wayve, are among the companies using Cosmos.

The Cosmos launch is part of a wide range of models Nvidia outlined to bolster its ecosystem, including the NeMo framework and platforms such as Omniverse and AI Enterprise. Nvidia also launched Nvidia Llama Nemotron and Cosmos Nemotron models for developers creating agentic AI applications.

Huang said WFMs are costly to train, so Nvidia concluded that the best way to democratize physical AI was to invest upfront in a suite of open diffusion and autoregressive transformer models for physics-aware video generation and then release them openly so developers can refine them. Cosmos models have been trained on 9,000 trillion tokens drawn from 20 million hours of real-world data covering human interactions, environments, industrial settings, robotics and driving.

Nvidia said the Cosmos data pipeline can process 20 million hours of data in 40 days on Nvidia Hopper GPUs, or in 14 days on Blackwell GPUs.
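As a rough back-of-the-envelope check, the figures Nvidia cited (9,000 trillion tokens, 20 million hours of data, 40 days on Hopper versus 14 days on Blackwell) imply the averages below. This sketch assumes both timings refer to the same 20 million hours of data and ignores any other differences in hardware setup; the variable names are illustrative only.

```python
# Back-of-the-envelope arithmetic using only the figures quoted in this article.
# Assumption: both timings refer to processing the same 20 million hours of data.

TOKENS = 9_000e12          # 9,000 trillion training tokens
DATA_HOURS = 20_000_000    # 20 million hours of real-world data
HOPPER_DAYS = 40           # reported processing time on Hopper GPUs
BLACKWELL_DAYS = 14        # reported processing time on Blackwell GPUs


def hours_per_day(days: float) -> float:
    """Average hours of source data processed per day of wall-clock time."""
    return DATA_HOURS / days


tokens_per_hour = TOKENS / DATA_HOURS          # average tokens per hour of data
hopper_rate = hours_per_day(HOPPER_DAYS)
blackwell_rate = hours_per_day(BLACKWELL_DAYS)
speedup = HOPPER_DAYS / BLACKWELL_DAYS         # implied Blackwell-over-Hopper ratio

print(f"Tokens per hour of data: {tokens_per_hour:,.0f}")
print(f"Hopper:    {hopper_rate:,.0f} hours of data per day")
print(f"Blackwell: {blackwell_rate:,.0f} hours of data per day")
print(f"Blackwell speedup: {speedup:.1f}x")
```

The roughly 2.9x ratio follows directly from the 40-day and 14-day figures, and the 450 million tokens per hour is only an average implied by the two headline numbers, not a figure Nvidia reported.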

Huang showcased capabilities of the Cosmos models, including video search and understanding, 3D-to-real synthetic data generation, physical AI model development and evaluation, and the ability to predict potential next actions and simulate possible outcomes.

Cosmos WFMs are available on Hugging Face and Nvidia's NGC catalog. Cosmos models will soon be available as optimized Nvidia NIM microservices.

Key details about Cosmos include:

- The models come in three categories. Cosmos Nano is optimized for low-latency inference and edge deployments. Cosmos Super provides baseline models, and Cosmos Ultra is designed for maximum quality, making it best suited for distilling custom models.

- Cosmos is designed to be paired with Nvidia Omniverse 3D outputs.

- Developers can use Cosmos models for text-to-world and video-to-world generation with versions ranging from 4 billion to 14 billion parameters.

- Cosmos WFMs can enable synthetic data generation to augment training datasets, and can be used to simulate, test and debug physical AI models before they are deployed.

- Data curation and training of Cosmos used thousands of Nvidia GPUs via Nvidia DGX Cloud.

- Cosmos will be supported via Nvidia AI Enterprise.

- Nvidia said the Cosmos platform includes data processing and curation powered by Nvidia NeMo Curator.

- Cosmos will include Cosmos Guardrails and will have a built-in watermarking system.

Industrial AI applications

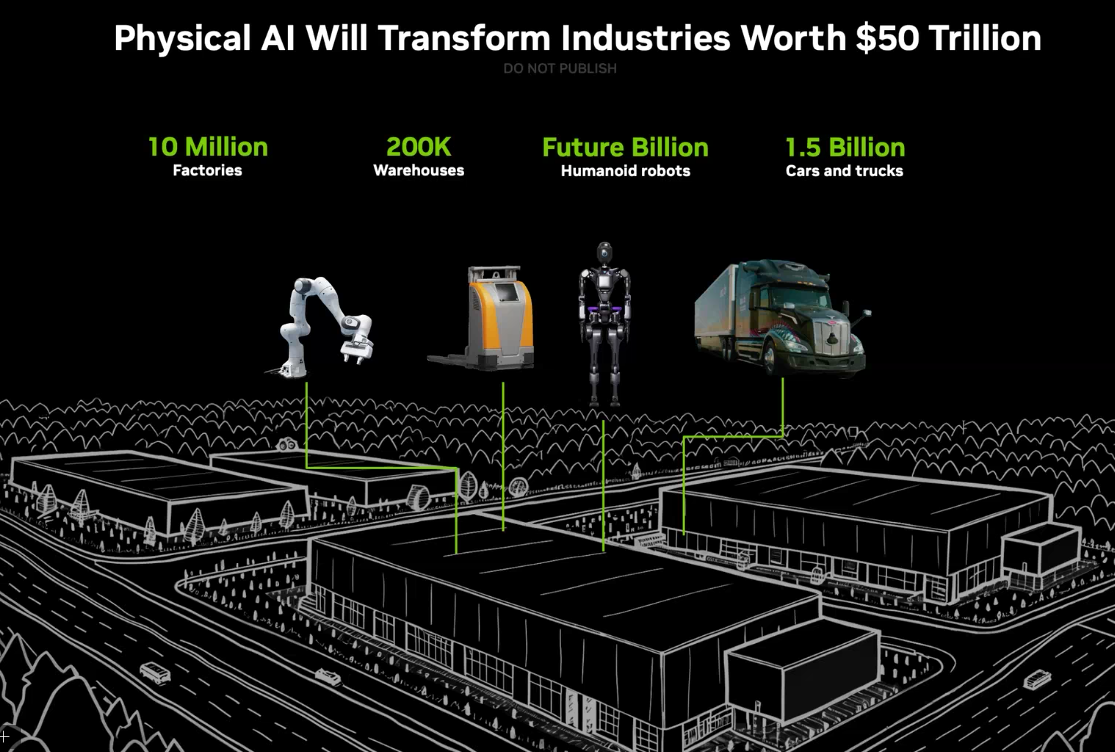

The launch of Cosmos combined with Nvidia Omniverse is designed to accelerate industrial AI applications. Nvidia positions these applications, notably factory and manufacturing optimization, robotic digital twins and autonomous systems, as the next era of AI.

To that end, Nvidia is working with partners such as Accenture, Altair, Ansys, Cadence, Foretellix, Microsoft and Neural Concept to integrate Omniverse into their software and services. Siemens also announced that its Teamcenter Digital Reality Viewer, powered by Nvidia Omniverse, is now available.

Huang said:

"Physical AI will revolutionize the $50 trillion manufacturing and logistics industries. Everything that moves — from cars and trucks to factories and warehouses — will be robotic and embodied by AI."

Nvidia's plan is to pair Cosmos with Omniverse and its DGX platform to generate synthetic data and then combine it with blueprints and agents.

Nvidia outlined a set of Omniverse Blueprints, including:

- Mega, for developing and testing robot fleets at scale in a factory or warehouse digital twin before real-world deployment.

- Autonomous Vehicle (AV) Simulation, which lets AV developers replay driving data, generate ground-truth data and perform closed-loop testing.

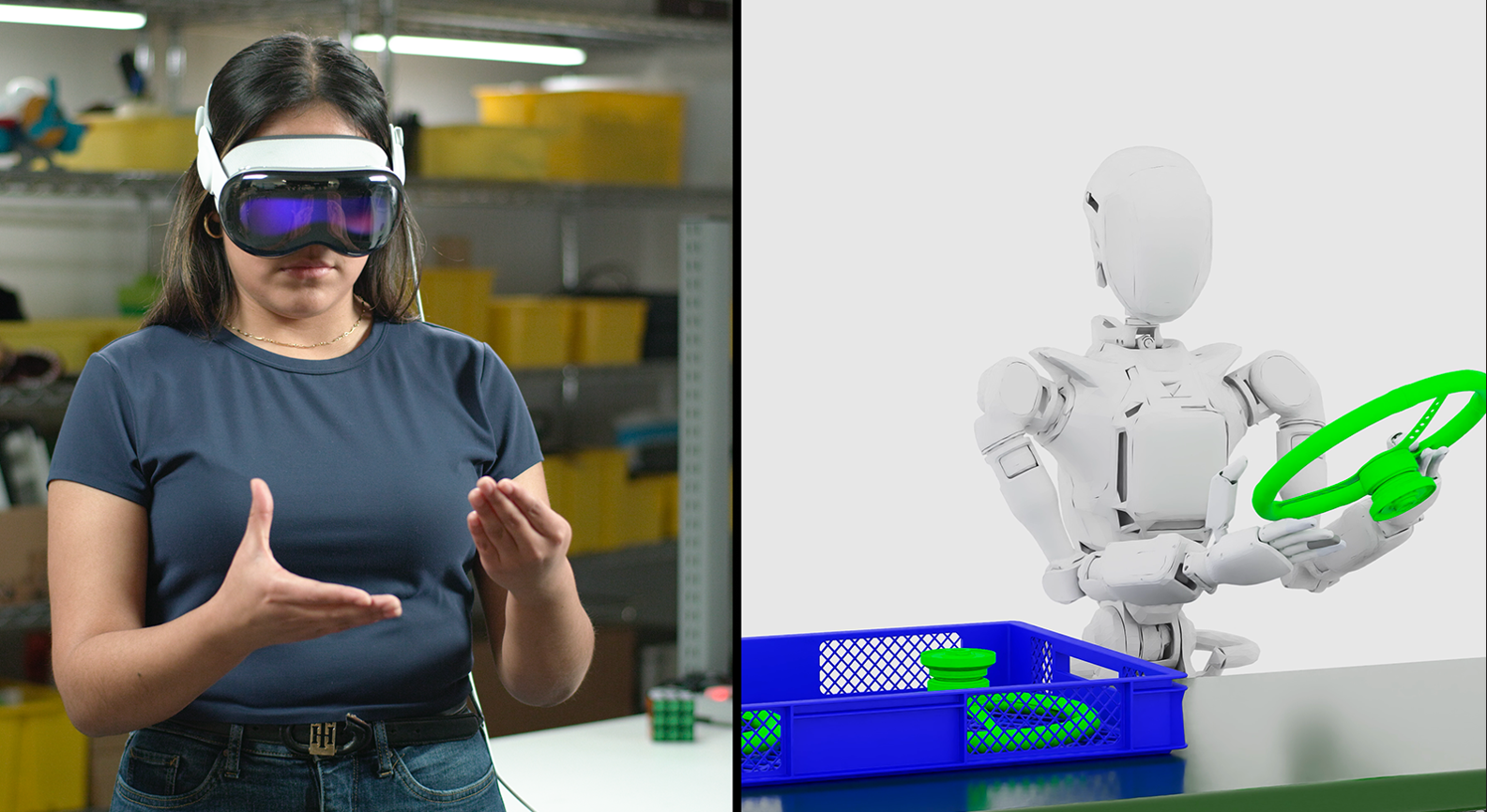

- Omniverse Spatial Streaming to Apple Vision Pro for enterprise applications.

- Real-Time Digital Twins for Computer Aided Engineering, a reference workflow.

Nvidia also launched the Isaac GR00T Blueprint to accelerate humanoid robotics development. Nvidia Isaac GR00T is designed for synthetic motion generation, producing data that can be used to train robots through imitation learning. The workflow captures human demonstration data and then multiplies it with synthetic data.