This post first appeared in the Constellation Insight newsletter, which features bespoke content weekly and is brought to you by Hitachi Vantara.

Generative AI is supposed to be a boon for vendors, enterprises and overall productivity and efficiency, but so far, the economic benefits have gone to just a few. Simply put, it is very early to track the trickledown effects of generative AI, but they are worth pondering.

There's little doubt that generative AI will have an economic impact, both good and bad. Some enterprises are seeing returns as they move from pilots to production. It's no secret that software development is the premier genAI use case. Vendor spoils from generative AI are a bit harder to track because pure plays are hard to find. Beyond Nvidia and Supermicro, there have been few generative AI winners among enterprise technology vendors.

Here are the moving parts to ponder regarding trickledown genAI economics.

- Nvidia and Supermicro are clear beneficiaries.

- Other vendors are anticipating demand pops that haven't shown up yet. These vendors often talk about pipelines and interest instead of revenue.

- Hyperscalers such as Microsoft Azure, Google Cloud and Amazon Web Services are seeing sequential revenue gains as the cloud optimization phase ends and genAI gooses workloads.

- Traditional enterprise hardware vendors (Cisco, Dell, HPE) are seeing demand for AI-optimized systems as enterprises ponder hybrid workload approaches to large language models (LLMs).

- Component vendors that feed into those AI-optimized systems will see benefits. Think AMD, Intel and Western Digital to name a few.

- SaaS vendors may benefit from generative AI, but CXOs are already pushing back on the copilot upsell. Copilots are inflating SaaS contracts, and CXOs will choose which workers get access to generative AI.

- Enterprises are in the early stages of genAI deployments, and many of them are focusing on the data management and architectures they need to innovate. As a result, data platforms including Snowflake and Databricks will benefit.

Here's a look at generative AI's trickledown economics so far.

Nvidia wins (always).

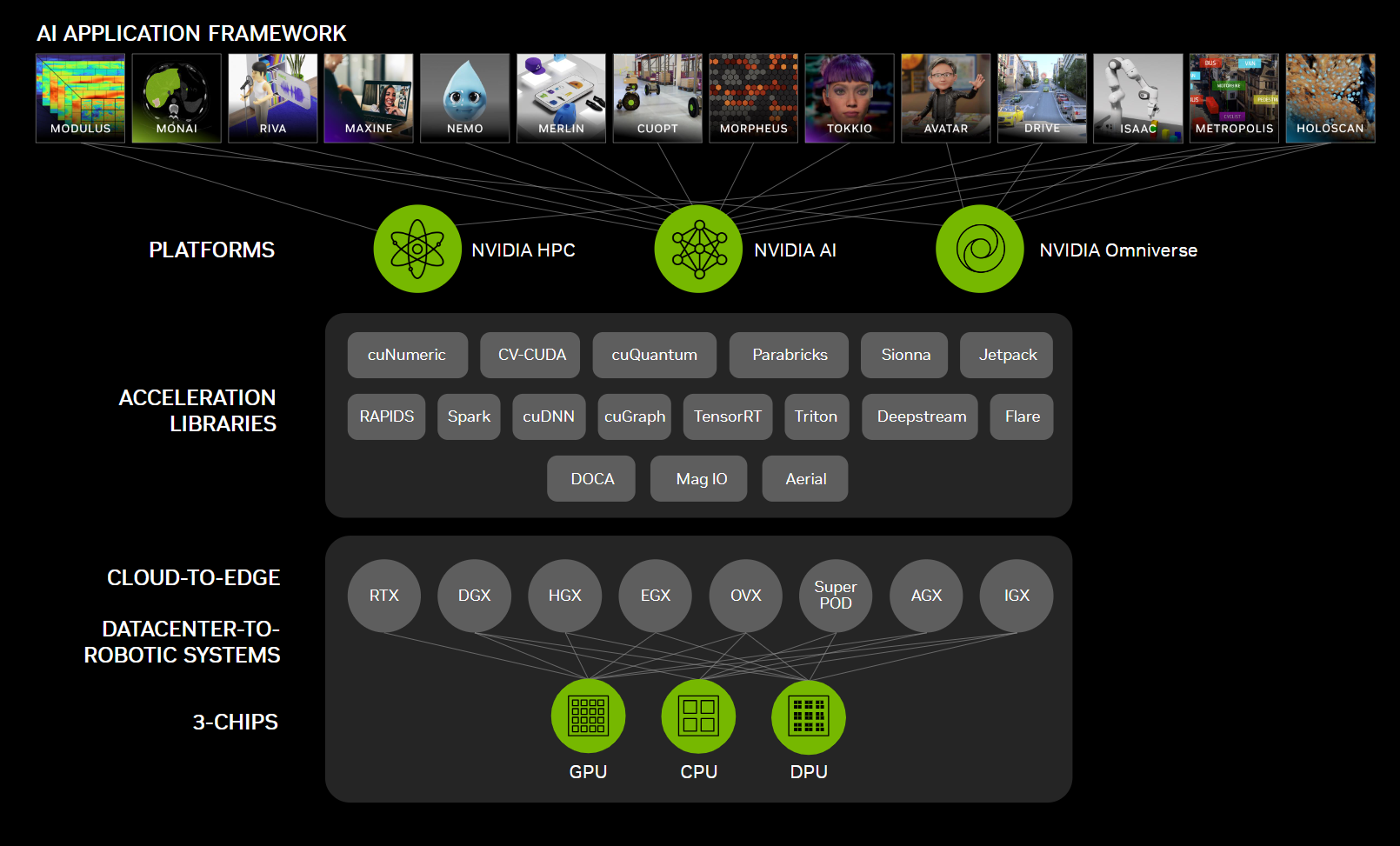

To say expectations are inflated for Nvidia's fourth quarter earnings on Feb. 21 would be an understatement. However, Nvidia has managed to be in the right place at the right time, with the research and development and the GPUs that generative AI workloads demand.

Nvidia is expected to deliver fourth quarter revenue of $20.23 billion with non-GAAP earnings of $4.52 a share. In the third quarter, Nvidia beat revenue estimates by $2 billion. For a bit of perspective, Nvidia's quarterly revenue is approaching what it used to post in a year. In fiscal 2023, Nvidia revenue was $26.97 billion.

Until Supermicro crushed estimates with its AI-optimized gear, there weren't any other pure genAI winners. Supermicro's fourth quarter results indicate that there will be other winners. Keep in mind, Supermicro's gains are largely due to Nvidia-powered systems.

You can expect other vendors to start touting generative AI system demand. Dell and HPE have already said pipelines are being built for generative AI systems.

What's unclear at this point is how much of Nvidia's strength will trickle down to server makers. Most of the genAI buildout has revolved around hyperscale cloud players and Meta. Those hyperscale providers buy white box servers.

Rob Mionis, CEO of contract equipment manufacturer Celestica, said on the company's fourth quarter earnings call:

"We're still in the early innings of the upgrade cycle. It just started about a year or so ago. About 50% of any data center needs to be upgraded, I think we're still in the very early innings of that upgrade cycle. It's a multi-year process. It's certainly five plus years."

Networking gear is being upgraded as well, as generative AI drives broader infrastructure upgrades for AI and machine learning workloads.

Meta's capital spending highlights why Nvidia is poised to win. "By the end of this year, we'll have about 350,000 (Nvidia) H100s, and including other GPUs, which will be around 600,000 H100 equivalents of compute. We're well positioned now because of the lessons that we learned from Reels. We initially underbuilt our GPU clusters for Reels. And when we were going through that, I decided that we should build enough capacity to support both Reels and another Reels-sized AI service that we expected to emerge so we wouldn't be in that situation again," said Meta CEO Mark Zuckerberg.

- Nvidia launches H200 GPU, shipments Q2 2024

- Enterprises seeing savings, productivity gains from generative AI

- Nvidia, Foxconn aim to build AI factories, collaborate on EVs, robotics

The boom is coming for these vendors (maybe).

AMD is seen as a major challenger to Nvidia, and Intel will get some portion of the genAI pie too. These vendors see demand coming, but real revenue surges will take time to develop.

AMD has raised its revenue forecast for accelerated computing chips from $2 billion to $3.5 billion. AMD CEO Lisa Su said:

"It really is mostly customer demand signals. So as orders have come on books and as we've seen programs moved from, let's call it, pilot programs into full manufacturing programs, we have updated the revenue forecast. As I said earlier, from a supply standpoint, we are planning for success. And so, we worked closely with our supply chain partners to ensure that we can ship more than $3.5 billion, substantially more depending on what customer demand is as we go into the second half of the year."

Arm Holdings appears to be another winner. Arm licenses its designs to chipmakers, and CEO Rene Haas said on the company's third quarter earnings conference call that AI is driving revenue growth. "We've seen a significant transition now continuing from our v8 product to our v9 product. Our v9 product garners roughly 2x the royalty rate of the equivalent v8 product," he said.

Haas added that Nvidia's Grace Hopper 200 chips are Arm v9-based, as are the custom data center chips from Amazon Web Services (Graviton) and Microsoft Azure (Cobalt). Haas said:

"There's definitely growth coming from the data center side. So proof points such as Nvidia's Grace Hopper, the Microsoft Cobalt design, the work that AWS has been doing in Graviton. What we are seeing is more and more AI demands in the data center, whether that's around training or inference. And because the Arm solution in the data center, in particular, is extremely good in terms of performance per watt and the constraints that are on today's data center is relative to running these AI workloads puts a huge demand on power, that's a great tailwind for Arm."

Storage is also going to see trickledown generative AI gains.

Western Digital CEO David Goeckeler said on the company's fiscal second quarter earnings call:

"In addition to the recovery in both Flash and HDD markets, we believe storage is entering a multi-year growth period. Generative AI has quickly emerged as yet another growth driver and transformative technology that is reshaping all industries, all companies, and our daily lives...We believe the second wave of generative AI-driven storage deployments will spark a client and consumer device refresh cycle and reaccelerate content growth in PC, smartphone, gaming, and consumer in the coming years. Our Flash portfolio is extremely well positioned to benefit from this emerging secular tailwind."

Goeckeler said that, to date, the genAI investment has come from hyperscalers, and that it will expand to edge devices going forward.

Hyperscale cloud players are driving demand and will reap rewards.

Microsoft, Alphabet (Google) and Amazon earnings results made it clear that the cloud optimization phase has ended and that generative AI workloads are driving demand.

Amazon CEO Andy Jassy said cloud migrations are picking up again. "If you go to the generic GenAI revenue in absolute numbers, it's a pretty big number, but in the scheme of $100 billion annual revenue run rate it's still relatively small. We really believe we're going to drive 10s of billions of dollars of revenue over the next several years (with GenAI). It's encouraging how fast it's growing, and our offerings really resonate with customers," said Jassy, who noted that Amazon Web Services is preaching model choice to land genAI workloads. Also keep in mind that Amazon is leveraging genAI across its commerce and delivery businesses too.

On a conference call with analysts, Alphabet CEO Sundar Pichai said Google Cloud is seeing strong usage of Vertex AI. "Vertex AI has seen strong adoption with the API requests increasing nearly 6x from the first half to second half last year," said Pichai. Pichai also said Duet AI was boosting productivity.

Microsoft CEO Satya Nadella added on his company's most recent earnings call: "We now have 53,000 Azure AI customers, over a third are new to Azure over the past 12 months."

Direct generative AI revenue for these hyperscalers will be hard to pin down. Why? These cloud providers will largely benefit indirectly, as generative AI workloads and usage drive compute, storage and managed cloud revenue. For instance, enterprises are likely to use custom processor options from AWS and Google Cloud for generative AI workloads that don't justify Nvidia's price tag.

- AWS launches Amazon Q, makes its case to be your generative AI stack

- AWS, Microsoft Azure, Google Cloud battle about to get chippy

- Google Cloud CEO Thomas Kurian on DisrupTV: Generative AI will revamp businesses, industries

- Google launches Gemini, its ChatGPT rival, adds AI Hypercomputer to Google Cloud

- Microsoft updates Copilot with ChatGPT-4 Turbo

- Microsoft launches Azure Models as a Service

- Microsoft launches AI chips, Copilot Studio at Ignite 2023

Enterprises’ hybrid genAI deployments to benefit legacy providers.

Hyperscale cloud providers won't garner all the genAI revenue. Enterprises are already thinking of small language models, specific use cases and on-premises deployments for security reasons. Cisco, Dell and HPE should all see gains from generative AI deployments via sales of converged and hyperconverged systems.

These traditional vendors will also benefit from inferencing at the edge.

Comments from these traditional vendors indicate that there will be an on-prem upgrade cycle too.

Also keep in mind that enterprises eyeing generative AI are likely to turn to their preferred service partners. Accenture and Infosys are just two of the services companies citing strong genAI demand. Accenture CEO Julie Sweet noted in December that its GenAI sales in its fiscal first quarter were $450 million, up from $300 million three months earlier. Sweet said:

"We are now focusing on helping our clients in 2024 realize value at scale. We are excited about the recent launch of our specialized services to help companies customize and manage foundation models. We're seeing that the true value of generative AI is to deliver on personalization and business relevance. Our clients are going to use an array of models to achieve their business objectives."

- How AI workloads will reshape data center demand

- The New 2023 Cloud Reality: A Rebalancing Between Private and Public

- Meet Data Inc. and what a post AI company looks like

SaaS, data platforms, the copilot game.

Trickledown generative AI economics is clearly making its way through the tech ecosystem, reaching companies involved in the infrastructure buildout and those providing strategy and expertise. Enterprise software providers will be the battleground to watch.

Before we get into the state of play for software companies, it's worth noting a few winners. Databricks is a winner. Snowflake is a winner. Palantir appears to be landing commercial accounts. MongoDB is surging as developers eye generative AI apps. I'd hazard an educated guess that ServiceNow, with its use-case-specific model approach, is a winner. Salesforce's Data Cloud looks like a winner. But there are some big questions ahead: How many enterprise software vendors can realistically charge extra for generative AI capabilities? How many copilots am I willing to pay for? Why would I pay extra for what will be a standard feature in a few years? Won't generative AI be table stakes for any application in the future?

- Here's why generative AI disillusionment is brewing

- Privacy, data concerns abound in enterprise, says Cisco study

- Why vendors are talking RPOs, pipelines, pilots instead of generative AI revenue

- Generative AI features starting to launch, next comes potential sticker shock

Constellation Research analyst Dion Hinchcliffe noted on the most recent CRTV news segment that CXOs are already pushing back. The pushback is understandable. Simply put, copilots are blowing the budget. Meanwhile, the genAI approaches from Adobe, Workday and Zoom make more sense for customers in the long run.

Sure, Microsoft created a model with a $30 per user per month charge for copilot functionality. Some applications, such as GitHub Copilot, justify the upsell due to the returns provided. Other areas are going to be a tougher sell. Should an enterprise forgo the copilot upsell if it can't generate 50% returns? What's the number? And if generative AI drives consumption or economic value for vendors elsewhere (compute, storage, consumption), shouldn't the copilot tax be waived?

Multiply that math across the entire software stack and CXOs have budget issues ahead. One thing is clear: Not every software vendor is going to be able to charge extra for a copilot or generative AI feature (even if heavily discounted).
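To make that budget math concrete, here is a minimal Python sketch. Only the $30 per user per month price comes from Microsoft's model above; the 1,000-seat headcount, the two other copilot add-ons and their prices, and the 50% return target are hypothetical placeholders for illustration, not actual vendor pricing.

```python
# Hypothetical copilot budget sketch. Only the $30 per user/month price is from
# Microsoft's model; seat counts, other add-ons and their prices are assumptions.

MONTHS_PER_YEAR = 12


def annual_copilot_cost(price_per_user_month: float, seats: int) -> float:
    """Annual spend for one copilot add-on across a given number of seats."""
    return price_per_user_month * seats * MONTHS_PER_YEAR


def stack_cost(addons: dict[str, float], seats: int) -> float:
    """Total annual spend if every seat gets every copilot add-on in the stack."""
    return sum(annual_copilot_cost(price, seats) for price in addons.values())


def required_value_per_user(price_per_user_month: float, target_return: float) -> float:
    """Annual value each user must generate for an add-on to hit a target return.

    A target_return of 0.5 (a 50% return) means value must be 150% of cost.
    """
    return price_per_user_month * MONTHS_PER_YEAR * (1 + target_return)


if __name__ == "__main__":
    seats = 1_000  # hypothetical headcount
    addons = {
        "microsoft_copilot": 30.0,  # price cited in the article
        "vendor_b_copilot": 30.0,   # hypothetical second SaaS vendor
        "vendor_c_copilot": 20.0,   # hypothetical third SaaS vendor
    }
    print(annual_copilot_cost(30.0, seats))    # 360000.0
    print(stack_cost(addons, seats))           # 960000.0
    print(required_value_per_user(30.0, 0.5))  # 540.0
```

Under these assumed numbers, three copilots across 1,000 seats cost $960,000 a year, and each user would need to produce $540 of annual value from the $30 add-on alone to clear a 50% return.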

My take: Trickledown economics for GenAI isn't going to make it to all enterprise software vendors. Not every enterprise vendor is going to be a generative AI winner.

From our underwriter

Hitachi Vantara and Cisco launched a suite of hybrid cloud services, Hitachi EverFlex with Cisco Powered Hybrid Cloud, that aims to automate deployments and provide predictive analytics. The combination integrates Hitachi Vantara's storage, managed services and hybrid cloud management with Cisco's networking and computing stack. Hitachi Vantara and Cisco said customers will see a consistent experience across on-premises and cloud deployments.