OpenAI launched new developer tools, models and GPTs designed for specific use cases.

On its first developer day, OpenAI moved to expand its ecosystem, give developers more tools, and build on the popularity of its models by making them customizable.

For OpenAI, the set of announcements brings it closer to where enterprises are going--smaller large language models (LLMs) and generative AI that is tailored to tasks. These task-specific models--called GPTs--will roll out today to ChatGPT Plus and Enterprise users. Constellation Research analyst Holger Mueller said:

"Buried in the press release in a side sentence is what is the biggest challenge in enterprises adopting LLMs. Important information is in OLTP systems and can't be accessed by LLMs. If the OpenAI GPTs capability to access OlTP databases work - we will enter the next generative AI era."

In a blog post, OpenAI said:

"Since launching ChatGPT people have been asking for ways to customize ChatGPT to fit specific ways that they use it. We launched Custom Instructions in July that let you set some preferences, but requests for more control kept coming. Many power users maintain a list of carefully crafted prompts and instruction sets, manually copying them into ChatGPT. GPTs now do all of that for you."

While OpenAI's models are being used for context-specific use cases in Microsoft productivity applications, the company is also looking to put its own mark on them. The game plan for OpenAI is to build a community and launch a GPT Store, which will feature GPTs across a broad range of categories. Developers will get a cut of the proceeds from the GPT Store.

Key points about GPTs:

- Your chats with GPTs are not shared with developers. If a GPT uses third-party APIs, you control what data can be sent to the API and what can be used for training.

- Developers can use plug-ins and connect to real-world data sources.

- Enterprises can use internal-only GPTs with ChatGPT Enterprise. These GPTs can be customized for use cases, departments, proprietary data and business units. Amgen, Bain and Square are early customers.

- OpenAI also launched Copyright Shield, which will indemnify customers against copyright infringement claims across ChatGPT Enterprise and the developer platform.

That backdrop of GPTs complements a bevy of other OpenAI launches that are more aligned with where the LLM market is headed.

Here's the breakdown.

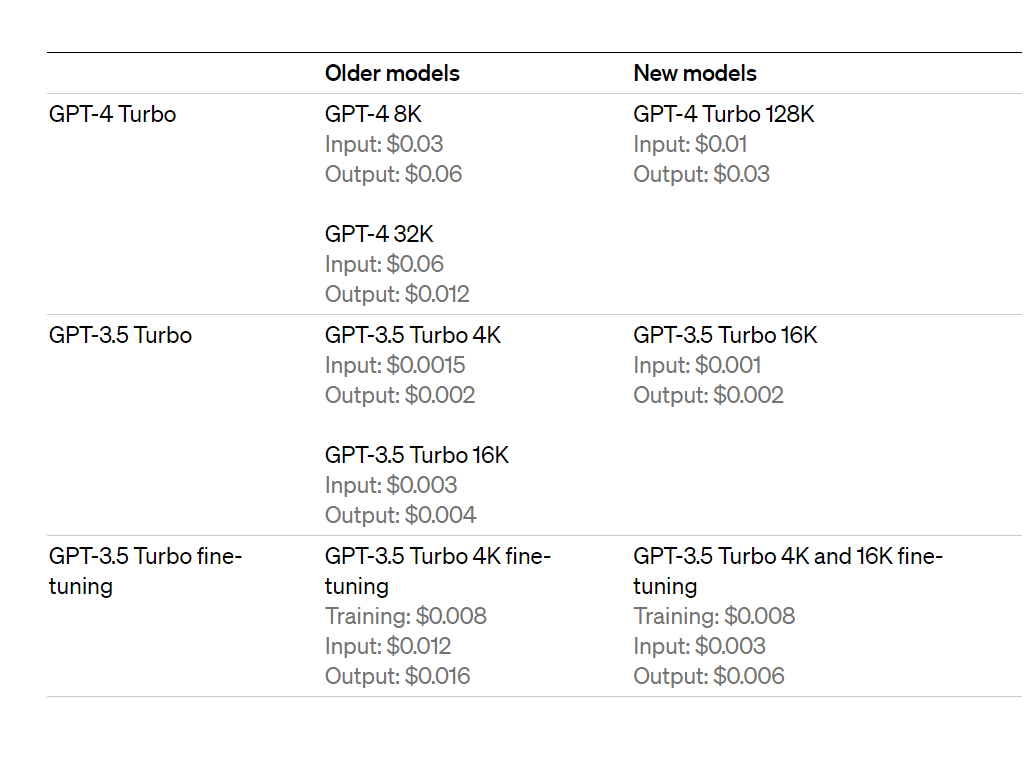

- OpenAI launched GPT-4 Turbo, which has knowledge of events through April 2023 and a 128k context window. Input tokens are priced at one-third, and output tokens at one-half, of the GPT-4 rate.

- GPT-4 Turbo will also be able to generate captions and analyze real-world images in detail and read documents with figures.

- DALL-E 3 is now available to developers so they can programmatically generate images and designs. Prices start at 4 cents per image generated.

- The company improved its function calling features, which let you describe functions of your app or external APIs to models. Developers can now send one message that requests multiple function calls, and function calling accuracy has improved.

- GPT-4 Turbo supports OpenAI's new JSON mode.

- OpenAI added reproducible outputs to its models for more consistent responses. Log probabilities for output tokens will also be released so developers can build and refine features.

- The company released the Assistants API in beta for developers to build agent-like experiences in applications. These assistants use Code Interpreter to write and run Python code, Retrieval to leverage knowledge outside of OpenAI models and Function calling.

- Developers will also get text-to-speech APIs to generate human-quality speech.
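To make the function calling and reproducible output items above more concrete, here is a minimal Python sketch. It only builds a request payload and dispatches a simulated model reply locally; nothing is sent to OpenAI. The helper functions (`get_weather`, `get_time`), the model identifier, and the field names (`tools`, `tool_calls`, `seed`) are assumptions based on OpenAI's Chat Completions documentation around the launch, not details from this article, so check them against the current API reference.

```python
import json

# Hypothetical local functions the model may ask the app to run.
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny"}  # placeholder data, not a real lookup

def get_time(city: str) -> dict:
    return {"city": city, "time": "09:00"}  # placeholder data, not a real lookup

LOCAL_FUNCTIONS = {"get_weather": get_weather, "get_time": get_time}

def describe(name: str, description: str) -> dict:
    """Describe one function in the JSON Schema style assumed for the `tools` field."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }

def build_request(user_text: str) -> dict:
    """Build (but do not send) a chat request payload."""
    return {
        "model": "gpt-4-1106-preview",  # assumed GPT-4 Turbo preview identifier
        "seed": 42,  # assumed parameter for reproducible outputs
        "messages": [{"role": "user", "content": user_text}],
        "tools": [
            describe("get_weather", "Get the weather forecast for a city"),
            describe("get_time", "Get the local time for a city"),
        ],
    }

def dispatch_tool_calls(tool_calls: list) -> list:
    """Run every function call requested in a single model message."""
    results = []
    for call in tool_calls:
        fn = LOCAL_FUNCTIONS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return results

# Simulated model reply that requests two function calls in one message.
simulated_calls = [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
    {"id": "call_2", "type": "function",
     "function": {"name": "get_time", "arguments": '{"city": "Paris"}'}},
]
tool_messages = dispatch_tool_calls(simulated_calls)
```

In a real application, the payload from `build_request` would be sent to the API and the entries in `tool_messages` would be appended to the conversation so the model can compose its final answer.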

As for prices, OpenAI models are enabling lower costs.
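As a rough illustration of what the GPT-4 Turbo price ratios cited above mean for a single request, the short Python sketch below compares costs. The GPT-4 per-1K-token prices and the token counts are illustrative assumptions, not figures from this article; only the 3x input and 2x output ratios come from the text.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Cost in dollars of one request, given per-1K-token prices."""
    return (input_tokens / 1000 * input_price_per_1k
            + output_tokens / 1000 * output_price_per_1k)

# Assumed baseline GPT-4 prices in dollars per 1K tokens (illustrative only).
GPT4_INPUT = 0.03
GPT4_OUTPUT = 0.06

# Apply the ratios from the article: 3x cheaper input, 2x cheaper output.
TURBO_INPUT = GPT4_INPUT / 3
TURBO_OUTPUT = GPT4_OUTPUT / 2

# Hypothetical workload: a long prompt with a short answer.
gpt4_cost = request_cost(10_000, 1_000, GPT4_INPUT, GPT4_OUTPUT)
turbo_cost = request_cost(10_000, 1_000, TURBO_INPUT, TURBO_OUTPUT)
savings = 1 - turbo_cost / gpt4_cost

print(f"GPT-4: ${gpt4_cost:.2f}, GPT-4 Turbo: ${turbo_cost:.2f}, savings: {savings:.0%}")
```

Because input tokens get the larger discount, prompt-heavy workloads such as long-document analysis see the biggest relative savings under these assumptions.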