The unfortunate reality of today’s highly digitized and cloud-powered organizations is that most continue to struggle to reach anything close to the full potential value of their data. For most companies, data remains the single most under-leveraged, high-value asset they have. The CIOs I’ve spoken to about this challenge over the last several years still lament that they cannot buy or build systems that fully tap into what the organization actually knows from the information already on hand.

The Prime Challenge: Getting Data to Where It Needs to Be

Properly unleashing the value of data has long been the primary end goal of enterprise digitization and transformation in most organizations. Building capabilities to manage its growth, velocity, scale, and agility is, and will continue to be, a foundational activity of a strong business, as well as of strategic, future-looking efforts like digital transformation. Enterprises have spent the better part of the last decade starting to open up their data silos to unleash data across the organization, all while powering more and more automation with that data. CIOs and tech leaders must now be a strategic "enabler to ensure data fully serves the organization", as I noted recently.

In short, much progress has been made with putting data to work, but it’s still not enough. A large gap remains between what is possible and what many organizations are actually achieving. What's more, this gap has significant disruptive and competitive implications.

In recent years, there have been several generations of market activity around the tools and technologies to improve how well we can actually use the data that directly informs and empowers our businesses. These cycles have variously included data lakes, data warehouses, backward-looking data analytics, and business intelligence.

More recently, many companies have also begun to aggregate and transform their data storage ecosystems by moving to the cloud. Most recently, the push has been to adopt cloud-native architectures, to tap into better models for using the cloud and make new IT investments more future-proof, among other benefits.

Yet none of this sufficiently addresses the core challenge in getting value from an organization's data estate. One key trend is to use data to enhance analytics capabilities and improve increasingly personalized digital customer journeys. But a surprisingly small number of enterprises have genuinely harnessed the power of the relevant data to achieve the revenue potential that is possible.

The reason, after observing a great many different efforts and their stable of data technologies and techniques over the years, is this: Companies’ most valuable data, created through the countless interactions they have with their customers, remains too firmly trapped in the next generation of silos or, if it’s unleashed, cannot be tapped into quickly enough.

Despite all the investments we’ve made, the very platforms and tools we use to harvest data for our systems can themselves foster new silos of operational data. Unexpectedly, these new data farms have expanded rapidly to support urgent and important activities like digital transformation. And that’s not all: As applications and IT systems have become ever more decoupled and finer-grained, they have proliferated in number, creating new silos faster than we can eliminate them. Finally, even if data can be accessed, it often cannot be reached rapidly enough to support today’s increasingly fast-moving digital business processes. The key mantra here is simply that data in silos tends to be data not used.

What then can help organizations make the next major functional leap to close the gaps that remain in taking full value from our data? The key to unlocking this major step in progress is realizing that the dramatic increase in the complexity of our data architectures is counterintuitively making it harder to optimize for scale and speed. It is also layering on costs to optimize for often-narrow scenarios. This sprawl of interlocking databases and repositories is also quite expensive to license and maintain.

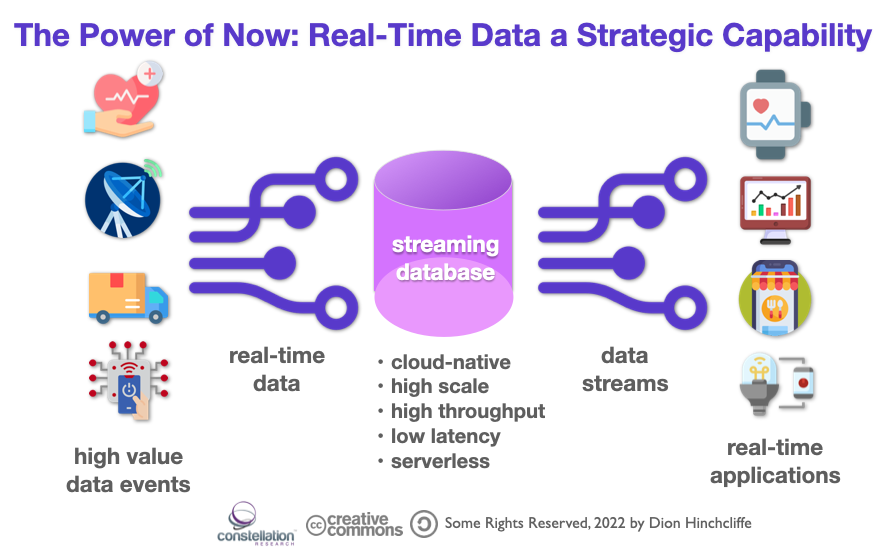

The Value of The Data of Now

To compete today, it's simply not enough to capture and store bottomless streams of data from the hundreds of applications that power the typical enterprise, then hope to eventually mine it for intelligence at some later date. A new approach is growing in popularity: tapping into the streams directly as the data moves (or otherwise being able to treat enterprise data as data in motion). Data innovators have begun to run their businesses and outcompete using real-time data. This is creating real-time intelligence and actions tied to important events or vital changes in the operating environment.

For example, Barracuda Networks, a worldwide leader in threat detection security, application delivery and data protection solutions, has been employing a real-time approach to data using a multicloud-friendly, serverless capability known as Astra DB from DataStax, built on Apache Cassandra, the popular very high-scale NoSQL database. Astra DB’s ability to scale thousands of simultaneous data flows from around the world to deliver real-time threat detection services literally defines Barracuda’s competitive advantage. Barracuda protects customers with its Advanced Threat Protection (ATP) service, which analyzes traffic across all major threat vectors, including email, web browsing, web applications, remote users, mobile devices, and the network perimeter. They use this low-latency, high-throughput, high-scale data capability to directly enable millions of simultaneous customers to stave off security issues in real-time around the globe with zero latency or downtime. The ability to channel thousands of simultaneous data flows from around the world into a real-time service is the strategic capability that makes this possible.

The Future Belongs to Simple, Streamlined, Real-Time Cloud-Native Data at Scale

I recently hosted a LinkedIn Live session with my latest predictions on data and digital transformation. In the session I noted that “just having data in all our SaaS systems and our various public clouds and data centers isn’t enough. It's activating that data, it's reaching into it and using it” that matters most at the end of the day. Organizations seeking to make the next big leap in progress will move to new real-time data architectures in the cloud. Businesses that can tap into the real-time windows of their data will be engines of the next generation of innovation and growth. They will better automate operational decisions when it matters most. They will offer compelling, in-the-moment digital experiences that customers now expect and demand.

The enormous challenge, and sustainable advantage, of turning real-time data into revenue is one that’s faced by most enterprises today. But now there is a new generation of capabilities available, explicitly designed for the highly dynamic and limitless world of the cloud and edge that we are rapidly moving to. Technology leaders can now move to best-of-breed capabilities that enable organizations to break their most vital data assets out of silos, create open and shared operational capabilities that unleash that data, and wield it to its fullest potential.

Additional Research and Analysis

The Cloud Reaches an Inflection Point for the CIO in 2022

CIOs Talk Data Strategy (with quotes from Dion)

The Strategic New Digital Commerce Category of Product-to-Consumer (P2C) Management

How DataStax is Emerging as a Strategic Anchor in Cloud Data Management