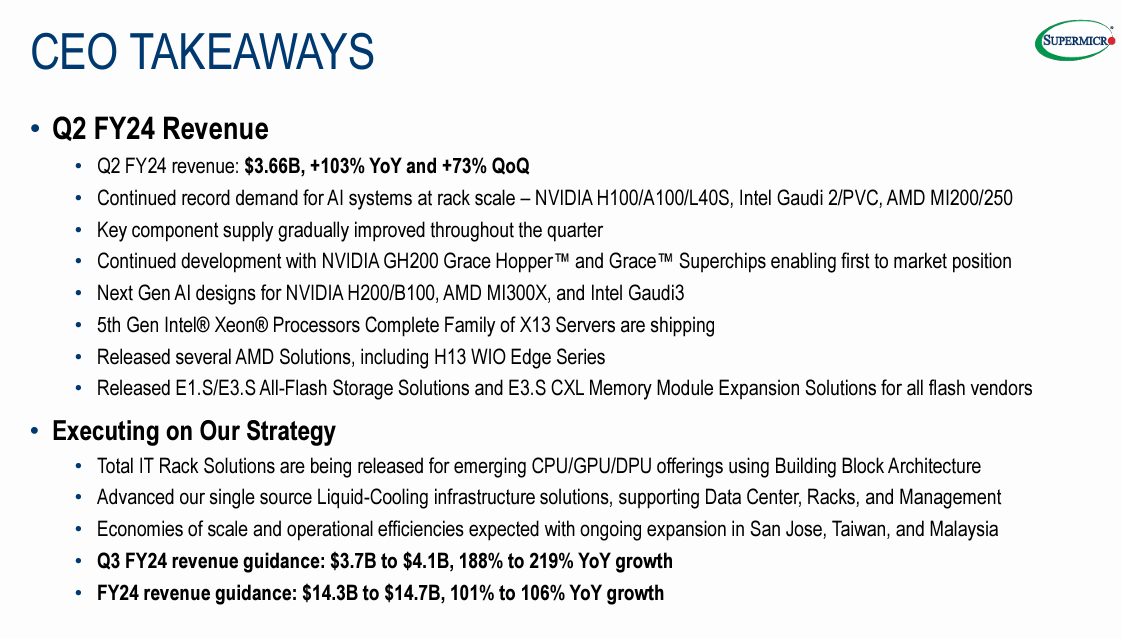

Supermicro raised its outlook for its fiscal second quarter and then handily beat the updated targets.

The company, which is benefiting from the generative AI workload boom, reported revenue of $3.66 billion, up from $1.8 billion a year ago. Supermicro had preannounced revenue of $3.6 billion to $3.65 billion.

The company reported second-quarter earnings of $296 million, or $5.10 a share. Non-GAAP earnings were $5.59 a share, compared with $3.26 a year ago. That was above the range of $5.40 to $5.55 a share that Supermicro gave on Jan. 18.

Charles Liang, President and CEO of Supermicro, said:

"While we continue to win new partners, our current end customers continue to demand more Supermicro’s optimized AI computer platforms and rack-scale Total IT Solutions. As our innovative solutions continue to gain market share, we are raising our fiscal year 2024 revenue outlook to $14.3 billion to $14.7 billion."

Supermicro's results highlight how the generative AI data center buildout is now benefiting not only Nvidia but also hardware vendors downstream.

Both Dell Technologies and HPE have signaled strong pipelines for generative AI systems.

As for the outlook, Supermicro projected third-quarter sales of $3.7 billion to $4.1 billion with non-GAAP earnings of $5.20 a share to $6.01 a share.

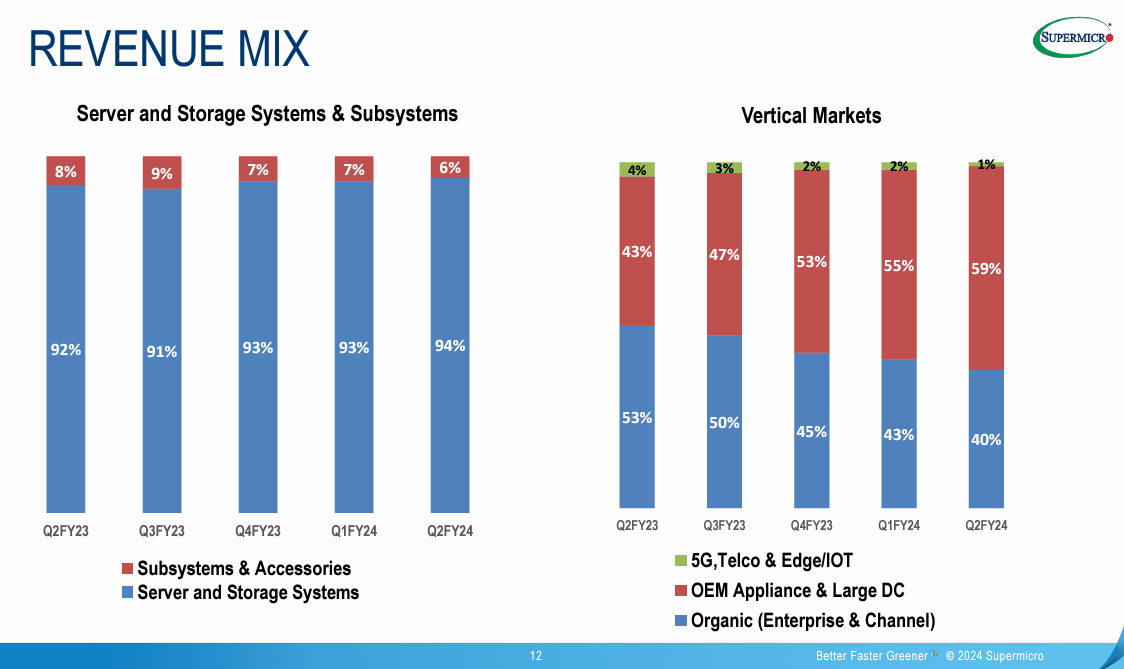

Supermicro said it is seeing record demand for AI systems powered by NVIDIA H100/A100/L40S, Intel Gaudi 2/PVC and AMD MI200/250. Revenue is being driven by hyperscale data center builds in the US.

On a conference call with analysts, Liang said demand was strong for Nvidia-powered systems, but AMD and Intel systems were on the runway.

"Our AI rack-scale solutions, especially the Deep-Learning and LLM-optimized based on NVIDIA HGX-H100, continue gaining high popularity. The demand for AI inferencing systems and mainstream compute solutions has also started to grow.

"We have been preparing to more than double the size of our current AI portfolio with the coming soon NVIDIA CG1, CG2 Grace Hopper Superchip, H200 and B100 CPUs -- GPUs, L40S Inferencing-optimized GPUs, AMD MI300X/MI300A, and Intel’s Gaudi 2 and Gaudi 3. All these new platforms will be ready for high volume production in the coming month and quarters."

Liang said Supermicro is further optimizing its Nvidia GPU-based systems, while its AMD AI-optimized gear is sampling. Supermicro plans the same for Intel's Gaudi platform.

Other takeaways:

- Demand for liquid-cooled systems will pick up. "I think liquid cooling will be the trend and we continue to make ourselves ready and try our best to support the customer, including providing some help to their data center infrastructure," said Liang. "I believe liquid cooling percentage will continue to grow, but at this moment most of the shipping is still air cooled."

- AI systems beyond generative AI are seeing growth. "Other than generative AI deep learning segment continues to grow very strong," said Liang. "Our inferencing opportunity in general CPU customer base also growing. So many verticals around the world need more inferencing solutions as well, including private cloud and data center."

- Demand will expand beyond large enterprises. "The economies of scale is very important to us when we further grow our total revenue, we will have a more large scale customers and more middle-sized and small-sized customers as well," said Liang.