Snowflake launched Snowpark Container Services in private preview in a move that will give developers more options to deploy and run generative AI applications.

The announcement, outlined at Snowflake Summit, follows up on the partnership with Nvidia. Snowpark Container Services expands Snowflake's ability to run workloads, including full-stack applications, hosting of large language models (LLMs) and model training.

Constellation Research analyst Doug Henschen said "Snowpark Container Services are a linchpin for the Nvidia partnership and a catalyst for more capabilities Snowflake is promising."

Henschen added that Snowflake is enticing developers to do more on its data platform and create generative applications.

"Snowpark Container Services, which are entering private preview, will run in an abstracted Kubernetes environment for which Snowflake handles the complexity of managing and scaling capacity. It’s a way to run an array of application services directly on Snowflake data but without burdening the data warehouses and performance sensitive analytical applications that run on them. AI model building, data science workloads and Snowflake Native Applications are all example use cases. What they all have in common is that they run in the Snowflake environment, so there’s no need for data movement and you can use all the data security, access and governance controls already set up in Snowflake."

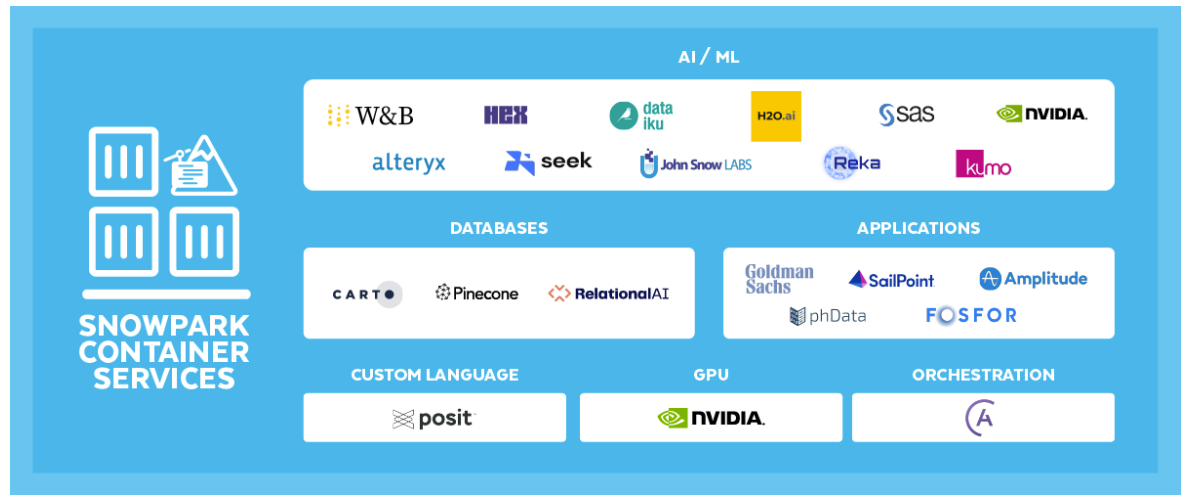

To that end, Snowflake said Snowpark Container Services also features partnerships with Nvidia as well as Alteryx, Astronomer, Dataiku, Hex and SAS.

According to Snowflake, Snowpark Container Services will expand the scope of Snowpark by giving developers more infrastructure options to run workloads within its platform. Customers will be able to gain access to third-party software and apps including LLMs, APIs, Notebooks and MLOps tools.

At Snowflake Summit, Snowflake also outlined the following:

- The company outlined a new LLM to focus on documents. Document AI, which is in private preview, is enabled by the Applica acquisition. Document AI is a generative AI tool for document processing and gives Snowflake the ability to address more data types.

- Snowflake said it will extend performance and governance to Apache Iceberg, an open source standard for open table formats. The company outlined Iceberg Tables, which will enable enterprises to work with data in their own storage in Apache Iceberg's format.

- Snowflake outlined more than 25 new Snowflake Native Apps on Snowflake Marketplace. The Snowflake Native App Framework is in public preview on AWS and available for developers.

Snowflake, Databricks and MongoDB are building out their platforms to appeal to developers creating generative AI apps. These data platform players are aiming to show the benefits of working within their respective data environments while building out LLMs. Snowflake CEO Frank Slootman quipped that "AI is being mentioned so much it's like there's only two letters in the alphabet," but "in order to have an AI strategy you have to have a data strategy."

Here's Henschen's take on the competitive environment.

"Many vendors are talking about helping customers to use their data to build AI capabilities, analytical capabilities and next-generation, cloud-native apps harnessing that data and those capabilities. These are still preview capabilities, but Snowflake is putting the pieces in place to help its customers do more with their data all on the Snowflake platform. Rivals like Databricks and companies in other categories, like developer-focused MongoDB, are also extending their platforms and filling out gaps in their portfolios to help customers harness their data and do more on their platforms. Snowflake’s core appeal remains data warehousing, but it’s expanding."

Related:

- Databricks adds on to the Lakehouse, acquires MosaicML for $1.3 billion

- MongoDB launches Atlas Vector Search, Atlas Stream Processing to enable AI, LLM workloads

In addition to Snowpark Container Services, Snowflake announced a new set of Snowpark machine learning APIs (public preview), a Snowpark Model Registry (private preview) and Streamlit in Snowflake (public preview shortly).

Key items about Snowpark Container Services include:

- Snowpark Container Services enables customers to build in any programming language and deploy on a wide range of infrastructure choices.

- The service can be used as part of a Snowflake Native App, which is in public preview on AWS.

- Snowpark Container Services will be able to run third-party generative AI models directly within Snowflake accounts.

- Nvidia AI Enterprise will be integrated with Snowpark Container Services. Nvidia AI Enterprise includes more than 100 frameworks, pretrained models and development tools.

- Streamlit in Snowflake will enable developers to use Python code to develop apps.