Microsoft and its merry band of PC makers launched AI PCs at scale. The launch of Copilot+ PCs, ahead of Microsoft Build 2024, was notable for a host of reasons once you get past how executives were a bit obsessed with outperforming Apple's MacBook.

Consider:

- The first Copilot+ PCs will launch with Qualcomm's Snapdragon X Elite and Snapdragon X Plus processors. The rollout of AI PCs is big for Qualcomm and the Arm PC ecosystem.

- Microsoft said it will also have AI PCs powered by AMD and Intel processors paired with Nvidia and AMD GPUs. AMD CFO Jean Hu said at an investor conference that the chipmaker will outline its AI PC processors in "coming weeks." "It's a very exciting product and very competitive to power the AI applications in the PC market. We do believe AI PC is a very significant inflection point. It will potentially help refresh the PC market," said Hu.

- Businesses can easily add AI PCs to the mix using the same management controls they use today. Copilot+ PCs are billed as a productivity and collaboration booster.

- Copilot+ PCs have neural processing units (NPUs) capable of more than 40 trillion operations per second. In other words, a lot of inferencing work can be done on the edge.

- During his Build 2024 keynote, Microsoft CEO Satya Nadella said Copilot+ PCs include more than 40 models, among them Microsoft's new Phi-3 models, OpenAI's GPT-4o, and other small and large language models. "We have 40 plus models available out of the box including Phi-3 Silica, our newest member of our small language family model designed to run locally on Copilot+ PCs to bring that lightning-fast local inference to the device. The copilot library also makes it easy for you to incorporate RAG (retrieval-augmented generation) inside of your applications on the on-device data," said Nadella.

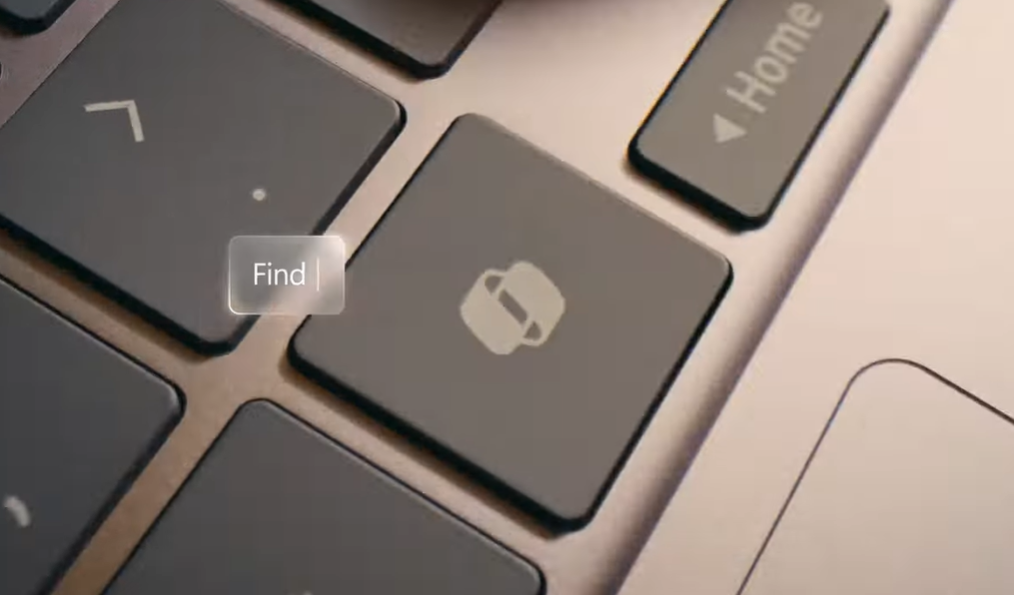

- Copilot+ PCs also ship with features such as Recall, which turns your PC into a recorder of the information, relationships and associations you encounter. Recall can help you find items you've seen before and use them to create personalized experiences. Cocreator and Live Captions are additional AI-driven features.

Add it up and CIOs are going to start testing these Copilot+ PCs and pondering their refresh cycles. Perhaps there's a future of work or productivity play to be had. The reality is that CIOs probably have more business transformation projects with higher priority rankings.

This post first appeared in the Constellation Insight newsletter, which features bespoke content weekly and is brought to you by Hitachi Vantara.

But what's surprising is how the big picture was glossed over with the Copilot+ PC launch. If these AI PCs scale, you're going to have a lot of computing power at the edge that can be used for inferencing and potentially even training.

Think peer-to-peer networked model inference. Smartphones are increasingly building in the capacity to run AI models. Now add PCs. Generative AI could be decentralized, moving away from the centralized AI factory vision that's popular today. Generative AI requires costly computing infrastructure. Why wouldn't you offload some of that work to nodes on the edge?

For businesses, this emerging distributed AI computing system could mean using the PC to run models on personally identifiable information (think mortgage applications) and to build personalized apps on the fly. You could automate any process that touches a customer using that person's compute power.

Are we there yet? Not really, since these AI PCs just launched and it's doubtful any of these features are going to cause a buying frenzy. But many PCs are already past their normal shelf life, so the refresh cycle will drive some demand.

And the economic incentives are there to figure out a more peer-to-peer computing approach. At some point, enterprises are going to grow weary of paying up for AI compute. Finding a way to leverage AI PCs in the field could be a salve. There's a reason Dell Technologies considered AI PCs as part of its AI factory vision.
