On-premises enterprise AI workloads are getting more attention as technology giants bet that enterprise demand will take off in 2025 because of data privacy, competitive advantage and budgetary concerns.

The progression of these enterprise AI on-premises deployments remains to be seen, but the building blocks are now in place.

To be sure, the generative AI buildout so far has been focused on hyperscale cloud providers and companies building large language models (LLMs). These builders, many of them valued at more than a trillion dollars, are paying another trillion-dollar giant in Nvidia. That GPU reality is a nice gig if you can get it, but HPE, Dell Technologies and even the Open Compute Project (OCP) are thinking ahead toward on-prem enterprise AI.

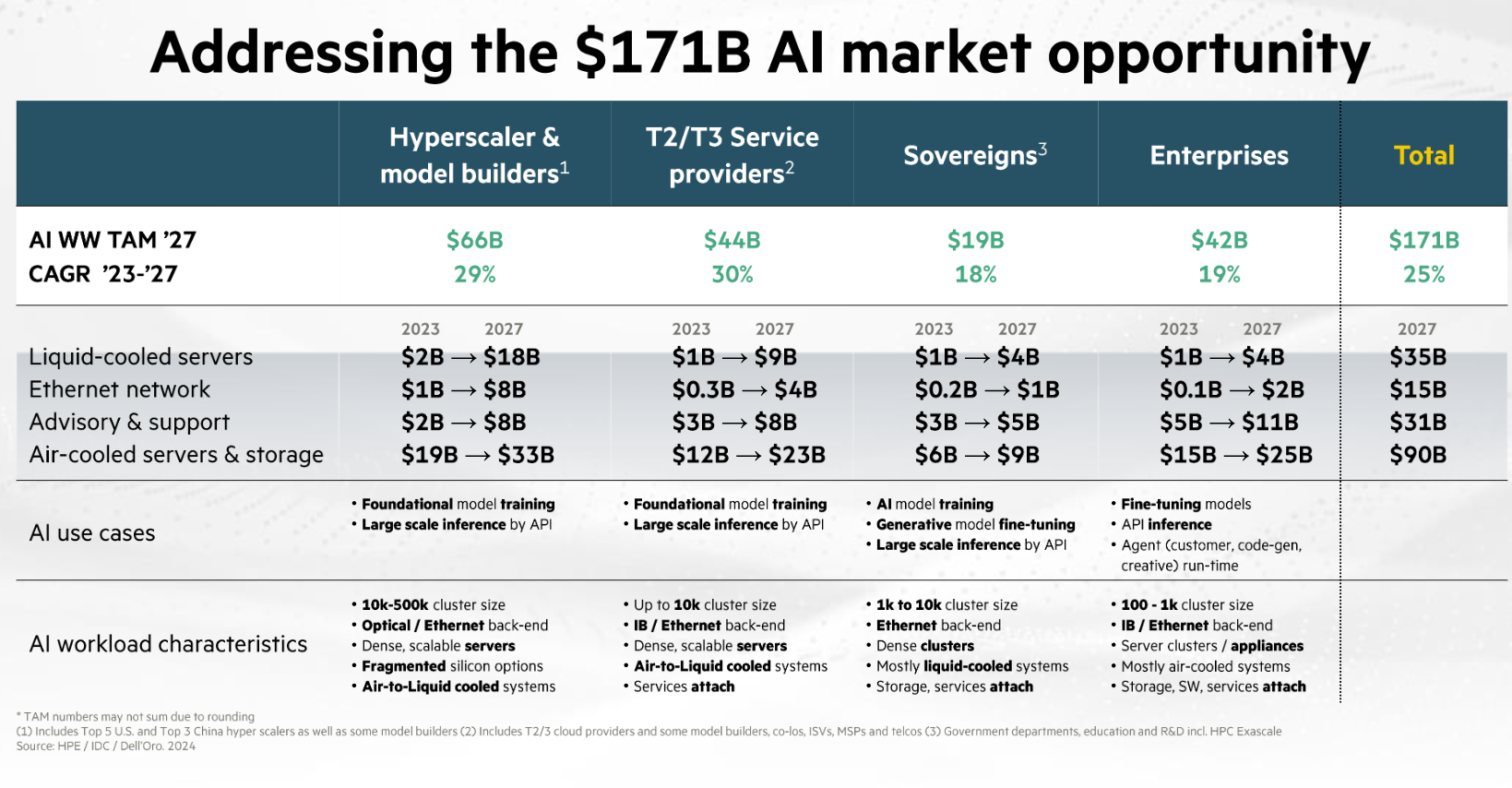

During HPE's AI day, CEO Antonio Neri outlined the company's market segments including hyperscalers and model builders. "The hyperscaler and model builders are training large language AI models on their own infrastructure with the most complex bespoke systems. Service providers are providing the infrastructure for AI model training or fine-tuning to customers so they can place a premium on ease and time to deployment," said Neri.

Hyperscalers and model builders are a small subset of customers, but can have more than 1 million GPUs ready, added Neri. The third segment is sovereign AI clouds to support government and private AI initiatives within distinct borders. Think of these efforts as countrywide on-prem deployments.

This post first appeared in the Constellation Insight newsletter, which features bespoke content weekly and is brought to you by Hitachi Vantara.

The enterprise on-premises AI buildout is just starting, said Neri. Enterprises are moving from "experimentation to adoption and ramping quickly." HPE expects the enterprise addressable market to grow at a 90% compound annual growth rate and represent a $42 billion opportunity over the next three years.
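To get a feel for what that growth rate implies, here is a rough back-of-the-envelope sketch in Python. It assumes three full annual compounding periods ending at $42 billion, which is one reading of HPE's figures and not something the company spelled out. The function names and the implied starting point are illustrative only.

```python
# Back-of-the-envelope sketch: what starting market size is implied by
# a 90% CAGR reaching $42 billion? ASSUMPTION: three full annual
# compounding periods; HPE did not state the base year or base value.

def implied_base(target: float, cagr: float, years: int) -> float:
    """Starting value that compounds to `target` after `years` at `cagr`."""
    return target / (1.0 + cagr) ** years


def project(base: float, cagr: float, years: int) -> list[float]:
    """Year-by-year values, from year 0 (the base) through `years`."""
    return [base * (1.0 + cagr) ** year for year in range(years + 1)]


TARGET_BILLIONS = 42.0  # from HPE's stated opportunity
CAGR = 0.90             # from HPE's stated growth rate
YEARS = 3               # assumed number of compounding periods

base = implied_base(TARGET_BILLIONS, CAGR, YEARS)
path = project(base, CAGR, YEARS)

for year, value in enumerate(path):
    print(f"Year {year}: ${value:.1f}B")
```

Under those assumptions, the market would nearly double each year from a starting point of roughly $6 billion.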

Neri said:

"Enterprises must maintain data governance, compliance, security, making private cloud an essential component of the hybrid IT mix. The enterprise customer AI needs are very different with a focus on driving business productivity and time to value. Enterprises put a premium on simplicity of the experience and ease of adoption. Very few enterprises will have their own large language AI models. A small number might build language AI models, but typically pick a large language model off the shelf that fits the needs and fine-tune these AI models using their unique data."

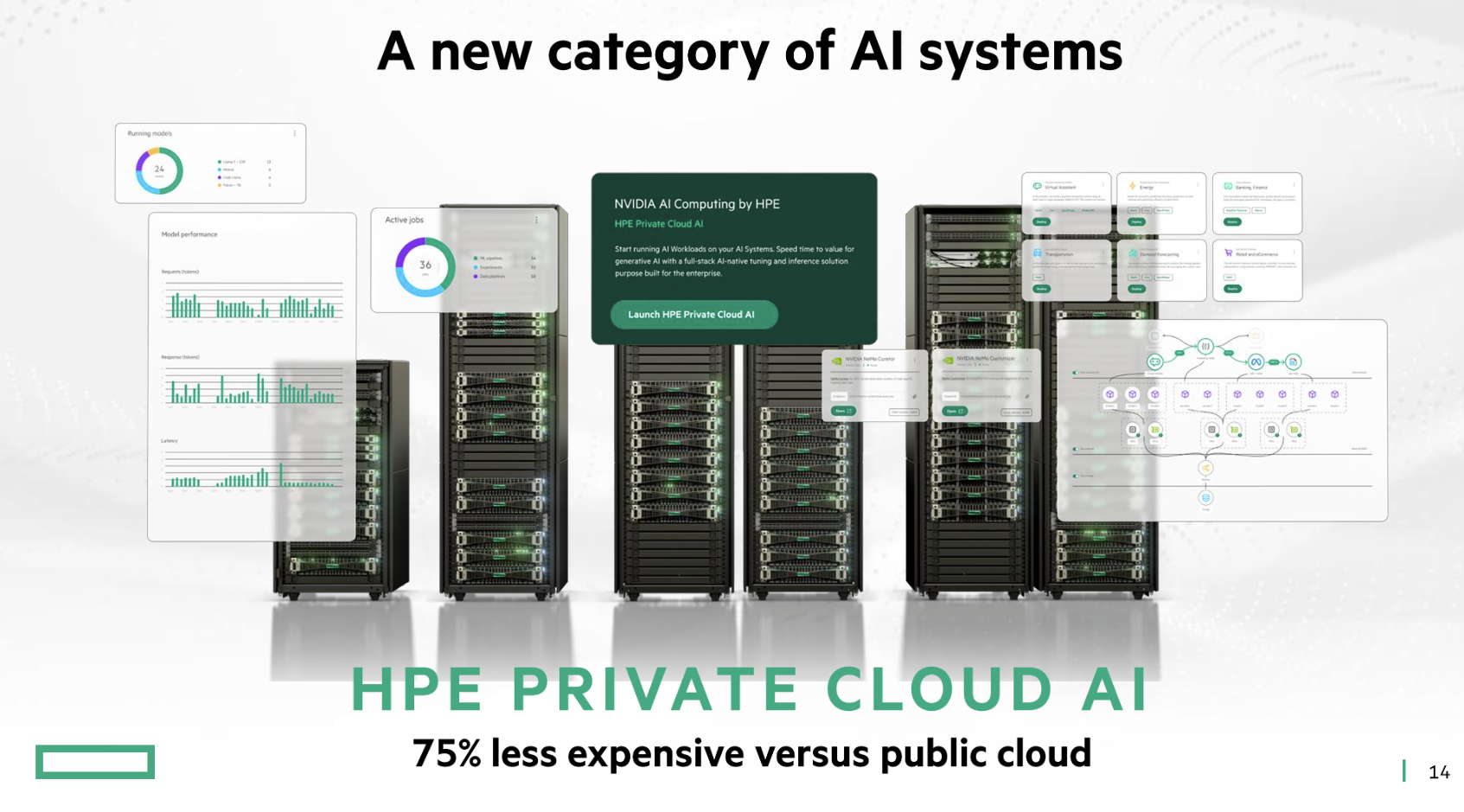

Neri added that these enterprise AI workloads are occurring on premises or in colocation facilities. HPE is targeting that market with an integrated private cloud system with Nvidia and now AMD.

HPE's Fidelma Russo, GM of Hybrid Cloud and CTO, said enterprises will look to buy AI systems that are essentially "an instance on-prem made up of carefully curated servers, networking and storage." She highlighted how HPE has brought LLMs on-premises for better accuracy and training on specific data.

These AI systems will have to look more like hyperconverged systems that are plug and play because enterprises won't have the bandwidth to run their own infrastructure and don't want to pay cloud providers so much. These systems are also likely to be liquid cooled.

Neil MacDonald, EVP and GM of HPE's server unit, outlined the challenges of enterprise on-prem AI:

- The technology stack is alien and doesn't resemble classic enterprise cloud deployments.

- There's a learning curve on top of the infrastructure and software stack to master.

- The connection from generative AI model to enterprise data requires business context and strategy. Enterprises will also struggle to get to all of that data.

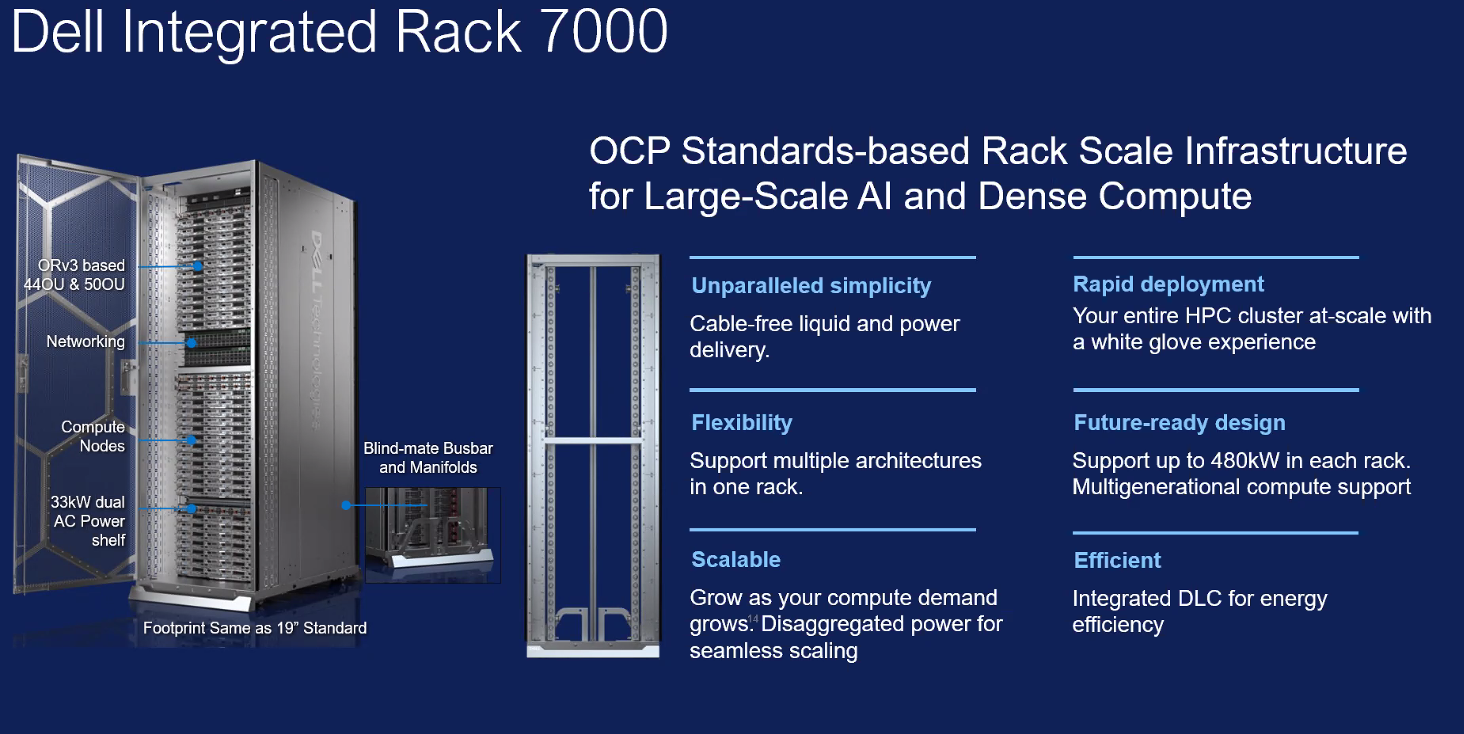

Dell Technologies' recent launches of AI Factories with Nvidia and AMD highlight how enterprise vendors are looking to provide future-proof racks that can evolve with next-generation GPUs, networking and storage. These racks obviously appeal to hyperscalers and model builders, but play a bigger role by giving enterprises faith that they aren't on a never-ending upgrade cycle.

To that end, the Open Compute Project (OCP) added designs from Nvidia and various vendors to standardize AI clusters and the data centers that host them. The general idea is that these designs will cascade down to enterprises looking toward on-premises options.

George Tchaparian, CEO at OCP, said the goal of creating a standardized "multi-vendor open AI cluster supply chain" is that it "reduces the risk and costs for other market segments to follow."

Rest assured that the cloud giants will be talking about on-premises-ish deployments of their clouds. At the Google Public Sector Summit, the company spent time talking to agency leaders about being the "best on-premises cloud" for workloads that are air-gapped and separated from networks but can still run models. Oracle’s partnership with all the big cloud providers is fueled in part by being a bridge to workloads that can’t go to the public cloud.

Constellation Research analyst Holger Mueller said he is a fan of on-premises AI deployments, with a twist: these deployments should be built on a cloud stack. He said:

"CxOs need to keep in mind that on-premises AI is pitched by vendors that have failed at providing a public cloud option - the most prominent being HPE and Dell. As there is merit for on premises - for speed, privacy and compliance - the bottleneck remains NVidia GPUs. As these are better utilized in the cloud, the cloud providers have a chance to pay more than any enterprise. And this is just compute - we have not even talked about storage / data. CxOs need to be aware of moving data and workloads every year or so - which also means extra cost, downtime and risk - something enterprises cannot afford. In short - the future of AI is in the cloud."

The cynic in me would dismiss these on-premises AI workload mentions and think everything would go to the cloud. But there are two realities to consider that make me more upbeat about on-prem AI:

- Infrastructure at the data center and edge will have to move closer to the data.

- Accounting.

The first item is relatively obvious, but the accounting one is more important. On a Constellation Research client call about the third-quarter AI budget survey, there was a good bit of talk about the stress on enterprise operating expenses.

Simply put, the last two years of generative AI pilots have taken budget from other projects that can't necessarily be put off much longer. Given the amount of compute, storage and cloud services required for generative AI science projects, enterprises are longing for the old capital expenditure approach.

If an enterprise purchases AI infrastructure, it can depreciate those assets, smooth out expenses and create more predictable costs going forward.
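The smoothing effect is easiest to see with a simple straight-line depreciation schedule. The Python sketch below is a minimal illustration, not accounting guidance. The purchase cost, salvage value and useful life are hypothetical numbers chosen for the example, and straight-line is only one of several depreciation methods an enterprise might use.

```python
# Minimal straight-line depreciation sketch. All dollar figures and
# useful lives below are HYPOTHETICAL, chosen only for illustration.

def straight_line_schedule(cost: float, salvage: float, life_years: int) -> list[float]:
    """Equal annual depreciation expense over the asset's useful life."""
    if life_years <= 0:
        raise ValueError("life_years must be positive")
    annual_expense = (cost - salvage) / life_years
    return [annual_expense] * life_years


PURCHASE_COST = 5_000_000.0  # hypothetical AI rack purchase
SALVAGE_VALUE = 500_000.0    # hypothetical residual value

# A typical five-year life versus a shorter three-year life.
five_year = straight_line_schedule(PURCHASE_COST, SALVAGE_VALUE, 5)
three_year = straight_line_schedule(PURCHASE_COST, SALVAGE_VALUE, 3)

print("5-year schedule:", five_year)
print("3-year schedule:", three_year)
```

In both cases the same total amount is expensed. The shorter the assumed useful life, the larger the annual charge, which is why the pace of hardware change matters to the capital expenditure case.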

The problem right now is that genAI is evolving so fast that a capital expenditure won't have a typical depreciation schedule. That's why these future-proof AI racks and integrated systems from HPE and Dell start to matter.

With AI building blocks being more standardized, enterprises will be able to have real operating expense vs. capital expense conversations. CFOs are arguing that on-prem AI is simply cheaper. To date, generative AI means that enterprises can't manage operating expenses well and budgets aren't sustainable. The bet here is that capital budgets are going to make more sense once the hyperscale giants standardize a bit.

Bottom line: AI workloads may wind up being even more hybrid than cloud computing.
