Physical AI and the foundation models that go with it will be a recurring theme across enterprises now that Nvidia has laid out its Cosmos world foundation models.

Speaking at CES 2025, Nvidia CEO Jensen Huang launched the company's own physical AI models with the aim of popularizing use cases across industries and robotics. Huang said Cosmos is needed to jump-start the next generation of foundation models. After all, training world foundation models (WFMs) is expensive.

"The ChatGPT moment for robotics is coming. Like large language models, world foundation models are fundamental to advancing robot and AV development," said Huang.

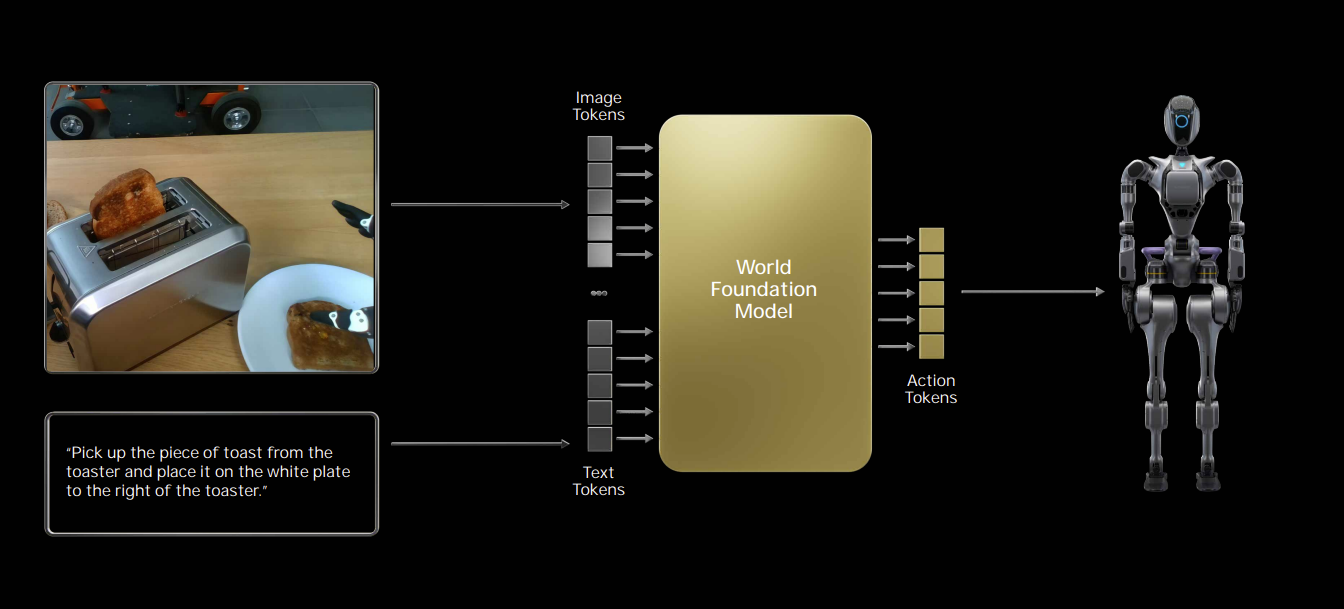

WFMs will use data, text, images, video and movement to generate and simulate virtual worlds that accurately model environments and physical interactions.

Cosmos models have been trained on 9,000 trillion tokens from 20 million hours of real-world human interactions, environment, industrial, robotics and driving data, said Huang. WFMs will usher in a new era where AI can "perceive, reason, plan and act."

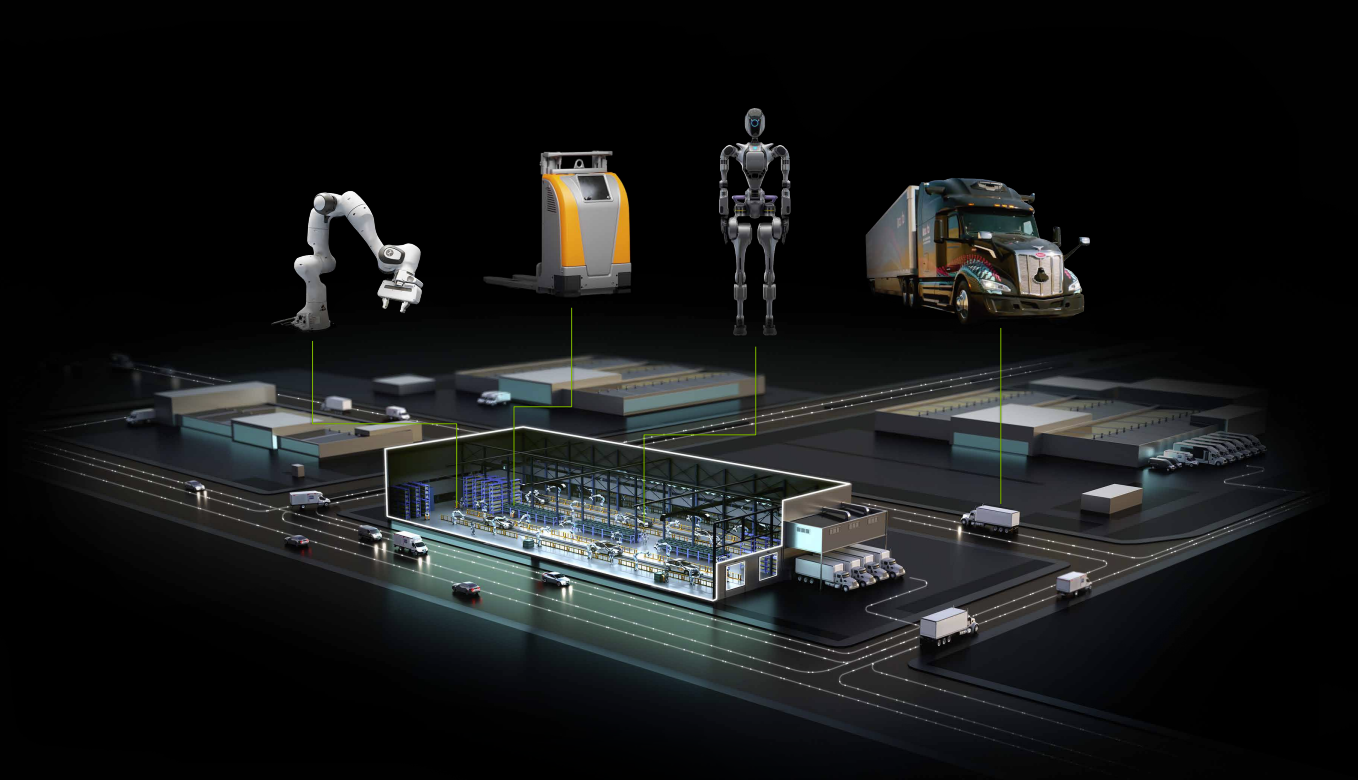

The play for physical AI is robotics and autonomous vehicles, said Huang. "There are no limitations to robots. It could very well be the largest computer industry ever," said Huang. "There's a very serious population and workforce situation around the world. The workforce population is declining, and in some manufacturing countries it's fairly significant. It's a strategic imperative for some countries to make sure that robotics is stood up. Humanoid robots will surprise everybody with how incredibly good they are."

Nvidia's launch of WFMs may jump-start physical AI efforts that have been percolating. Consider:

- Archetype AI raised $13 million in seed funding to develop its WFM called Newton. Archetype AI's funding round was led by Venrock and included Amazon Industrial Innovation Fund, Hitachi Ventures, Buckley Ventures and Plug and Play Ventures. Archetype AI’s primers on physical AI are worth a read.

- Google DeepMind is putting together a team to create generative AI models to simulate the world.

- Odyssey raised an $18 million Series A round to train generative AI models for film, gaming and world. The funding is initially being used to capture data from the real world to train models.

- AI on Demand in Europe is also working on physical AI models. "What distinguishes Physical AI systems is their direct interaction with the physical world, contrasting with other AI types, e.g., financial recommendation systems (where AI is between the human and a database); chatbots (where AI interacts with the human via Internet); or AI chess-players (where a human moves the chess pieces and reports the chess board state to the AI algorithm)," said AI on Demand.

There are other efforts to develop WFMs and physical AI use cases. Huang obviously thinks physical AI is a huge market across multiple industries. It's also likely that the data available from Internet of Things (IoT) sensors will come in handy.

Stanford University and Robotics at Google have compiled datasets on physical concepts and properties.

Accenture is also all-in on the physical AI and WFM effort. In Accenture's Technology Vision report for 2025, Karthik Narain, Group Chief Executive of Technology and CTO at Accenture, said:

"We are reaching a watershed moment as the power of generative AI is applied to physics and the field of robotics. Gone are the days of narrow, task-specific robots that require specialized training. A new generation of highly tuned robots with real world autonomy that can interact with anyone, take on a wide variety of tasks, and reason about the world around them will expand robotic use cases and domains dramatically."

What remains to be seen is how physical AI will intersect with WFMs. At AWS re:Invent 2024, a few executives noted that IoT is cool again and will fuel industrial AI use cases.

Physical AI also sounds like it'll rhyme with active inference, a theme at Constellation Research's Connected Enterprise 2024. Denise Holt, Founder and CEO of AIX Global Media, said active inference will "create digital twins of everything."

Holt said the agents that power active inference leverage sensor data from IoT, cameras and robotics and measure it against a real-world model. "We will have smart cities, autonomous systems, improving the efficiency of everything, global supply chains, personal and critical systems," said Holt.

Rest assured that physical AI in practice will combine multiple disciplines and technologies. Get up to speed because your board may be asking about physical AI soon.