Opaque Systems, a software startup focused on confidential computing, has named Aaron Fulkerson as CEO. Fulkerson most recently ran ServiceNow's Impact unit and was founder and CEO of MindTouch, which was acquired by Nice Systems.

With the addition of Fulkerson, Dr. Rishabh Poddar, co-founder and CEO until recently, will become CTO. Opaque Systems raised $22 million in Series A funding in 2022, bringing its total financing to $31.6 million. Investors include Walden Catalyst Partners, Storm Ventures, Thomvest Ventures, Intel Capital, Race Capital, The House Fund, and FactoryHQ.

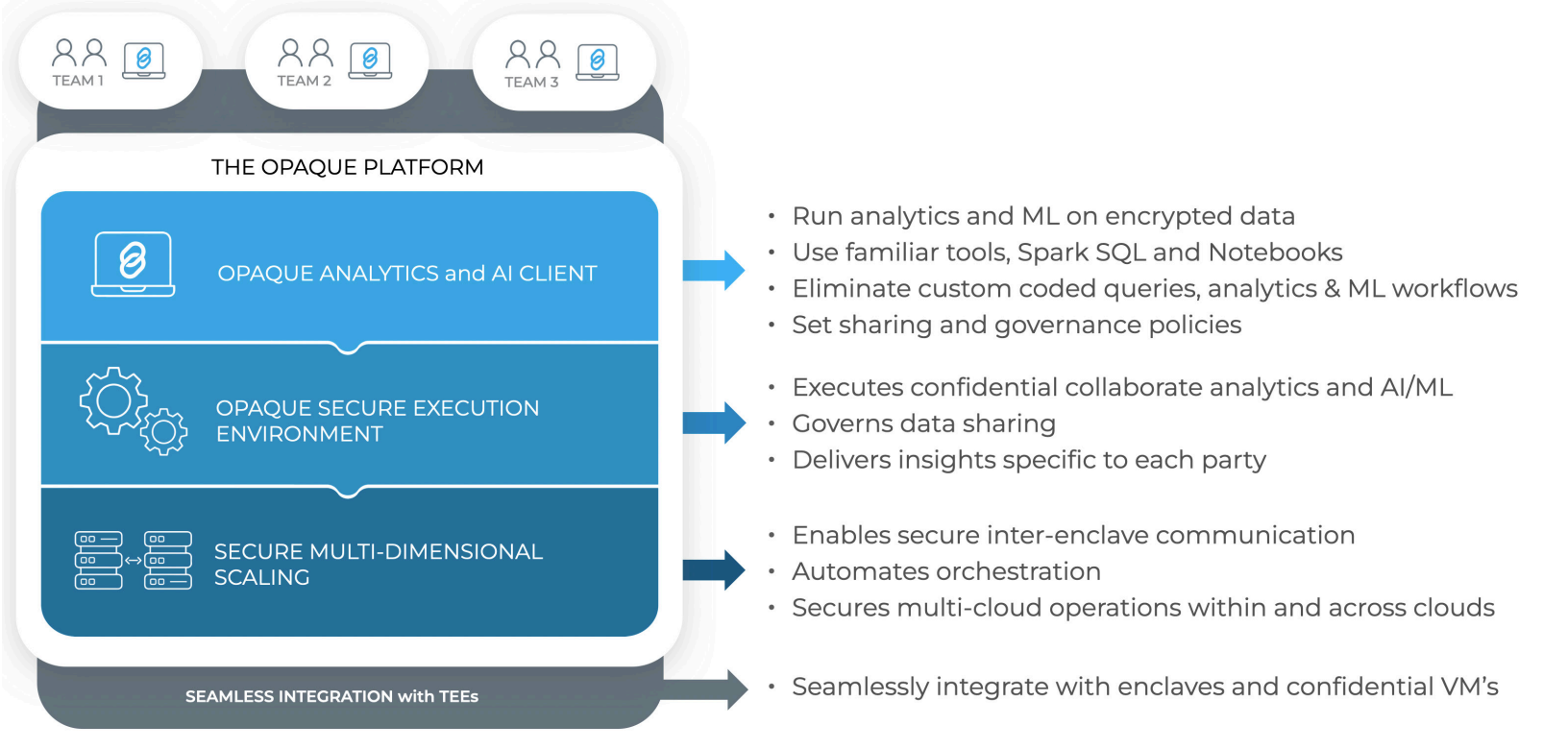

Opaque Systems offers an analytics and AI platform built for confidential computing that lets multiple parties share and analyze data while keeping it confidential. The company provides Data Clean Rooms as well as OpaquePrompts, a privacy layer for large language models, to multiple industries including manufacturing, finance, healthcare and insurance.

I caught up with Fulkerson and Poddar to talk about privacy, data management and generative AI, as well as the need for a platform-agnostic privacy technology provider. Here are the takeaways from our conversation.

What brought you to Opaque Systems? At ServiceNow, Fulkerson ran the Impact unit, which delivers personalized recommendations, training and on-demand experts to help customers get more value from ServiceNow's platform. That personalization required data and model training, both of which were often complicated by data privacy and data sovereignty concerns. At the same time, generative AI and large language models (LLMs) surged. "For many organizations there's some layer of privacy over the top of an LLM that allows an organization to use it without exposing proprietary data or sensitive information," said Fulkerson. "There's going to have to be a privacy wrapper and privacy enhancing technologies. What Opaque Systems has done is built a product with verifiable trust in the DNA of every digital interaction. It was impossible to pass up the opportunity."

The technology. Opaque Systems has a specialized software stack that provides encryption as well as digital signatures at the hardware level. Intel pioneered confidential computing hardware, which is now available from all the major cloud vendors, so Opaque Systems can focus on the software.

Although Opaque Systems got started with data clean rooms, Fulkerson said the vision for the company is broader. Data clean rooms are available from multiple vendors, but most of these offerings are tied to a specific platform. Opaque Systems instead offers a privacy layer that spans multiple data sets and systems. "Opaque is allowing companies and organizations to encrypt data sets, put it into an Opaque room with multiple other encrypted data sets and then you can run data processing jobs while maintaining encryption," explained Fulkerson. "Encryption in transit is typical but we have encryption at the time of processing." He added that verifiable trust technology allows analytics, machine learning models and other applications to be built on top of encrypted data.

Generative AI use cases. Poddar said generative AI is in Opaque Systems' wheelhouse because it is fundamentally about running AI and analytics on sensitive data. "LLMs are an emergent and particularly exciting class of AI applications evolving as we speak, but we've seen a bunch of data privacy issues," said Poddar. "Enterprises will have to define and figure out their privacy strategies around LLMs."

"If you're training a model, you don't want the model to remember your confidential data," said Poddar. "You want to guarantee your data remains protected and encrypted while still allowing these generative AI workloads to be run on that data. LLMs were a natural extension of our core capabilities around confidential AI and analytics."

Poddar and Fulkerson added that enterprise LLM use cases are exploratory for now, but that this will change quickly and privacy issues will need to be addressed.

Privacy-first deployments. Fulkerson said the shift from exploratory generative AI use cases to production will happen within the next year. "Generative AI is going to be the killer app and we have to be able to provide a data privacy wrapper."

Fulkerson said Opaque Systems has a bevy of financial services customers using Opaque's analytics. "We're working with financial institutions that have data sets that can't be shared across business units because of regulatory requirements or different geographies even within their own organizations," he said. "If you have a retail bank, wealth management and lender you can't share data across them. If you're able to encrypt those first party data sets, you can use analytics to solve problems like fraud detection and anti-money laundering."

Customer 360 efforts will also become privacy-first, driven by regulations as well as the need to enrich profiles with encrypted data, said Fulkerson.

Poddar added that LLMs will become part of the AI pipeline in enterprises and privacy ops will become a crucial component. "People will need to think about privacy and security issues from the ground up as they adopt this technology," he said.

The state of privacy. Fulkerson said today's software paradigm for privacy revolves around contracts, and that approach won't scale well with LLMs and generative AI applications. "Whenever you use software in the cloud or any kind of service, you trust that the vendor will be good custodians of your data. That's a problem," said Fulkerson. "You could be working with trusted third parties vulnerable to attacks. The problem then gets compounded in the context of cloud and collaboration."

In other words, trust needs to be verifiable and cryptographically assured so data can be shared in a way that solves problems, said Fulkerson.

Poddar added that auditing and following the data trail will also become critical in the age of generative AI. "We guarantee at all times that your data is used only in the way you permit and that it is verifiable by you, auditable by you, and you retain control," said Poddar.