Nvidia's data center business continued to surge, and the company keeps raising its revenue guidance at a rapid clip.

The company reported fourth-quarter revenue of $22.1 billion, up 265% from a year ago, with GAAP earnings of $4.93 a share. Non-GAAP earnings for the fourth quarter were $5.16 a share.

Wall Street was looking for Nvidia to report fourth-quarter non-GAAP earnings of $4.64 a share on revenue of $20.62 billion.

For fiscal 2024, Nvidia reported earnings of $11.93 a share on revenue of $60.9 billion.

That Nvidia is raking in dough isn't surprising, given that cloud providers and Meta have said they are spending heavily on GPUs to train models. Constellation Research CEO Ray Wang said "we haven't hit peak Nvidia yet."

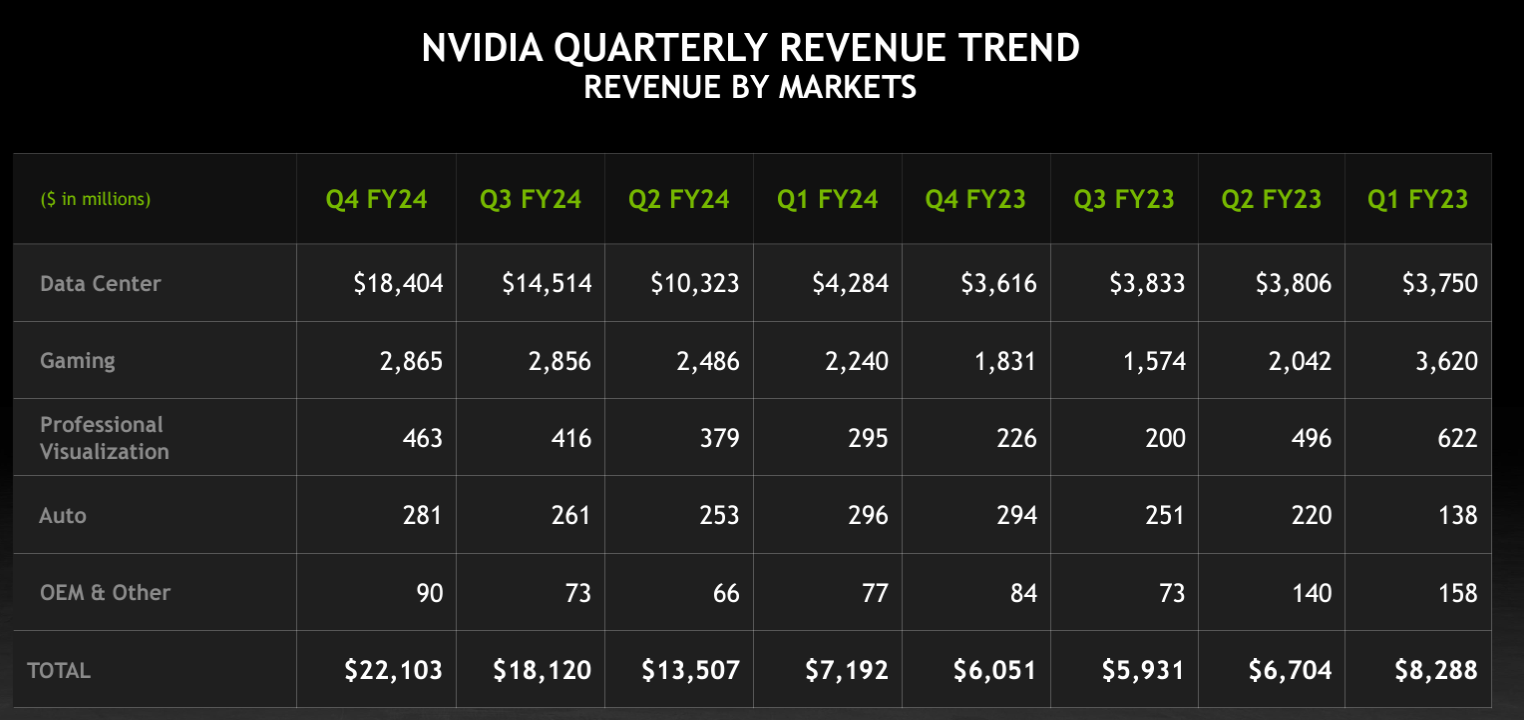

By business unit, Nvidia's quarterly revenue trend is almost comical. The company's fourth-quarter data center revenue was $18.4 billion, up from $3.62 billion a year ago.

As for Nvidia's outlook, the company projected first-quarter revenue of $24 billion with GAAP gross margins of 76.3%.

To date, most of the spoils from the generative AI boom have gone to Nvidia, with Supermicro being an exception.

CEO Jensen Huang said generative AI and accelerated computing have hit an inflection point with surging demand. "Our Data Center platform is powered by increasingly diverse drivers — demand for data processing, training and inference from large cloud-service providers and GPU-specialized ones, as well as from enterprise software and consumer internet companies," he said.

Nvidia CFO Colette Kress said:

"In the fourth quarter, large cloud providers represented more than half of our Data Center revenue, supporting both internal workloads and external customers. Strong demand was driven by enterprise software and consumer internet applications, and multiple industry verticals including automotive, financial services, and healthcare. Customers across industry verticals access Nvidia AI infrastructure both through the cloud and on-premises."

Kress added that sales to China declined significantly. Gross margins improved due to lower component costs. Nvidia's cash, cash equivalents and marketable securities were $26 billion, nearly double the year-ago level. Regarding inventory, Kress added:

"Inventory was $5.3 billion with days sales of inventory (DSI) of 90. Purchase commitments and obligations for inventory and manufacturing capacity were $16.1 billion, down sequentially due to shortening lead times for certain components. Prepaid supply agreements were $5.0 billion. Other non-inventory purchase obligations were $4.6 billion, which includes $3.5 billion of multi-year cloud service agreements, largely to support our research and development efforts."

Kress said data center revenue was driven by the Nvidia Hopper GPU computing platform along with InfiniBand end-to-end networking. Compute revenue grew more than 5x and networking revenue tripled from last year, she said. "We are delighted that the supply of Hopper architecture products is improving and demand remains very strong. We expect our next generation products to be supply constrained as demand far exceeds supply," said Kress.

Conference call highlights

Key items from the conference call with analysts:

Nvidia's networking business is at a $13 billion annualized revenue run rate. Spectrum-X Ethernet offers adaptive routing, congestion control, noise isolation and traffic isolation. Huang said Spectrum-X Ethernet will be an AI-optimized system and InfiniBand will be an AI-dedicated networking option.

Huang said there's strong demand across industries but cited healthcare and financial services as well as sovereign AI as key areas.

He said:

"Sovereign AI has to do with the fact that the language, the knowledge, the history, the culture of each region, are different. And they own their own data. They would like to use their data, train it to create their own digital intelligence and provision it to harness that raw material themselves. It belongs to them."

He added that the generative AI boom experienced in the US will be replicated in multiple countries.

Nvidia's supply constraints are improving. "Our supply chain is just doing an incredible job for us," said Huang. "The Nvidia Hopper GPU has 35,000 parts. It weighs 70 pounds. These things are really complicated. We call it an AI supercomputer for good reason."

Demand will outstrip supply. "We expect the demand will continue to be stronger than supply provides, and we'll do our best," said Huang. "The cycle times are improving. Whenever we have new products, it ramps from zero to a very large number. And you can't do that overnight."

Allocation depends on how soon customers can launch services and infrastructure. Kress said allocation is a moving process and Nvidia looks to get inventory to customers right away. Huang said cloud service providers have a clear view of Nvidia's roadmap and visibility into what products they can buy and when. "We allocate fairly and to avoid allocating too early. Why allocate before a data center is ready?" said Huang. "We bring our partners customers. We are looking for opportunities to connect partners and users all the time."

Nvidia will work within U.S. government export restrictions to reset its product offering for China and is doing its best to compete. "Hopefully we can go there and compete for business," said Huang, who said the company is sampling products with customers in China.

Software is as important as hardware. "Software is fundamentally necessary for accelerated computing. If you don't have software, you can't open new markets or enable new applications," said Huang, who said Nvidia has teams working with a bevy of enterprise software companies.

Huang said Nvidia will handle patching and optimization for enterprise software companies. "Think of Nvidia AI Enterprise as a runtime like an operating system. It's an operating system for artificial intelligence," said Huang.

Constellation Research's take

Wang said there's no reason why Nvidia can't keep rolling. He said:

"This is the most important stock in the world right now and a barometer of AI health. Four years ago we talked about Nvidia hitting a $1 trillion market cap and $2 trillion by 2025. We are in the age of AI and Nvidia is king. This is real demand and it's growing. We haven't hit peak Nvidia nor peak AI and we'll see software as the second wave of AI in about 6 months to a year."

Constellation Research analyst Dion Hinchcliffe said Nvidia's moat is also about software. Hinchcliffe said:

"Amid all the hoopla about Nvidia's chip prowess, what most observers still miss is that Nvidia has carefully cultivated and strategically wielded its CUDA (Compute Unified Device Architecture). CUDA is the unique software stack that has won the hearts and minds of next-gen compute devs in gaming, HPC, and now AI.

CUDA has become the key competitive weapon that connects third-party apps to Nvidia GPUs. It’s the magic handshake that enables AI algorithms to work effortlessly with the massive compute power of Nvidia's modern chip architectures.

But CUDA isn’t just an ordinary piece of software. It's a closed-source, low-level API that optimally wraps a powerful software stack around Nvidia’s GPUs. The result has created an ecosystem for parallel computing that's potent while also carefully keeping devs captive due to their code's dependency on it. Even the most formidable competitors such as AMD and Intel have struggled with only minor success as CUDA has become widely adopted in various industries like HPC, AI, and deep learning.

I project that we'll continue to see Nvidia's lead grow as it continues to corral devs with the success of CUDA and keeps competitors at bay as long as the API remains relatively non-portable."