Nvidia reported a better-than-expected third quarter, raised its outlook and said that Blackwell shipments will begin in the fourth quarter. Data center revenue in the third quarter was up 112% from a year ago.

Colette Kress, CFO of Nvidia, said:

"We completed a successful mask change for Blackwell, our next Data Center architecture, that improved production yields. Blackwell production shipments are scheduled to begin in the fourth quarter of fiscal 2025 and will continue to ramp into fiscal 2026. We will be shipping both Hopper and Blackwell systems in the fourth quarter of fiscal 2025 and beyond. Both Hopper and Blackwell systems have certain supply constraints, and the demand for Blackwell is expected to exceed supply for several quarters in fiscal 2026."

The company reported third-quarter net income of $19.3 billion, or 78 cents a share, on revenue of $35.08 billion, up 94% from a year ago. Non-GAAP earnings in the quarter were 81 cents a share.

Wall Street was looking for third quarter earnings of 75 cents a share on revenue of $33.14 billion.

Jensen Huang, CEO of Nvidia, said "demand for Hopper and anticipation for Blackwell — in full production — are incredible as foundation model makers scale pretraining, post-training and inference."

As for the outlook, Nvidia said fourth quarter revenue will be about $37.5 billion, give or take 2%. Analysts were modeling fourth quarter earnings of 82 cents a share on revenue of $37.03 billion.

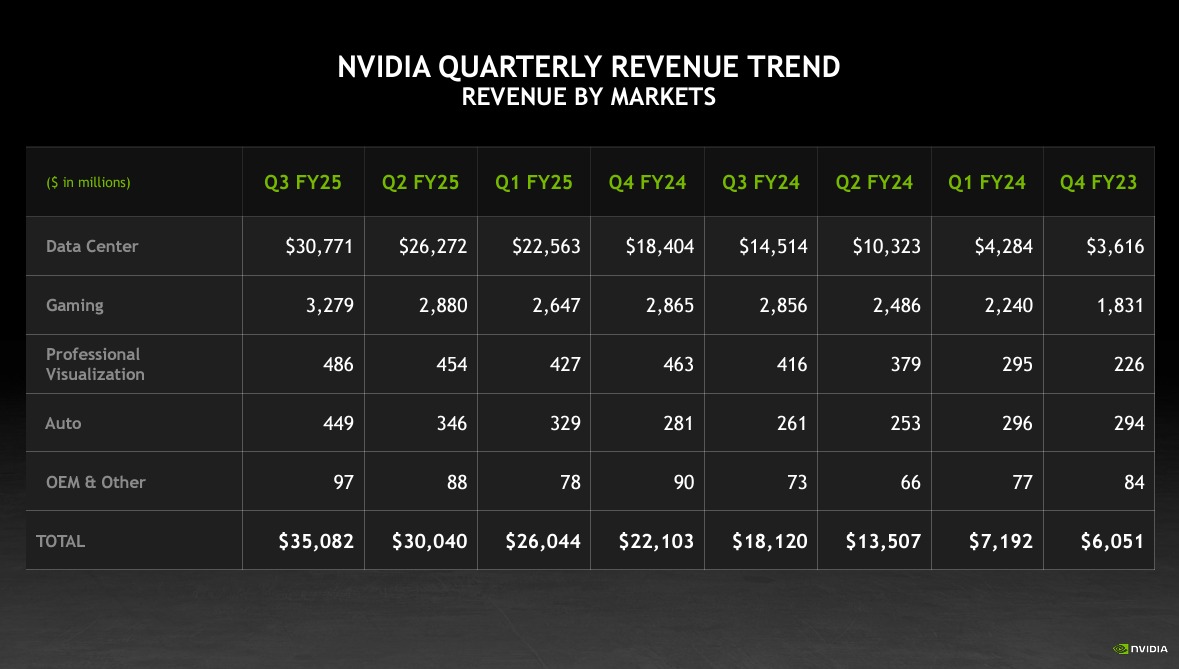

By the numbers for the third quarter:

- Nvidia data center revenue was $30.8 billion, up 112% from a year ago. Growth was helped by multiple cloud service providers launching Nvidia Hopper H200 instances in the quarter.

- Cloud service providers accounted for about 50% of data center revenue, with the remainder coming from consumer Internet companies and enterprises.

- Networking revenue was $3.1 billion, up 20% from a year ago.

- Gaming and PC revenue was $3.33 billion, up 15% from a year ago.

- Professional visualization revenue was $486 million, up 17% from a year ago.

- Automotive and robotics revenue was $449 million, up 72% from a year ago and up 30% from the previous quarter.

More:

- Nvidia's uncanny knack for staying ahead

- Nvidia highlights algorithmic research as it moves to FP4

- Nvidia launches NIM Agent Blueprints, aims for more turnkey genAI use cases

- Nvidia shows H200 systems generally available, highlights Blackwell MLPerf results

- Nvidia outlines roadmap including Rubin GPU platform, new Arm-based CPU Vera

Key points from the Nvidia conference call:

- "Blackwell is now in the hands of all of our major partners, and they are working to bring up their data centers. We are integrating Blackwell systems into the diverse data center configurations of our customers. Blackwell demand is staggering, and we are racing to scale supply to meet the incredible demand customers are placing on us. Customers are gearing up to deploy Blackwell at scale," said Kress.

- Costs matter. "Nvidia Blackwell architecture with NVLink switch enables up to 30x faster inference performance and a new level of inference, scaling, throughput and response time that is excellent for running new reasoning inference," said Kress, who noted that 64 Blackwell GPUs can run the MLPerf GPT-3 training benchmark, a job that takes 256 H100s.

- Nvidia AI Enterprise revenue is on track to more than double from last year, with a large pipeline. Kress put the company's overall software, service and support revenue at an annualized $1.5 billion.

- Kress said China will "remain very competitive" as a market.

- Foundation model scaling is intact. Huang said that "the evidence is that LLMs can continue to scale." However, the industry is finding additional ways to scale beyond pretraining, such as post-training with reinforcement learning and synthetic data. OpenAI's Strawberry (o1) model is an example of a third approach, test-time scaling. "We now have three ways of scaling, and we're seeing all three ways of scaling. And as a result of that the demand for our infrastructures is really great," said Huang.

- Huang shot down concerns about the Blackwell ramp and reports of overheating in data centers. He also said that worries about an industry digestion period in spending are overblown. Huang said:

"I believe that there will be no digestion until we modernize a trillion dollars of data centers."

Constellation Research analyst Holger Mueller said:

"Nvidia continues to let the good times roll, almost doubling its revenue compared to a year ago. To show the scale of the financial acceleration: Nvidia's quarterly net income of $19.3B is more than Nvidia's nine-month total earnings of the last fiscal year ($17.5 billion). That is unheard-of growth and acceleration. Questions were handled well by Jensen Huang and team, especially on the critical supply chain side. Should the company be able to deliver, next quarter's growth will also be an easy exercise. Speaking about the other divisions: the long-announced and even longer-expected growth spurt for automotive may have arrived, with 30% revenue growth. Automotive revenue isn't meaningful, but still a positive sign."