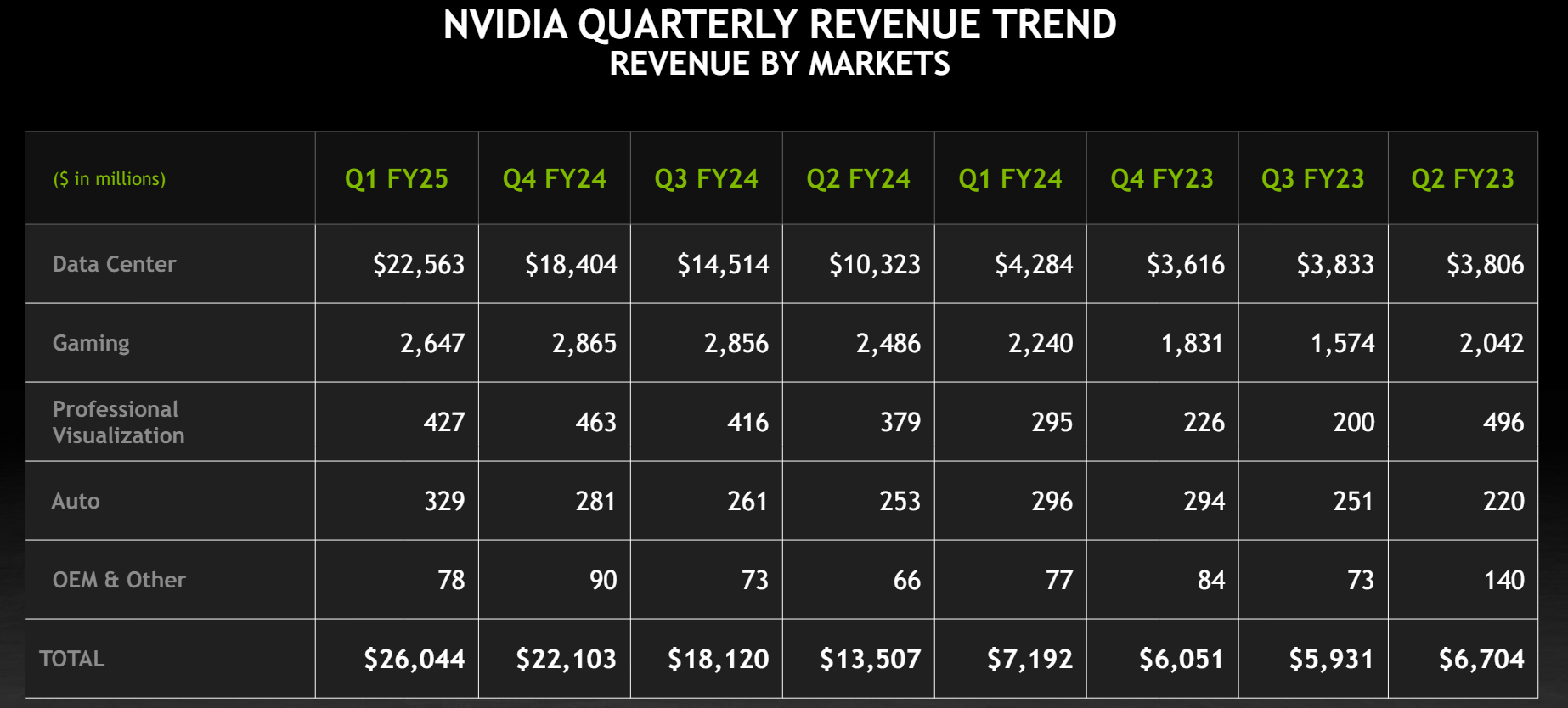

Nvidia reported first quarter sales growth of 262% from a year ago, posted record quarterly data center revenue and announced a 10-for-1 stock split effective June 7.

The company reported first quarter earnings of $5.98 a share on revenue of $26 billion. Non-GAAP earnings were $6.12 a share. Wall Street was expecting Nvidia to report first quarter earnings of $5.54 a share on revenue of $24.6 billion.

CEO Jensen Huang said the AI factory upgrade cycle has begun. He said:

"Our data center growth was fueled by strong and accelerating demand for generative AI training and inference on the Hopper platform. Beyond cloud service providers, generative AI has expanded to consumer internet companies, and enterprise, sovereign AI, automotive and healthcare customers, creating multiple multibillion-dollar vertical markets."

During the quarter, Nvidia lined up a bevy of partnerships, including one with Dell Technologies that advances the AI factory concept. Huang said Dell Technologies' AI factory effort will be the largest go-to-market partnership the GPU maker has. "We have to go modernize a trillion dollars of the world's data centers," said Huang.

- Nvidia acquires Run.ai for GPU workload orchestration

- Nvidia Huang lays out big picture: Blackwell GPU platform, NVLink Switch Chip, software, genAI, simulation, ecosystem

- Nvidia today all about bigger GPUs; tomorrow it's software, NIM, AI Enterprise

As for the outlook, Nvidia projected second quarter revenue of $28 billion with non-GAAP gross margins of 75.5%, an outlook that indicates Nvidia has pricing power.
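For readers who want to check the arithmetic, the following is a minimal sketch that uses only the rounded revenue figures quoted above ($26 billion reported, $24.6 billion expected, $28 billion projected). The helper `pct_change` and the variable names are illustrative, and the results are approximate because the inputs are rounded.

```python
def pct_change(new: float, old: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100.0


# Rounded figures as quoted in the article, in billions of dollars.
q1_revenue_bn = 26.0     # reported first quarter revenue
q1_consensus_bn = 24.6   # Wall Street revenue estimate for the first quarter
q2_guidance_bn = 28.0    # projected second quarter revenue

# How far reported revenue landed above the consensus estimate.
revenue_beat_pct = pct_change(q1_revenue_bn, q1_consensus_bn)

# Sequential growth implied by the second quarter outlook.
implied_qoq_pct = pct_change(q2_guidance_bn, q1_revenue_bn)

print(f"Q1 revenue vs. consensus: {revenue_beat_pct:+.1f}%")
print(f"Q2 outlook vs. Q1 revenue: {implied_qoq_pct:+.1f}%")
```

On these rounded inputs, revenue came in roughly 5.7% above expectations and the outlook implies sequential growth of about 7.7%.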

By business unit, data center revenue surged 23% from the previous quarter and 427% from a year ago. Gaming and AI PC revenue in the first quarter was $2.6 billion, up 18% from a year ago. Professional visualization revenue in the first quarter was up 45% from a year ago and automotive and robotics sales were up 11%.

In prepared remarks, CFO Colette Kress said:

"Data Center compute revenue was $19.4 billion, up 478% from a year ago and up 29% sequentially. These increases reflect higher shipments of the NVIDIA Hopper GPU computing platform used for training and inferencing with large language models, recommendation engines, and generative AI applications. Networking revenue was $3.2 billion, up 242% from a year ago on strong growth of InfiniBand end-to-end solutions, and down 5% sequentially due to the timing of supply. Strong sequential Data Center growth was driven by all customer types, led by Enterprise and Consumer Internet companies. Large cloud providers continued to drive strong growth as they deploy and ramp NVIDIA AI infrastructure at scale, representing mid-40% of our Data Center revenue."

Constellation Research's take and conference call takeaways

Constellation Research analyst Holger Mueller said:

"Nvidia had another blowout quarter with surreal YoY comparisons. If you want to see the AI boom in a financial statement – look up the Nvidia earnings. But all things come to an end – Nvidia is only guiding to <10% QoQ growth, which is half of this quarter's QoQ growth. The question is what is slowing Nvidia down – demand or supply? It could also be cloud vendors holding their CAPEX spend in anticipation of Blackwell. One thing is clear for Nvidia to be Nvidia – it needs Blackwell to be a success."

Key items from the conference call include:

- Inferencing is in the mid-40s as a percent of data center revenue.

- Nvidia is speaking to total cost of ownership, a topic AMD is also actively discussing. Kress said: "Training and inferencing AI on NVIDIA CUDA is driving meaningful acceleration in cloud rental revenue growth, delivering an immediate and strong return on cloud providers' investment. For every $1 spent on NVIDIA AI infrastructure, cloud providers have an opportunity to earn $5 in GPU instance hosting revenue over four years."

- Those cloud returns also pay off for end customers. Kress said cloud rentals remain the lowest-cost way to train models and run inference workloads.

- Enterprises are showing strong demand with Meta and Tesla cited as key customers for Nvidia infrastructure.

- Sovereign AI is expected to drive revenue in the "high single-digit billions this year."

- H200 was sampled in the first quarter with shipments in the second, and OpenAI got the first system. On Blackwell, Huang said production shipments will ramp in the third quarter with data centers stood up in the fourth quarter. "We will see a lot of Blackwell revenue this year," said Huang.

- Kress again cited cost benefits with Nvidia HGX H200 servers: "For example, using Llama 3 with 70 billion parameters, a single NVIDIA HGX H200 server can deliver 24,000 tokens per second, supporting more than 2,400 users at the same time. That means for every $1 spent on NVIDIA HGX H200 servers at current prices per token, an API provider serving Llama 3 tokens can generate $7 in revenue over four years."

- Grace Hopper Superchip is shipping in volume.

- Blackwell systems are backward compatible, so the transition from H100 to H200 to Blackwell will be seamless. That said, supply will remain an issue. Huang said: "We expect demand to outstrip supply for some time as we now transition to H200, as we transition to Blackwell. Everybody is anxious to get their infrastructure online. And the reason for that is because they're saving money and making money, and they would like to do that as soon as possible."

- We're 5% into the AI data center buildout. Huang said: "We're in a one-year rhythm. And we want our customers to see our roadmap for as far as they like, but they're early in their build-out anyways and so they had to just keep on building. There's going to be a whole bunch of chips coming at them, and they just got to keep on building and just, if you will, performance average your way into it. So that's the smart thing to do. They need to make money today. They want to save money today. And time is really, really valuable to them."

- Ethernet networking is a growth market for Nvidia. Huang said: "For companies that want the ultimate performance, we have InfiniBand computing fabric. InfiniBand is a computing fabric, Ethernet is a network. And InfiniBand, over the years, started out as a computing fabric, became a better and better network. Ethernet is a network and with Spectrum-X, we're going to make it a much better computing fabric. And we're committed -- fully committed to all three links, NVLink computing fabric for single computing domain to InfiniBand computing fabric, to Ethernet networking computing fabric. And so we're going to take all three of them forward at a very fast clip."
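
Kress's return-on-investment figures in the takeaways above can be broken down with simple arithmetic. The sketch below is illustrative only: the `ReturnClaim` class and its field names are hypothetical, and spreading revenue evenly across the four years is an assumption made here for illustration, not something Nvidia stated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReturnClaim:
    """A 'revenue per $1 spent' claim as quoted on the call."""
    label: str
    revenue_per_dollar: float  # revenue earned per $1 of infrastructure spend
    years: int                 # period over which that revenue is earned

    def per_year(self) -> float:
        # Assumption: revenue is spread evenly over the period.
        return self.revenue_per_dollar / self.years


# Throughput figures from the HGX H200 example quoted by Kress.
tokens_per_second = 24_000
concurrent_users = 2_400
tokens_per_user = tokens_per_second / concurrent_users

claims = [
    ReturnClaim("Cloud GPU instance hosting", 5.0, 4),
    ReturnClaim("HGX H200 API token serving", 7.0, 4),
]

print(f"Throughput per user: {tokens_per_user:.0f} tokens per second")
for claim in claims:
    print(f"{claim.label}: ${claim.per_year():.2f} per $1 spent per year")
```

Under that even-spread assumption, the quoted figures work out to about 10 tokens per second per concurrent user, and to $1.25 and $1.75 of annual revenue per dollar spent, respectively.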