Intel said its Gaudi 3 AI accelerator will be available in the second quarter with systems from Dell Technologies, HPE, Lenovo and Supermicro on tap. Intel, along with AMD, is hoping to give Nvidia some competition.

The chipmaker's Gaudi 3 launch, announced at the Intel Vision conference, is the linchpin of Intel's plans to garner AI training and inference workloads and take share from Nvidia.

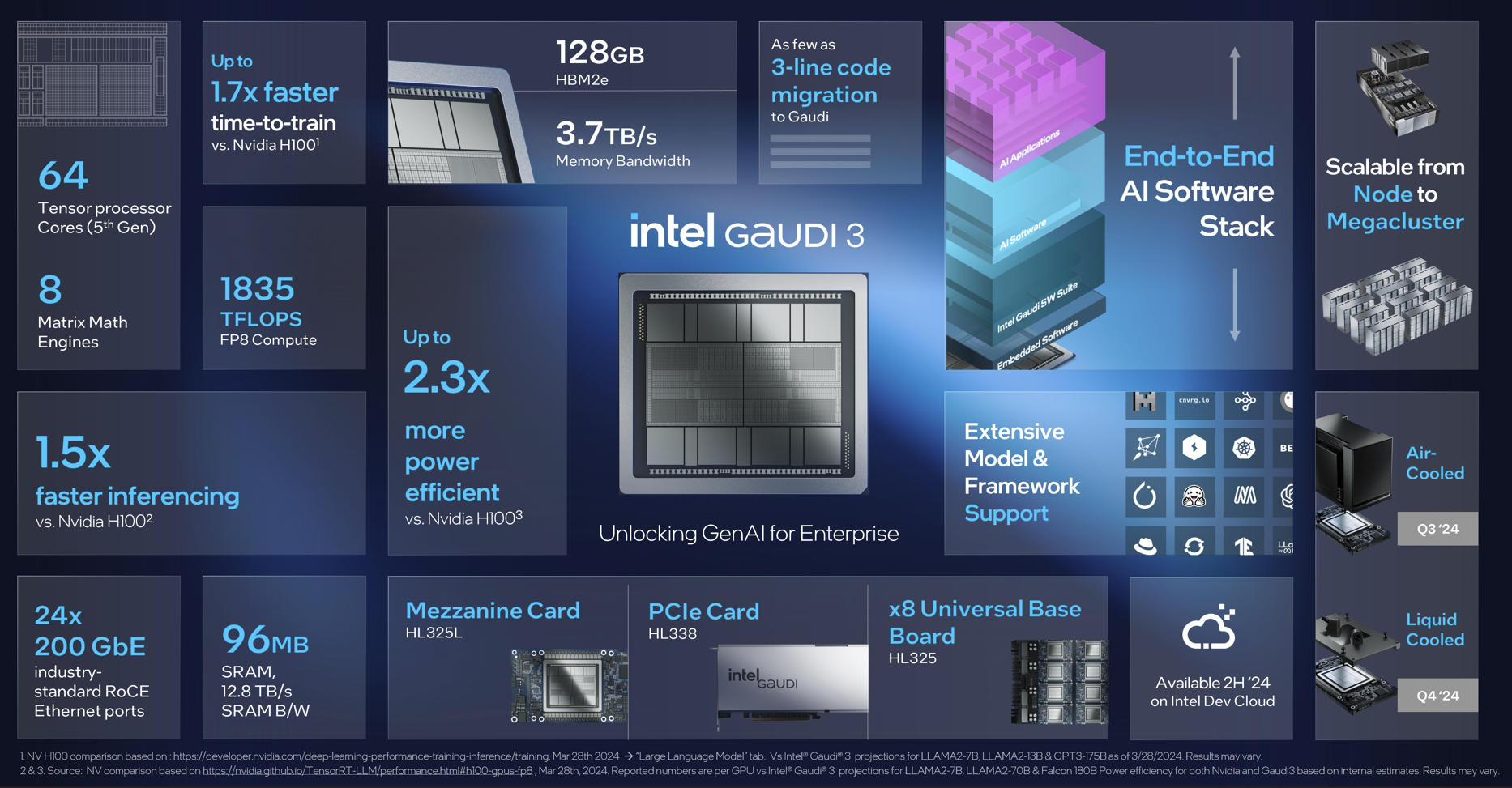

According to Intel, Gaudi 3 delivers 50% better inference performance on average and 40% better power efficiency on average than Nvidia's H100, at a lower cost. It's worth noting that Nvidia has already outlined its Blackwell GPUs and accelerators, which leapfrog H100 performance.

Nevertheless, model training will be a balancing act between speed and compute costs. Enterprises will use a bevy of options for AI workloads including Nvidia, AMD and Intel as well as in-house offerings from AWS with Trainium and Inferentia and Google Cloud TPUs.

- Nvidia Huang lays out big picture: Blackwell GPU platform, NVLink Switch Chip, software, genAI, simulation, ecosystem

- Will generative AI make enterprise data centers cool again?

- AWS presses custom silicon edge with Graviton4, Trainium2 and Inferentia2

- Intel's AI everywhere strategy rides on AI PCs, edge, Xeon CPUs for model training, Gaudi3 in 2024

- AMD Q4 solid

Key points about Gaudi 3:

- Intel Gaudi 3 is manufactured on a 5 nm process and uses its engines in parallel for deep learning compute and scale.

- Gaudi 3 has a compute engine of 64 AI-custom and programmable tensor processor cores and eight matrix multiplication engines.

- Memory boost for generative AI processing, with 128 GB of HBM2e memory and 3.7 TB/s of memory bandwidth.

- Twenty-four 200 Gb Ethernet ports integrated into each Gaudi 3 for networking speed.

- PyTorch framework integration and optimized Hugging Face models.

- Gaudi 3 PCIe add-in cards.
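
For a rough sense of scale on the networking point above, here is a back-of-envelope sketch in Python. It assumes 24 integrated ports running at 200 Gb/s each, which is Intel's published per-port figure. The function name and constants are illustrative only, and the result ignores protocol overhead, so treat it as an upper bound rather than a measured throughput.

```python
# Back-of-envelope aggregate Ethernet bandwidth for a single accelerator.
# Assumption: 24 ports at 200 gigabits per second (Gb/s) each.
PORTS = 24
GBITS_PER_PORT = 200  # assumed per-port line rate, in Gb/s


def aggregate_bandwidth(ports: int, gbits_per_port: int) -> tuple[int, float]:
    """Return (total Gb/s, total GB/s) in one direction, ignoring overhead."""
    total_gbits = ports * gbits_per_port
    total_gbytes = total_gbits / 8  # 8 bits per byte
    return total_gbits, total_gbytes


total_gbits, total_gbytes = aggregate_bandwidth(PORTS, GBITS_PER_PORT)
print(f"{total_gbits} Gb/s (~{total_gbytes:.0f} GB/s) per direction")
```

Under those assumptions the ports add up to 4,800 Gb/s, or about 600 GB/s, in each direction per accelerator.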

To go along with the Gaudi 3 launch, Intel said it will create an open platform for enterprise AI along with SAP, Red Hat, VMware and other companies. It is also working with the Ultra Ethernet Consortium and will launch a series of network interface cards and AI connectivity chiplets.