IBM reported a better-than-expected second quarter fueled by software revenue growth.

Big Blue reported second quarter net income of $1.8 billion, or $1.96 a share, on revenue of $15.8 billion, up 2% from a year ago. Non-GAAP earnings were $2.43 a share.

Wall Street was looking for IBM to report second quarter earnings of $2.17 a share on revenue of $15.62 billion.

As for the outlook, IBM projected annual revenue growth in the mid-single-digit range, with free cash flow topping $12 billion.

By the numbers for the second quarter:

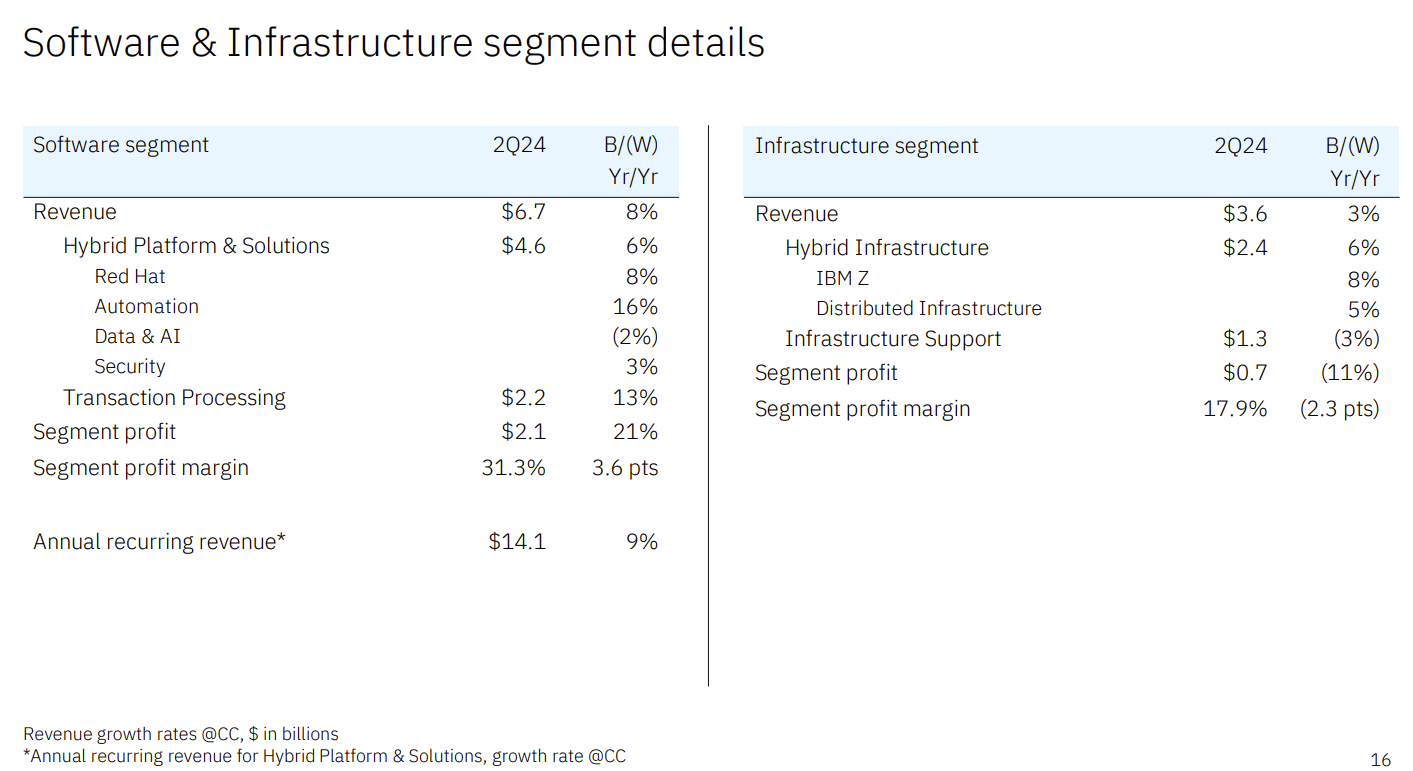

- Software revenue was $6.7 billion, up 7%. Red Hat revenue rose 7% and automation revenue grew 15%, while data and AI revenue fell 3%.

- Consulting revenue was $5.2 billion, down 1% from a year ago.

- Infrastructure revenue was $3.6 billion, up 0.7% from a year ago. IBM Z revenue was up 6%.

IBM CEO Arvind Krishna said the company saw strength in hybrid cloud demand and software.

"Technology spending remains robust as it continues to serve as a key competitive advantage allowing businesses to scale, drive efficiencies and fuel growth. As we stated last quarter, factors such as interest rates and inflation impacted timing of decision making and discretionary spend in consulting. Overall, we remain confident in the positive macro-outlook for technology spending but acknowledge this impact."

Krishna added that watsonx and IBM's generative AI have been infused across the business. He said genAI is being used in consulting, Red Hat and even IBM Z. IBM is also focusing on offering models suited to enterprises.


He said:

"Choosing the right AI model is crucial for success in scaling AI. While large general-purpose models are great for starting on AI use cases, clients are finding that smaller models are essential for cost effective AI strategies. Smaller models are also much easier to customize and tune. IBM's Granite models, ranging from 3 billion to 34 billion parameters and trained on 116 programming languages, consistently achieve top performance for a variety of coding tasks. To put cost in perspective, these fit-for-purpose models can be approximately 90% less expensive than large models."

IBM's generative AI book of business now totals $2 billion, inception to date.