Enterprise leaders can be forgiven for nursing a case of whiplash over the latest large language model (LLM) developments. Almost daily, some advance generates headlines. Instead of chasing every little benchmark, here's a crib sheet of what we're learning from the never-ending game of LLM leapfrog.

Google is hitting its stride

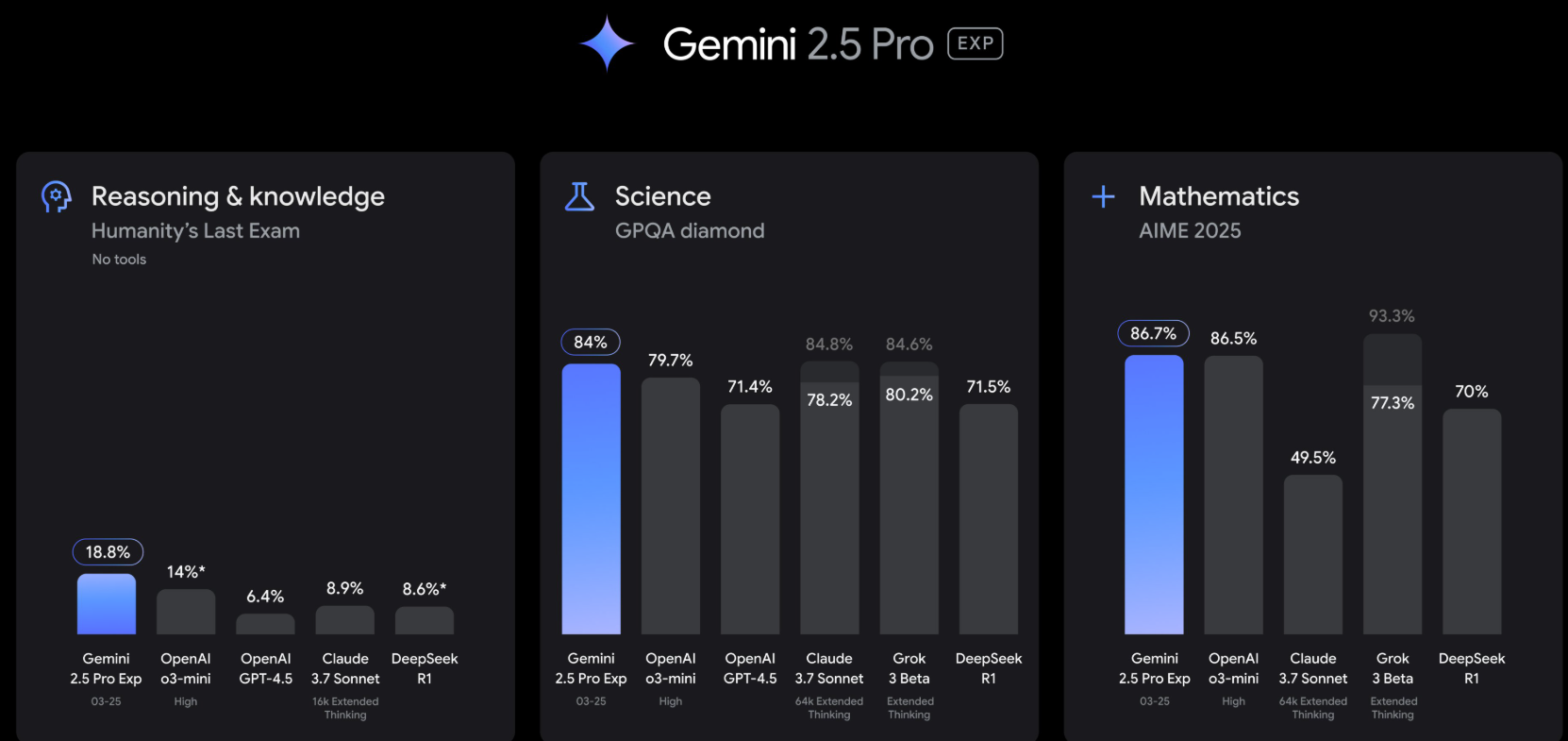

With the launch of Gemini 2.5 Pro, an experimental thinking model that performs well and has been enhanced with post-training, it's clear Google DeepMind is firing on all cylinders.

Let's face it, Google was caught off guard by OpenAI and has been playing catch-up. It's safe to say that Google has now caught up. Gemini 2.5's innovations will be built into future Google models, and rest assured those capabilities will show up at Google Cloud Next in April.

But Google's approach to models goes further than one-offs. I've been testing Google's Deep Research in Gemini Advanced against Deep Research in OpenAI's ChatGPT. Each has a slightly different twist, but both compress research time nicely. Google also has a knack for delighting users: an instant audio podcast summarizing a report, for instance, is a nice touch.

When compared with Anthropic's Claude, Gemini more than holds its own. Simply put, Google is leveraging its strengths when it comes to models.

OpenAI is betting that being more human is the way

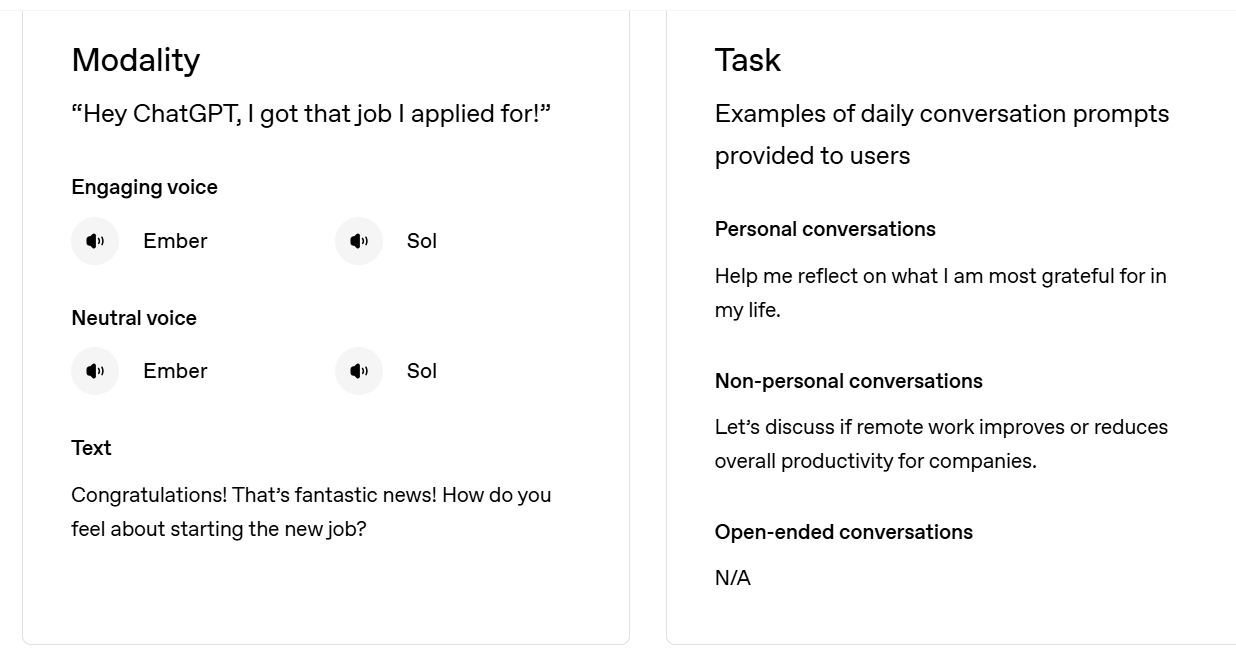

It isn't a Google Gemini launch without an OpenAI launch. OpenAI announced native image generation in ChatGPT hours after Gemini 2.5 Pro debuted. OpenAI had also rolled out ChatGPT voice mode updates to give the model "a more engaging and natural tone."

Those additions fall into the bucket of things that weren't necessarily worth my time, but they obscure a broader theme at OpenAI: The company is leaning into emotional intelligence and models that act more human.

The pivot is notable because OpenAI is betting that models that are more relatable--and perform well--make for a stickier product. Strategically, that bet makes a lot of sense. I'm a ChatGPT subscriber and have seen nothing from rivals that would entice me to drop my $20 monthly subscription.

What remains to be seen is whether subscribing to LLMs is more like streaming, where you pay for more than one service, or is truly zero-sum. I haven't had to answer that question yet because Google's Gemini is bundled with my Pixel 9 purchase for a few more months.

DeepSeek and Alibaba's Qwen are flooding the market with inexpensive and very capable models

In the US, the LLM game is a scrum among OpenAI, Anthropic, Meta's Llama, Google Gemini and a bevy of others. China is roaring back with DeepSeek and Alibaba's Qwen.

- DeepSeek: What CxOs and enterprises need to know

- DeepSeek's real legacy: Shifting the AI conversation to returns, value, edge

- Palantir CTO: Engineering on DeepSeek 'is exquisite'

- China vs. US AI war: Fact, fiction or missing the point?

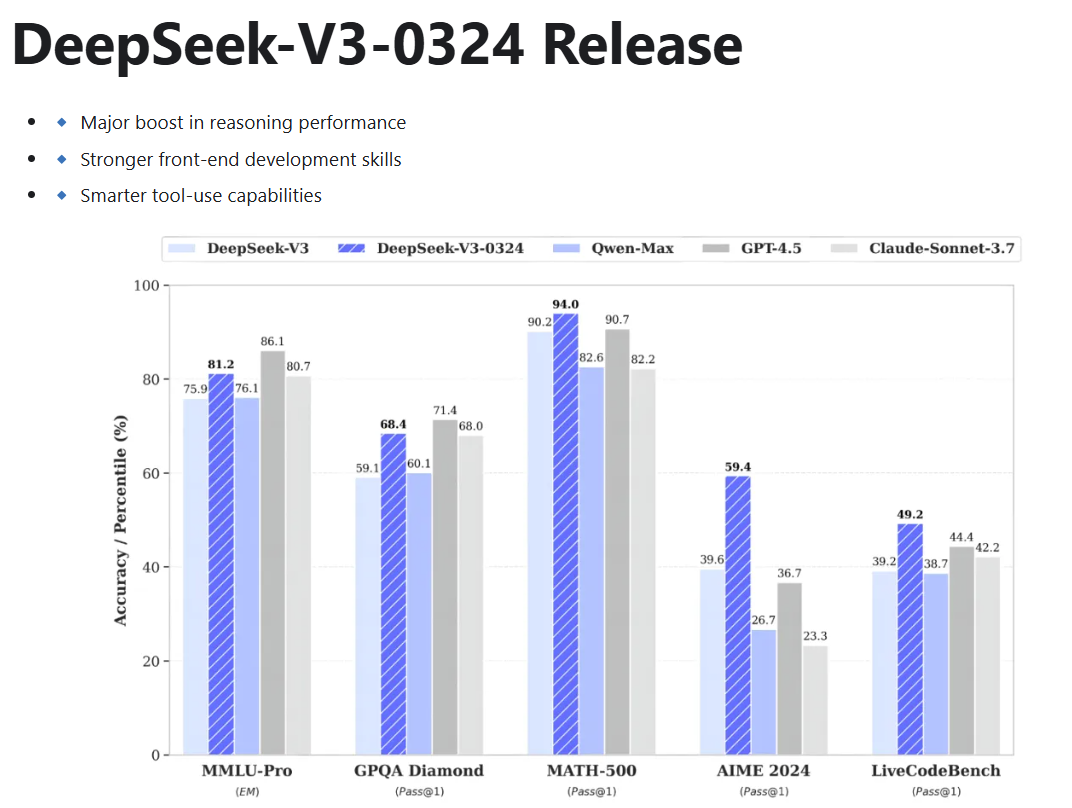

You'd be forgiven for forgetting DeepSeek's launch this week--it was so, like, two days ago. DeepSeek released DeepSeek-V3-0324 under the MIT open source license, and Alibaba released Qwen2.5-VL-32B at about the same time.

DeepSeek and its potential impact on AI infrastructure spending have come up on countless earnings conference calls. Nvidia CEO Jensen Huang gets asked about DeepSeek seemingly every few minutes. Huang's argument is that DeepSeek is additive to the industry and to demand for AI infrastructure.

"This is an extraordinary moment in time. There's a profound and deep misunderstanding of DeepSeek. It's actually a profound and deeply exciting moment, and incredible the world has moved towards reasoning. But that is even then, just the tip of the iceberg," said Huang.

While DeepSeek is China's AI front-runner now, I wouldn't count out Qwen by any stretch, especially with a distribution channel like Alibaba Cloud. What China's champions really did was move the conversation toward reasoning.

This is a game of mindshare

Chasing the daily headlines about the latest model advances is a fool's errand. There will be a new latest-and-greatest advance almost every day.

What this drumbeat of advances really highlights is that foundational models are a game of mindshare. Let's play a game: Which foundational models are we noticing less? The short answer is Anthropic's Claude; the second, less obvious answer is Grok.

Anthropic is clearly more enterprise-focused than OpenAI and is doing interesting things that align more closely with corporate use cases. Via partnerships with Amazon Web Services and Google Cloud, Anthropic is well positioned. In the daily news flow, though, Anthropic announcements, such as Claude now being able to search the web, are likely to be overlooked.

Nevertheless, Anthropic has mindshare where it matters--corporations. Anthropic is valued at $61.5 billion and is playing a slightly different game with its economic index and focused approach.

- Anthropic outlines most popular Claude use cases

- Anthropic CEO Amodei on where LLMs are headed, enterprise use cases, scaling

There's room for only a few consumer AI platforms, and OpenAI is in pole position to threaten Google.

The award for most overlooked model must go to Grok. Not a day goes by without Constellation Research analyst Holger Mueller showing me something impressive that Grok delivered. Grok 3 features DeepSearch, Think and Edit Image. In my tests, Grok 3 is almost as good as, if not better than, the better-known models.

Grok 3 would probably have more mindshare if it weren't overshadowed by Elon Musk's other endeavors.

Enterprise whiplash is an issue

CxOs can waste a lot of time focusing on each new model advance. With models advancing at such a rapid clip, one thing is clear: You'll need an abstraction layer that lets you swap models out. Pick your platforms carefully, since every vendor (Salesforce, ServiceNow, Microsoft and SAP, to name just a few) wants to be your go-to platform for enterprise AI.

Here's where Amazon Web Services' approach with SageMaker and Bedrock makes so much sense. Google Cloud also offers a lot of model choice, as does Microsoft Azure, which is best known for its OpenAI partnership but has diversified nicely. Microsoft launched Researcher and Analyst, two reasoning agents for Microsoft 365 Copilot; Researcher is built on OpenAI's Deep Research model.

You can also expect data platforms such as Snowflake and Databricks to be big players in model choice. Add it up and there's only one mantra for enterprise CxOs: Stay focused and don't get locked into one set of models.
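For readers who want to see what an abstraction layer for swapping models looks like in practice, here's a minimal Python sketch. It assumes every model can be wrapped as a function that takes a prompt and returns text; `ModelRouter`, `register`, `use` and the `vendor_a`/`vendor_b` stubs are hypothetical names for illustration, not any vendor's or platform's API. Production platforms layer much more on top, such as authentication, cost tracking and evaluation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

# A "provider" here is any function that takes a prompt and returns text.
# In practice it would wrap a vendor SDK or cloud endpoint (hypothetical here).
CompletionFn = Callable[[str], str]


@dataclass
class ModelRouter:
    """Hypothetical abstraction layer: apps call the router, never a vendor SDK."""
    providers: Dict[str, CompletionFn] = field(default_factory=dict)
    default: Optional[str] = None

    def register(self, name: str, fn: CompletionFn) -> None:
        # Adding a new model is a registration, not an application rewrite.
        self.providers[name] = fn
        if self.default is None:
            self.default = name  # first registered model becomes the default

    def use(self, name: str) -> None:
        # Swapping the default model is a one-line change.
        if name not in self.providers:
            raise KeyError(f"unknown model: {name}")
        self.default = name

    def complete(self, prompt: str, model: Optional[str] = None) -> str:
        # Callers can pin a specific model or fall back to the default.
        name = model or self.default
        if name is None or name not in self.providers:
            raise KeyError(f"unknown model: {name}")
        return self.providers[name](prompt)


# Stub providers standing in for real vendor clients (placeholders, not real APIs).
def vendor_a(prompt: str) -> str:
    return f"[vendor-a] {prompt}"


def vendor_b(prompt: str) -> str:
    return f"[vendor-b] {prompt}"


if __name__ == "__main__":
    router = ModelRouter()
    router.register("vendor-a", vendor_a)
    router.register("vendor-b", vendor_b)
    print(router.complete("Summarize Q3 results"))  # routed to vendor-a
    router.use("vendor-b")
    print(router.complete("Summarize Q3 results"))  # same call, now vendor-b
```

The design choice is the point: application code only ever calls `complete`, so moving from one model to another changes a registration or default rather than every call site.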

- Snowflake adds OpenAI models to Cortex AI via expanded Microsoft partnership, integration

- Snowflake, Anthropic ink LLM partnership, delivers strong Q3, acquires Datavolo

- Databricks launches DBRX LLM for easier enterprise customization

Enterprise energy should go toward orchestrating these models and, ultimately, the AI agents they'll power.

- AI infrastructure spending is upending enterprise financial modeling

- AI? Whatever. It's all about the first party data

- Enterprise AI: Here are the trends to know right now

Commoditization is happening rapidly

The funny part of this model war is that it's unclear how the models will be monetized. Open source models--led by Meta's Llama family--are on par with the proprietary LLMs. Those models are being tailored by companies like Nvidia, which tweak them for enterprise use cases.

DeepSeek is blowing up the financial model, and AWS' Nova family is likely to be good enough, just as its custom silicon chips are. Hugging Face's trending models tell the tale: DeepSeek, Qwen, Nvidia's new models and Google's Gemma are dominant. But that's just today. One thing seems certain: The price of models is headed toward free.