Copilot and generative AI assistant implementations are getting so complicated that the consultants are marching in.

As tech vendors roll out various copilots and assistants across applications, sprawl is going to become a real issue in a hurry. Enterprise software vendors all have AI helpers across their suites and platforms, often with a per-user surcharge.

Implementing these layers of copilots is going to require consultants as enterprises inevitably customize.

It all sounds a bit ERP to me.

Consider:

- IBM Consulting launched IBM Copilot Runway, which will help create, customize, deploy and manage copilots including Copilot for Microsoft 365. Consultants will focus on use cases and various processes.

- SAP and IBM will also collaborate on models for various use cases.

- Accenture has a similar deal to deploy Microsoft Azure OpenAI Service and hone use cases.

- Anthropic has partnered with AWS and Google Cloud with a dash of Accenture.

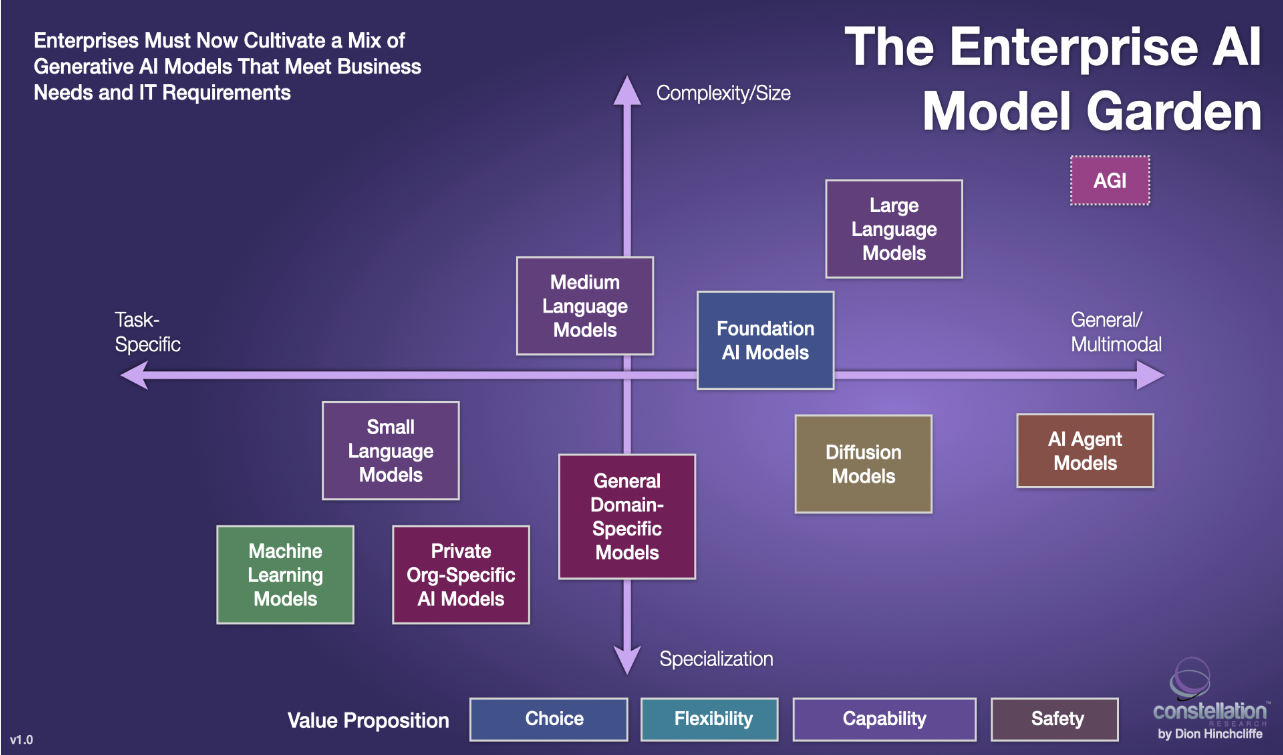

All of these consultants will come in handy. How do you decide between horizontal copilots and agents vs. ones focused on a specific use case or industry? The copilot game will be all about architecture, ModelOps and the ability to swap large language models as needed.

However, I raise an eyebrow when I see consulting firms swarm to the copilot pot of gold. That swarm of consultants means that enterprises are struggling to execute the generative AI dream and implementation costs are going to go higher. The other axiom of enterprise technology: If there's a way to make projects overly complicated, we will.

This post first appeared in the Constellation Insight newsletter, which features bespoke content weekly and is brought to you by Hitachi Vantara.

Here are a few reasons why copilot implementations are going to be a challenge:

Multiple models. Yes, you want model choice. Yes, you want to swap models as they mature and leapfrog each other. But enterprises in copilot implementations can pick a model today that's outdated a month later. Anthropic's Claude 3 was an enterprise fave. Then Meta's Llama 3 became an option. And OpenAI launched GPT-4o, which may up the ante even more. LLM development is moving fast enough to give enterprises analysis paralysis.

Abstraction layers are developing, but haven't matured. Amazon Bedrock holds a lot of promise because enterprises can swap models. The platform is developing quickly, but the LLMs are moving faster.
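To make the model-swapping idea concrete, here is a minimal sketch of what an abstraction layer does. It is not how Amazon Bedrock or any vendor implements it. The `ModelRouter` class and the two stand-in backends are hypothetical names invented for illustration, and a real backend would wrap a vendor SDK call rather than return a canned string.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A backend is any function that maps a prompt to a completion.
# Assumption: real backends would wrap a vendor SDK; these are stand-ins.
Backend = Callable[[str], str]


@dataclass
class ModelRouter:
    """Hypothetical router: application code calls complete() and never
    names a specific model, so the model can be swapped in one place."""
    backends: Dict[str, Backend]
    active: str

    def swap(self, name: str) -> None:
        # Refuse to switch to a model that was never registered.
        if name not in self.backends:
            raise KeyError(f"unknown model: {name}")
        self.active = name

    def complete(self, prompt: str) -> str:
        return self.backends[self.active](prompt)


def fake_model_a(prompt: str) -> str:
    # Placeholder for a call to one provider's model.
    return f"[model-a] {prompt}"


def fake_model_b(prompt: str) -> str:
    # Placeholder for a call to a different provider's model.
    return f"[model-b] {prompt}"


router = ModelRouter(
    backends={"model-a": fake_model_a, "model-b": fake_model_b},
    active="model-a",
)
first = router.complete("Summarize Q2 pipeline")
router.swap("model-b")  # the calling code above does not change
second = router.complete("Summarize Q2 pipeline")
```

The catch is that a thin interface like this only covers the lowest common denominator. Prompt formats, tool calling and context limits differ by model, which is part of why these layers are still maturing.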

Copilot sprawl. With every vendor, platform and service including a copilot, agent or assistant, orchestration is going to be a big issue. Let's just take an average large enterprise stack.

- Multicloud: Google Cloud Gemini and Amazon Q

- ERP: SAP and Joule

- CRM: Salesforce with Einstein Copilot

- Productivity: Microsoft 365 with Copilot

- Productivity part 2: A few departments with Google Workspace and Gemini

- Platform: ServiceNow with Now Assist

- Custom applications: OpenAI ChatGPT, Anthropic Claude, Meta Llama 3, Databricks DBRX

Assuming you take on the AI assistants from just half of your vendors, you are going to be crushed with sprawl and expenses.
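A back-of-the-envelope sketch shows how quickly per-user surcharges stack up. Every seat count and price below is a made-up placeholder, not a vendor list price, and the `annual_copilot_cost` helper is hypothetical. Plug in your own contract numbers.

```python
from typing import Dict, Tuple


def annual_copilot_cost(licenses: Dict[str, Tuple[int, float]]) -> float:
    """Sum yearly spend across assistants.

    Each entry maps a label to (seats, per-user monthly surcharge in dollars).
    """
    return sum(seats * per_user_month * 12
               for seats, per_user_month in licenses.values())


# Hypothetical stack: assistants from roughly half the vendors listed above.
# All figures are illustrative assumptions.
stack = {
    "productivity": (5000, 30.0),
    "crm": (800, 50.0),
    "erp": (400, 40.0),
    "platform": (600, 35.0),
}

total = annual_copilot_cost(stack)
print(f"Annual assistant surcharges: ${total:,.0f}")
```

With these placeholder inputs the total lands at about $2.7 million a year, before any consulting, integration or model usage fees.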

Costs. The upcharge for various AI assistants was already starting to take a toll. Now you'll be adding consultants to the mix.

ROI. Generative AI returns to date have been judged based on productivity metrics. As these implementations see higher costs, those returns will be shaved. Another wrinkle that may hamper returns: A CXO on our most recent BT150 call noted that he was struggling to make his premium copilot work across meetings and applications.

It's always about the data strategy. There's a reason that AI is being deployed successfully in regulated industries: These enterprises have to have their data in order for compliance. Other firms have to develop their data management and governance mojo to even consider genAI.

If all else fails, you could just ask the latest LLM how you should manage the sprawl.
