Cerebras Systems, a startup focused on building AI systems and processors, launched Cerebras Inference, which the company claims is 20 times faster than Nvidia GPU-based instances in hyperscale clouds.

The startup has confidentially filed plans to go public in the second half of 2024. Cerebras Inference is available via Cerebras Cloud or as an on-premises system. The Cerebras news lands as Nvidia is highlighting how it is optimizing software to boost the performance of its GPUs and integrated stack. Meanwhile, AMD bought ZT Systems to build out its AI infrastructure designs.

According to Cerebras, Cerebras Inference delivers 1,800 tokens per second with Llama 3.1 8B and 450 tokens per second for Llama 3.1 70B. Cerebras Inference starts at 10 cents per million tokens.

In a blog post, Cerebras outlined how Cerebras Inference differs from existing architectures. While much of the focus for generative AI revolves around training models, inference is going to constitute a large chunk of workloads. Those inference workloads will be allocated based on business needs and price/performance. For instance, AWS with its Trainium and Inferentia chips, Google Cloud with its TPUs, and other players such as AMD may look appealing vs. Nvidia.

Constellation Research analyst Holger Mueller said:

"We are in the custom hardware acceleration phase of AI – and we see what can be done by Cerebras. Holding complete models in SRAM provides better performance for inference and is likely going to change what the go-to inference architecture is going to be. And this development is one more data point illustrating how training and inference platforms are diverging on the spec side. The risk for custom inference platforms is getting smaller by the quarter, as the nature of the transformer model outputs remains the model of choice for genAI, while the inference market keeps doubling quarter after quarter: There needs to [be] platforms that run all the inference."

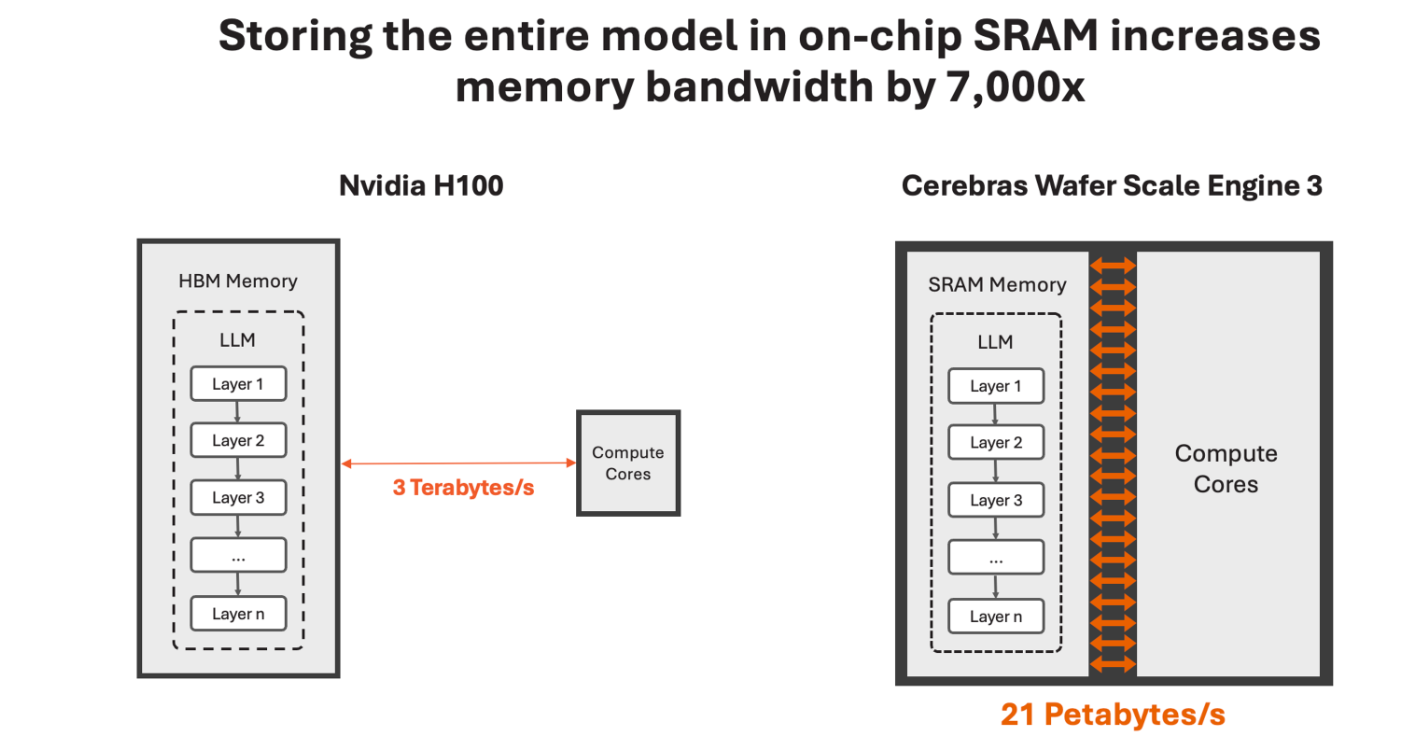

In its post, Cerebras compared its AI processor, the Wafer Scale Engine 3, to Nvidia H100 systems. Note that Nvidia is rolling out H200 systems, with Blackwell to follow later. Cerebras' CS-3 system is an integrated AI stack that can be scaled out.

Cerebras' inference service is available in three tiers: a free tier, which offers API access and generous usage limits; a developer tier, with models priced at 10 cents and 60 cents per million tokens; and an enterprise tier, which includes fine-tuned models, custom service level agreements and dedicated support.
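To put the quoted throughput and pricing in perspective, here is a rough back-of-the-envelope sketch in Python. It rests on several assumptions: the model keys are hypothetical labels, the 10-cent and 60-cent prices are assumed to map to Llama 3.1 8B and 70B respectively (the pricing above does not say which is which), and generation is treated as a single stream running at the vendor-claimed rate with no queuing or input-token costs.

```python
# Figures quoted in the article (vendor claims, not independent measurements).
# The dictionary keys are hypothetical labels, not official model identifiers.
TOKENS_PER_SECOND = {"llama-3.1-8b": 1800, "llama-3.1-70b": 450}

# Assumption: the 10-cent price applies to the 8B model and the 60-cent price
# to the 70B model; the article does not state which price maps to which model.
USD_PER_MILLION_TOKENS = {"llama-3.1-8b": 0.10, "llama-3.1-70b": 0.60}


def estimate(model: str, tokens: int) -> tuple[float, float]:
    """Return (seconds, cost in USD) to generate `tokens` output tokens."""
    seconds = tokens / TOKENS_PER_SECOND[model]
    cost = tokens / 1_000_000 * USD_PER_MILLION_TOKENS[model]
    return seconds, cost


if __name__ == "__main__":
    for model in TOKENS_PER_SECOND:
        seconds, cost = estimate(model, 1_000_000)
        print(f"{model}: {seconds:.0f} s, ${cost:.2f} per million tokens")
```

Under these assumptions, a million output tokens would take roughly nine minutes on the 8B model and about 37 minutes on the 70B model; real-world figures will depend on batching, prompt length and service limits.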

Meanwhile, the company has also been building out its leadership team. Cerebras added Paul Auvil and Glenda Dorchak to its board of directors. Auvil was most recently CFO of VMware and Proofpoint. Dorchak is a former IBM, Intel and Spansion executive. Both were added for their technology and corporate governance experience. In addition, Cerebras named Bob Komin CFO. He previously served as CFO of Sunrun, Flurry and Tellme Networks.
