Amazon Web Services is focused on enabling generative AI throughout its platform in a bid to move projects from pilot to production. The strategy is to meet enterprises where they are with practical features, including fine-tuning of large language models (LLMs), guardrails and additions to Amazon Bedrock.

At AWS Summit in New York, the company set out to layer genAI throughout its tech stack, along with services that make it more a part of everyday workflows. To help genAI move from proof of concept to production, AWS is rolling out a series of services. Bayer is rolling out Amazon Q across its organization, and Amazon's One Medical is doing the same. AWS, which has launched 326 genAI features since 2023, also touted Ferrari's use of genAI to ingest vehicle data and create new designs and models.

Add it up and AWS' big goal was to present its genAI stack as a series of productivity gains and business wins. Matt Wood, VP of AI Products at AWS, said: "It's better to focus on what's not going to change."

In other words, genAI will have to deliver business value to move from pilot to production. Wood said genAI is still early in its development, with leaps in business value expected over the next 12 to 18 months. "It's becoming apparent that genAI will be woven throughout all of our applications," said Wood.

Here's a look at what AWS announced:

Q Apps goes to GA

Q Apps will be generally available. AWS said Q Apps will enable customers to build apps that execute tasks via a natural language interface, and those apps can then be shared with others. AWS' pitch for Q Apps focused on optimizing processes. For instance, Q Apps can distill CRM data into insights that feed new RFPs, saving hours spent digging through documents and content repositories.

The shift with Q Apps is that AWS is moving from finding an answer to a question to figuring out what the problem is. What remains to be seen is whether a horizontal interface such as Q effectively becomes the engagement layer to other enterprise applications.

Q Apps can turn Amazon Q conversations into apps, leverage enterprise data and inherit security and governance controls.

AWS is enabling developers to generate custom code inside Amazon Q Developer since Q can become familiar with an enterprise's coding practices, terminology and comments. "Picture a world where developers are running multiple agents in parallel," said Wood.

Within Amazon SageMaker, Q will be used to move more quickly through steps such as fine-tuning models and preparing data. AWS is adding the ability to build ML models using natural language and to get recommendations, generate code and resolve bugs within SageMaker Studio notebooks.

The upshot is that customers are starting to use Amazon Q as a vehicle to build assistive systems. Wood said Q can be used for large applications as well as ones for small teams. Smartsheet uses Amazon Q chatbots to connect teams, write account plans and complete other tasks.

Bedrock improvements

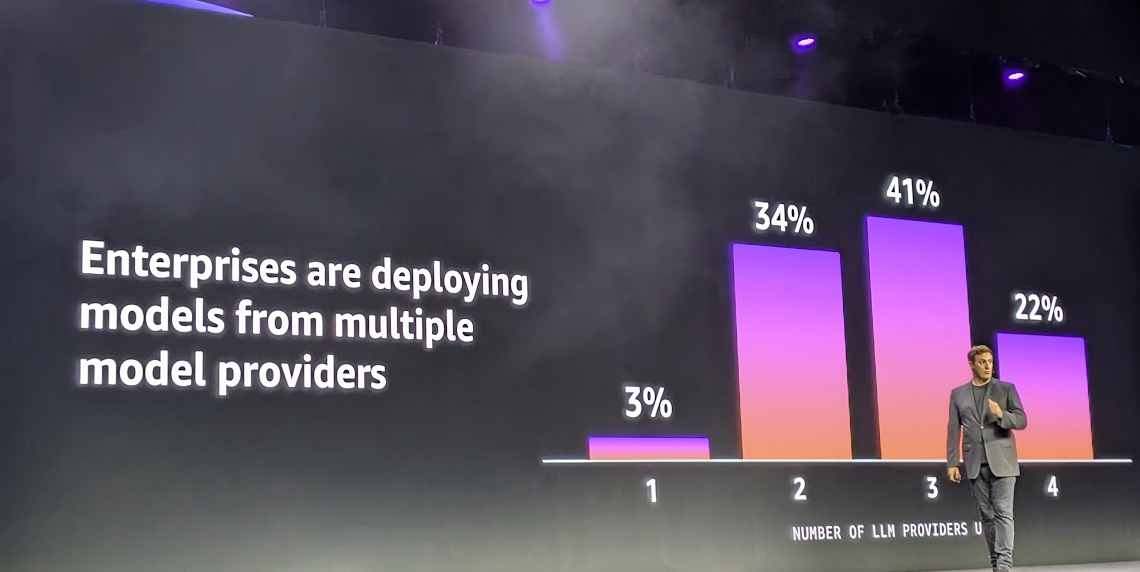

Bedrock will add a new image model from Stability AI to complement the addition of Anthropic's Claude 3.5 Sonnet. Wood said that 41% of large enterprises were using 3 or more large language models.

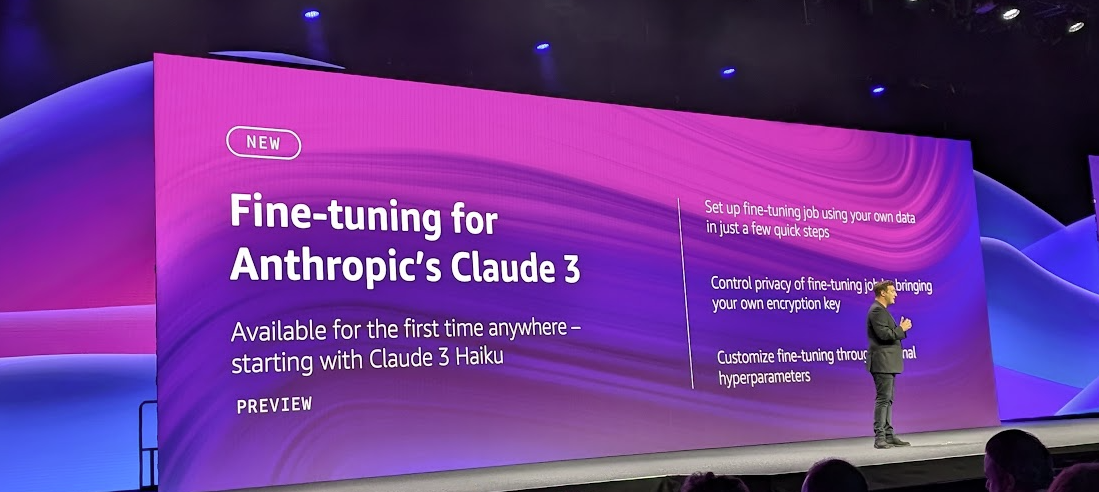

Claude 3 Haiku will also be fine-tunable via Bedrock, with fine-tuning for other Claude models to follow. Fine-tuning in Bedrock should be a win for enterprises, since a fine-tuned smaller model can be more cost-effective and useful. Wood said a few quick steps will let enterprises tune with internal data, control privacy with encryption keys and customize with hyperparameters.

Bedrock will also get more retrieval augmented generation (RAG) capabilities, including new connectors to Salesforce, Confluence and Microsoft SharePoint that bring in more enterprise data and knowledge bases. Those connectors, part of Knowledge Bases for Amazon Bedrock, are designed to enhance foundation models as well as speed up vector searches and integrations with Amazon MemoryDB.

"Customers are using RAG for a wide range of use cases," said Wood. "We're expanding the type of data you can connect beyond S3 into additional data connectors and Web data from user provided public URLs."
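To make the retrieval step concrete, here is a minimal, generic sketch of how RAG works: rank stored text chunks by similarity to the user's question and prepend the best matches to the prompt before it reaches a model. It is not the Knowledge Bases for Amazon Bedrock API. The word-count "embedding", the function names and the sample chunks are all hypothetical stand-ins for a real embedding model, vector store and connector output.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (a linear scan, not a vector index)."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Augment the user's question with retrieved context before it goes to a model."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical chunks, as if pulled in from different enterprise data connectors.
chunks = [
    "Enterprise customers can request a refund within 30 days.",
    "The cafeteria opens at 8 am.",
    "The SharePoint site lists quarterly sales targets.",
]
print(build_prompt("What is the refund window for enterprise customers?", chunks, k=1))
```

In a managed service, the data connectors would populate the chunk store and a vector database would replace the linear scan used here.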

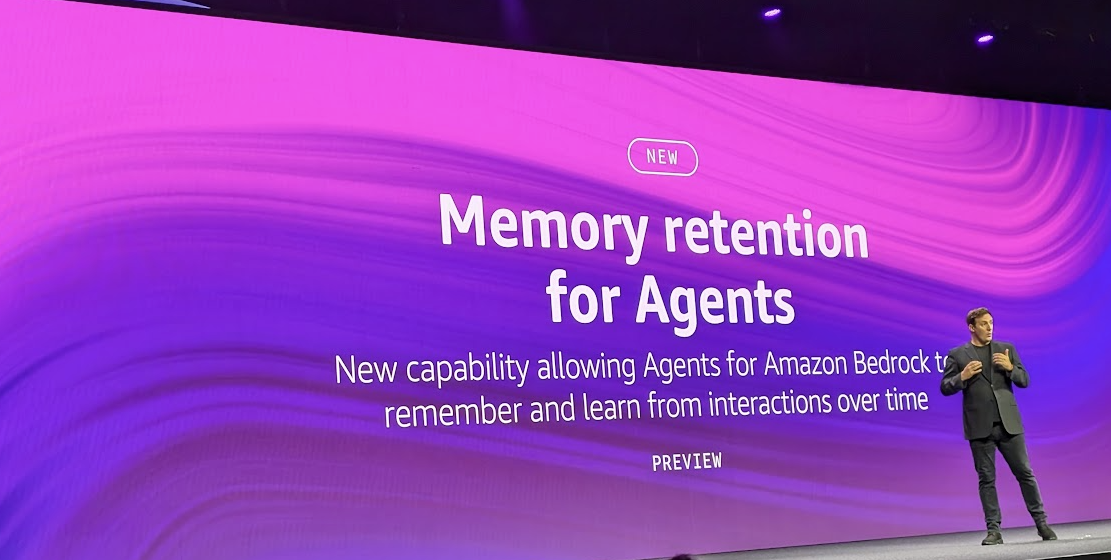

AWS said it is also adding capabilities to Agents for Amazon Bedrock, including the ability to retain memory over multiple interactions. This capability is being integrated into customer-facing use cases at Delta, United and Booking.com.

"You can carry over the context from different agent workflows," said Wood.

Wood added that Agents for Amazon Bedrock will be able to generate code, analyze data and generate graphs.
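Memory that carries over between interactions can be pictured as a per-user store of session summaries that is replayed into the next session's prompt. The sketch below assumes that design purely for illustration; `AgentMemory`, `start_session` and the travel example are hypothetical and do not reflect how Agents for Amazon Bedrock is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical per-user memory that persists across agent sessions."""
    max_items: int = 5
    summaries: dict[str, list[str]] = field(default_factory=dict)

    def remember(self, user_id: str, session_summary: str) -> None:
        """Store a summary of a finished session, keeping only the most recent ones."""
        items = self.summaries.setdefault(user_id, [])
        items.append(session_summary)
        if len(items) > self.max_items:
            del items[: len(items) - self.max_items]

    def context_for(self, user_id: str) -> str:
        """Render remembered summaries as context for the next session."""
        return "\n".join(f"Earlier: {s}" for s in self.summaries.get(user_id, []))


def start_session(memory: AgentMemory, user_id: str, message: str) -> str:
    """Build the first prompt of a new session, carrying over prior context if any."""
    context = memory.context_for(user_id)
    return f"{context}\nUser: {message}" if context else f"User: {message}"


memory = AgentMemory(max_items=2)
memory.remember("traveler-1", "Booked a flight to New York for 12 July")
memory.remember("traveler-1", "Prefers aisle seats")
memory.remember("traveler-1", "Asked about checked-bag allowance")
print(start_session(memory, "traveler-1", "Can you change my seat?"))
```

Capping the number of stored summaries keeps the carried-over context bounded, at the cost of forgetting older sessions.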

Guardrails

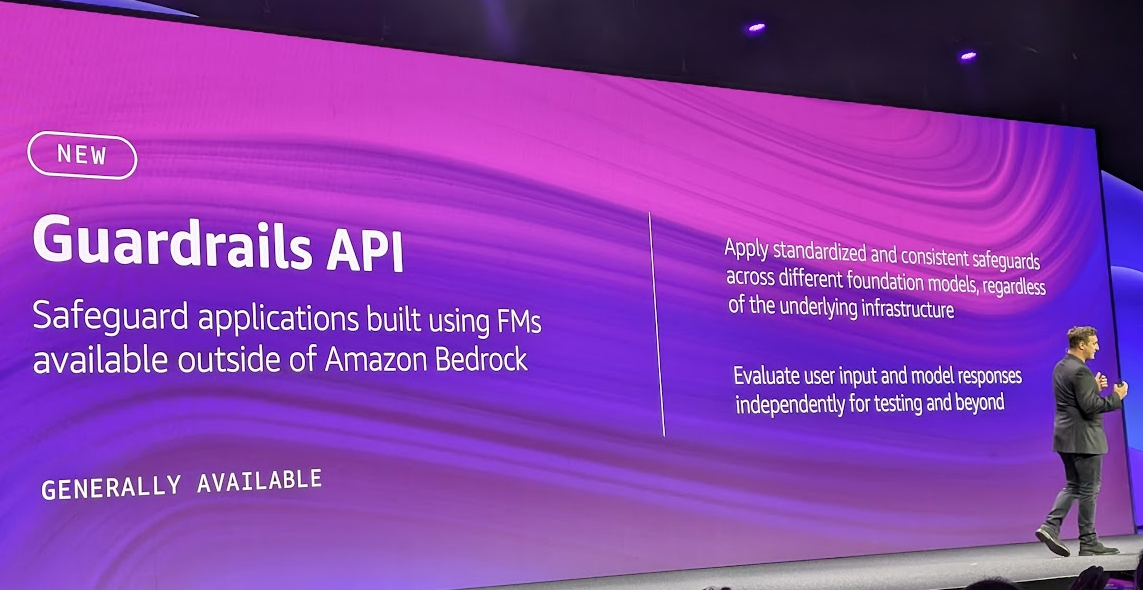

The company is also launching guardrails that extend beyond Bedrock and are independent of models. Guardrails can ground responses based on context, search for a subset of acceptable answers via knowledge base connectors and increase response rates. AWS is betting that the ability to add a guardrail layer that's LLM- and application-agnostic will be a win.

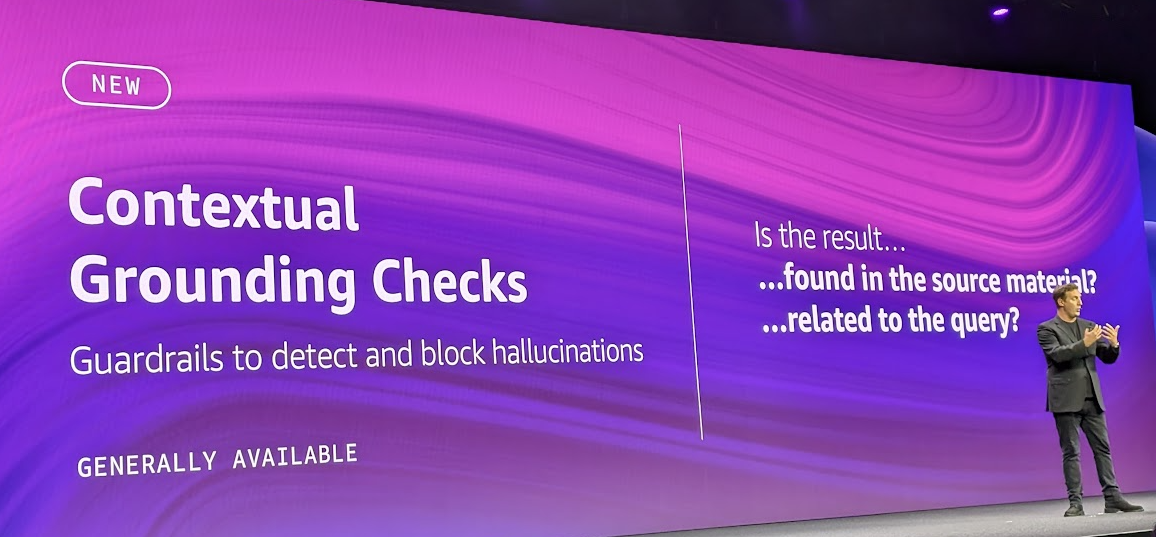

AWS launched Contextual Grounding Check in Guardrails to ground answers in knowledge bases and corporate data. The grounding check asks whether the RAG result is found in the source material and whether it's relevant to the query.
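As a rough illustration of the two questions the grounding check asks, the sketch below scores an answer for grounding (how much of it appears in the source) and relevance (how much of the query it addresses), and blocks it when either score falls below a threshold. It uses simple word overlap as a hypothetical stand-in for the model-based scoring a production guardrail would use; the function names, stopword list and threshold values are assumptions, not AWS settings.

```python
import re

# Small illustrative stopword list (an assumption for this toy example).
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "in", "on", "for",
             "and", "or", "do", "does", "how", "what", "have", "has", "can"}


def content_words(text: str) -> set[str]:
    """Lowercase word set with common function words removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def overlap(part: set[str], whole: set[str]) -> float:
    """Fraction of `part` that also appears in `whole` (0.0 if `part` is empty)."""
    return len(part & whole) / len(part) if part else 0.0


def grounding_check(answer: str, source: str, query: str,
                    grounding_threshold: float = 0.9,
                    relevance_threshold: float = 0.5) -> dict:
    """Score an answer against its source and the query; block it if either score is too low."""
    answer_words = content_words(answer)
    grounding = overlap(answer_words, content_words(source))  # is the answer supported by the source?
    relevance = overlap(content_words(query), answer_words)   # does the answer address the query?
    blocked = grounding < grounding_threshold or relevance < relevance_threshold
    return {"grounding": grounding, "relevance": relevance, "blocked": blocked}


source = "Enterprise customers can request a refund within 30 days of purchase."
query = "How long do enterprise customers have to request a refund?"
for answer in [
    "Enterprise customers can request a refund within 30 days.",
    "Enterprise customers can request a refund within 90 days.",
    "The cafeteria opens at 8 am.",
]:
    print(answer, grounding_check(answer, source, query))
```

Here the 90-day answer is blocked because one of its seven content words ("90") does not appear in the source, pulling the grounding score below 0.9, while the off-topic answer fails on both scores.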

AWS is also adding a new interface for prompt flows and prompt management. These prompt tools will be specific to model families but will enable faster upgrades.

Amazon is matching 100% of the electricity consumed by its global operations with renewable energy. The play for AWS is offsetting sustainability concerns about genAI workloads.