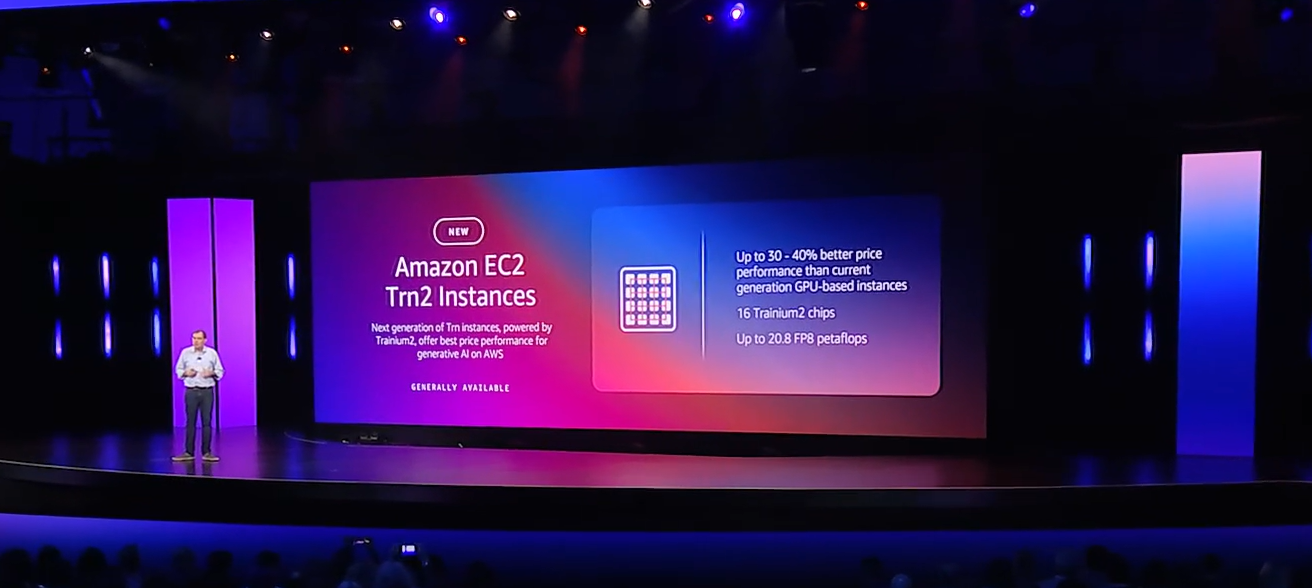

AWS launched new instances based on its Trainium2 processor, which offers 4 times the performance of Trainium1 with twice as more energy efficiency. AWS also prepped for larger training workloads with Trainium2 UltraServers that will be pooled into a cluster.

The cloud giant also set the table for AWS Trainium3.

Trainium2 provides more than 20.8 petaflops of FP8 compute, up from 6 petaflops with the first Trainium. EFA networking in Trainium2 is 3.2 Tbps, up from 1.6 Tbps and HBM is 1.5 TB, up from 512 GB. Memory bandwidth for Trainium2 is 45 TB/s, up from 9.8 TB/s.

AWS CEO Matt Garman said at the re:Invent 2024 keynote that Adobe, Poolside, Databricks, Qualcomm and Anthropic were among the companies working on Trainium2 instances.

Garman was also joined on stage by Apple, who is working on Trainium2 for training workloads. He said:

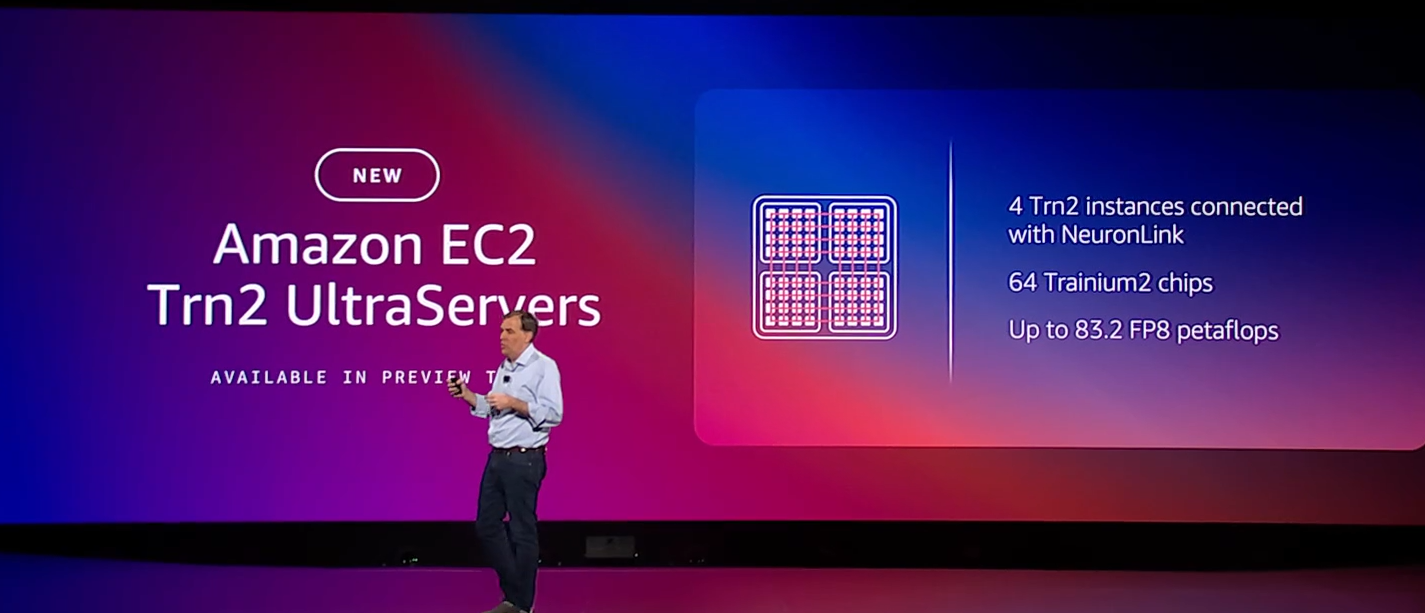

"Effectively, an UltraServer connects four Trainium2 instances, so 64 Trainium2 chips, all interconnected by the high-speed, low-latency neural link connectivity. This gives you a single ultra node with over 83 petaflops of compute from this single compute node. Now you can load one of these really large models all into a single node and deliver much better latency, much better performance for customers without having to break it up over multiple nodes."

AWS was early to using custom silicon for training and inference and is looking to provide less expensive options than Nvidia. Apple is already using Trainium and Inferentia instances to power its own models, writing tools, Siri improvements and other additions.

The cloud giant's product cadence is designed in part to enable customers to easily shift training and inference workloads to optimize costs.

More:

- AWS outlines new data center, server, cooling designs for AI workloads

- AWS re:Invent 2024: Four AWS customer vignettes with Merck, Capital One, Sprinkr, Goldman Sachs

- Oracle Database@AWS hits limited preview

Garman said that AWS is stringing together Trainium instances in a cluster that will be able to provide compute for the largest models. Apple said it is currently evaluating Trainium2.

AWS' custom silicon strategy also revolves around Graviton instances as well as Inferentia. Customers on a panel at re:Invent highlighted how they were using AWS processors.

Although AWS has custom silicon, it is also rolling out instances based on other chips. Garman noted that AWS would launch new P6 instances on Nvidia's Blackwell GPUs with availability early 2025. AWS is also launching new AMD instances.

The bet is that AWS’ custom silicon will ultimately yield better price and performance for enterprise AI.

Constellation Research analyst Holger Mueller said:

"AWS is making good progress building more powerful combinations of its Trainum chips. It is showing that they have solved potential heating, electromagnetic interference and cooling issues. You can expect that Trainium will be scaled to 64 and potentially 128 chips per instance. But it all needs to be put in perspective as Google Cloud is on version 6 of its custom silicon. The announcement puts Amazon ahead of Microsoft."