AWS outlined a series of improvements to its S3 service to manage metadata automatically, leverage Apache Iceberg tables and optimize for analytics workloads with Amazon S3 Tables. Also on the data front, AWS moved to reduce latency for its databases.

At AWS re:Invent 2024, CEO Matt Garman said services like S3 and Amazon Aurora DSQL are designed to set up enterprises to make data lakes, analytics and AI more seamless. "We'll continually optimize that query performance for you and the cost as your data lake scales," said Garman.

Garman's storage and data segment featured JPMorgan Chase CIO Lori Beer, who described how the bank is using AWS for its data infrastructure. The upshot is that AWS aims to help its enterprise customers set up data services for AI. "Our goal is to leverage genAI at scale," said Beer.

Here's the rundown of the storage and database enhancements at AWS.

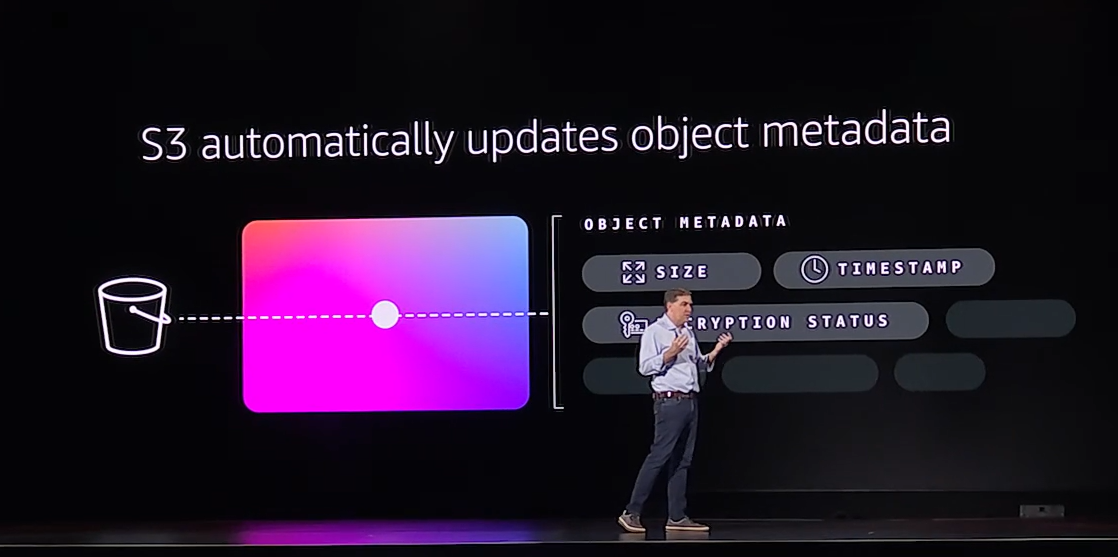

- S3 will automatically generate metadata as objects are captured in S3. This service is in preview, and the metadata will be stored in managed Apache Iceberg tables. This move sets S3 up to improve inference workloads and data sharing with services like Bedrock.

- Amazon S3 Tables will provide storage that's optimized for tabular data including daily purchase transactions, sensor data and other information.

- AWS retooled its database engine. Aurora DSQL is designed to be the fastest distributed SQL database, one that handles management and delivers low-latency reads and writes. Aurora DSQL is also Postgres compatible.

- DynamoDB global tables will also get the same low-latency setup.
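To make the S3 metadata item above more concrete, here is a minimal sketch of why table-shaped object metadata matters: once metadata lives in rows, finding objects becomes a SQL query rather than a scan over bucket listings. The example uses Python's built-in `sqlite3` as a local stand-in for a managed Iceberg table. The table name, column names, and sample rows are assumptions made for illustration, not the actual S3 schema or any AWS API.

```python
import sqlite3

# Hypothetical object-metadata rows. The columns are illustrative only
# and do not reflect the real schema AWS uses for S3 metadata.
ROWS = [
    ("logs/2024/12/01/app.log", 1_048_576, "2024-12-01", "text/plain"),
    ("images/cat.png", 204_800, "2024-12-02", "image/png"),
    ("images/dog.png", 512_000, "2024-12-03", "image/png"),
    ("exports/sales.parquet", 8_388_608, "2024-12-03", "application/parquet"),
]


def build_metadata_table() -> sqlite3.Connection:
    """Create an in-memory table standing in for a managed metadata table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE object_metadata ("
        "key TEXT PRIMARY KEY, size_bytes INTEGER, "
        "last_modified TEXT, content_type TEXT)"
    )
    conn.executemany("INSERT INTO object_metadata VALUES (?, ?, ?, ?)", ROWS)
    conn.commit()
    return conn


def find_objects(conn: sqlite3.Connection, content_type: str, modified_since: str) -> list[str]:
    """Return keys of objects of a given type modified on or after a date (ISO format)."""
    cur = conn.execute(
        "SELECT key FROM object_metadata "
        "WHERE content_type = ? AND last_modified >= ? "
        "ORDER BY key",
        (content_type, modified_since),
    )
    return [row[0] for row in cur.fetchall()]


if __name__ == "__main__":
    conn = build_metadata_table()
    print(find_objects(conn, "image/png", "2024-12-02"))
```

In a real deployment the query would presumably run through an Iceberg-aware analytics engine against the managed table, but the shape of the question ("which objects of this type changed since this date?") would be the same.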