AMD CFO Jean Hu said cloud providers and enterprises are starting to focus on total cost of ownership for artificial intelligence inference and training workloads.

Speaking at J.P. Morgan’s annual technology conference, Hu said data center demand for the company’s GPUs, accelerators and server processors was strong. She added that demand in the second half will be stronger than in the first. One big reason for that surge may be the news coming out of Microsoft Build 2024.

Microsoft announced the general availability of the ND MI300X VM series, which features AMD's Instinct MI300X accelerator. Each ND MI300X VM combines eight AMD Instinct MI300X accelerators. AMD is looking to give Nvidia's GPU franchise competition. In a briefing with analysts, Scott Guthrie, Executive Vice President of Cloud and AI at Microsoft, said AMD's accelerators were the most cost-effective GPUs available based on what the company is seeing with its Azure AI Service.

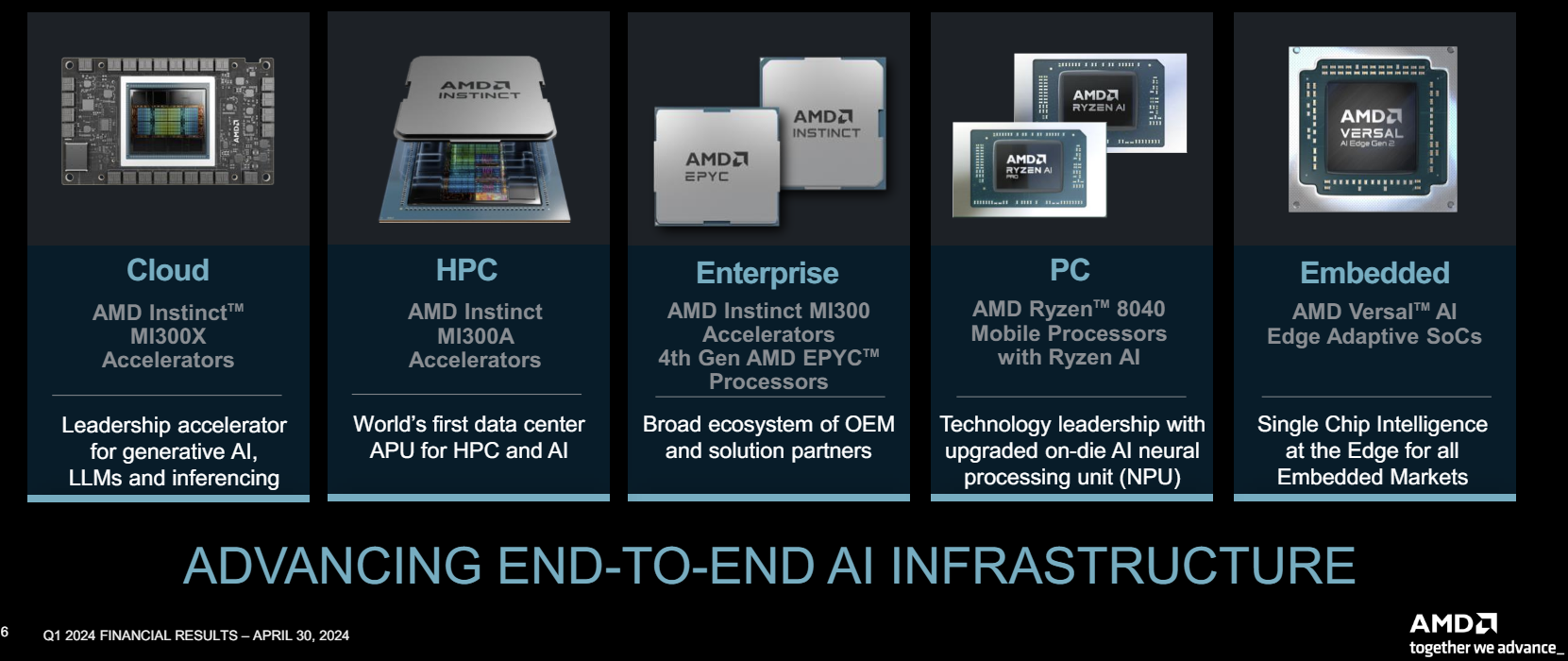

AMD Q1 delivers data center, AI sales surge of 80%

Hu said total cost of ownership is becoming critical as companies scale generative AI use cases.

Here are the key takeaways from Hu's talk.

The Microsoft Azure deal. "Actually, MI300 side, MI300X and ROCm software together actually power the Microsoft's virtual machine both for the internal workload, the ChatGPT, what open source to use, and also external workload, third-party workload," said Hu. "It's the best price performance to power the ChatGPT for inference. So that's really a proof point for not only MI300X from hardware, how competitive we are, but also from ROCm software, the maturity, how we have worked with our customers to come up with the best price performance."

She added that software investments have also been critical for TCO as AMD leverages open-source frameworks.

GPU workloads will also pull demand for CPUs. Cloud providers have more than 900 public AMD instances driving adoption. Hu said that enterprises are also adopting AMD server processors because they need to make room for GPUs. Hu said:

"All the CIOs in enterprise are facing a couple of challenges. The first is more workloads. The data is more, application is more, so they do need to have a more general compute. At the same time, they need to start think about how they can accommodate AI adoption in enterprise. They are facing the challenges of running out of power and space. If you look at our Gen 4 family of processors, we literally can provide the same compute with 45% less servers."

AMD's Gen 5 server processors, Turin, will also launch, with revenue ramping in 2025.

MI300 demand. "We have more than 100 customer engagements ongoing right now," said Hu. "The customer list includes, of course, Microsoft, Meta, Oracle, and those hyperscale customers, but we also have a broad set of enterprise customers we are working with." Dell Technologies' AI factory roadmap has two tracks: one solely Nvidia and one that will include AMD infrastructure as well as others.

Roadmaps. Hu said AMD has talked with customers about its GPU roadmap and is collecting feedback. She added that AMD tends to be conservative about announcing roadmaps, but that the roadmap can be expected to be competitive. "We will have a preview of our roadmap in the coming weeks," she said.

On-premises AI workloads. Hu noted that AMD is working with a bevy of hyperscalers, but enterprises are a critical customer base.

"When we talk to our enterprise customers, they do start to think about that question. Do I do it on premise? Do I send it to cloud? That is a strategic approach they have to think through. We are uniquely positioned because on the server side, we're working with our customers. We're helping them with how they deploy servers. It has become significant leverage for us. AI PC, the server side and the GPU side, that's a part of our go-to-market model right now."

Hu said inferencing workloads are strong and training is scaling. "Both training and inference are important to us," said Hu.