AMD launched its 5th Gen EPYC processor and its latest Instinct MI325X accelerators as it aims to win AI workloads ranging from inference to model training. The big takeaway is that AMD is well equipped to compete with Nvidia for AI workloads.

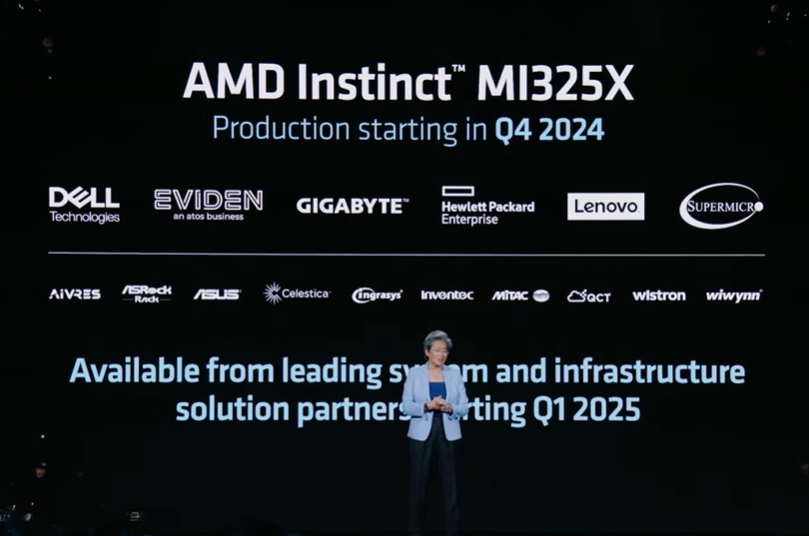

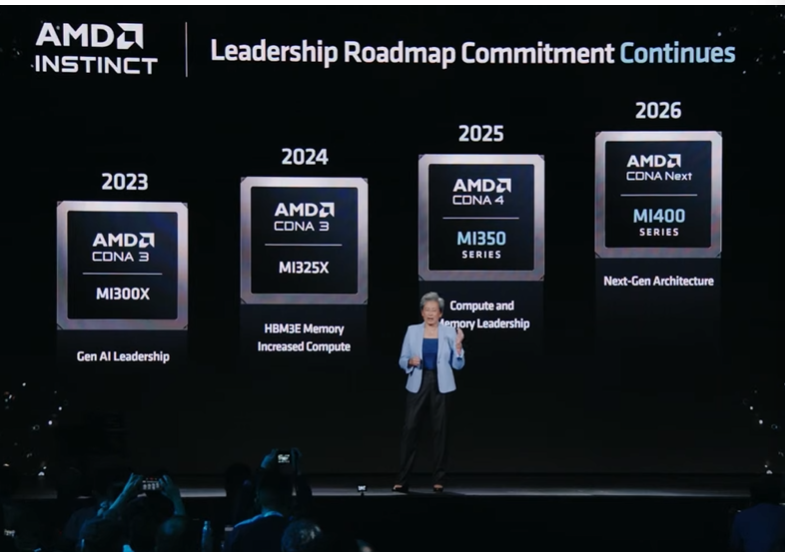

The chipmaker said its MI325X platform will begin production in the fourth quarter and performs favorably against Nvidia's H200 GPUs. AMD also outlined its annual release cadence as well as its roadmap heading into 2025.

Lisa Su, CEO of AMD, gave a closely watched keynote at the company's Advancing AI 2024 event. The launch of AMD's new enterprise CPUs and GPUs is critical given that the chipmaker is the best positioned to compete with Nvidia, which has dominated AI infrastructure. Su said that the data center AI accelerator market could reach $500 billion by 2028. "The data center and AI represent significant growth opportunities for AMD, and we are building strong momentum for our EPYC and AMD Instinct processors across a growing set of customers," she said.

AMD Instinct MI325X and what's ahead

Su said the next-gen Instinct GPU will have 256GB of HBM3E memory, 6TB/s of memory bandwidth and better overall performance than the previous MI300.

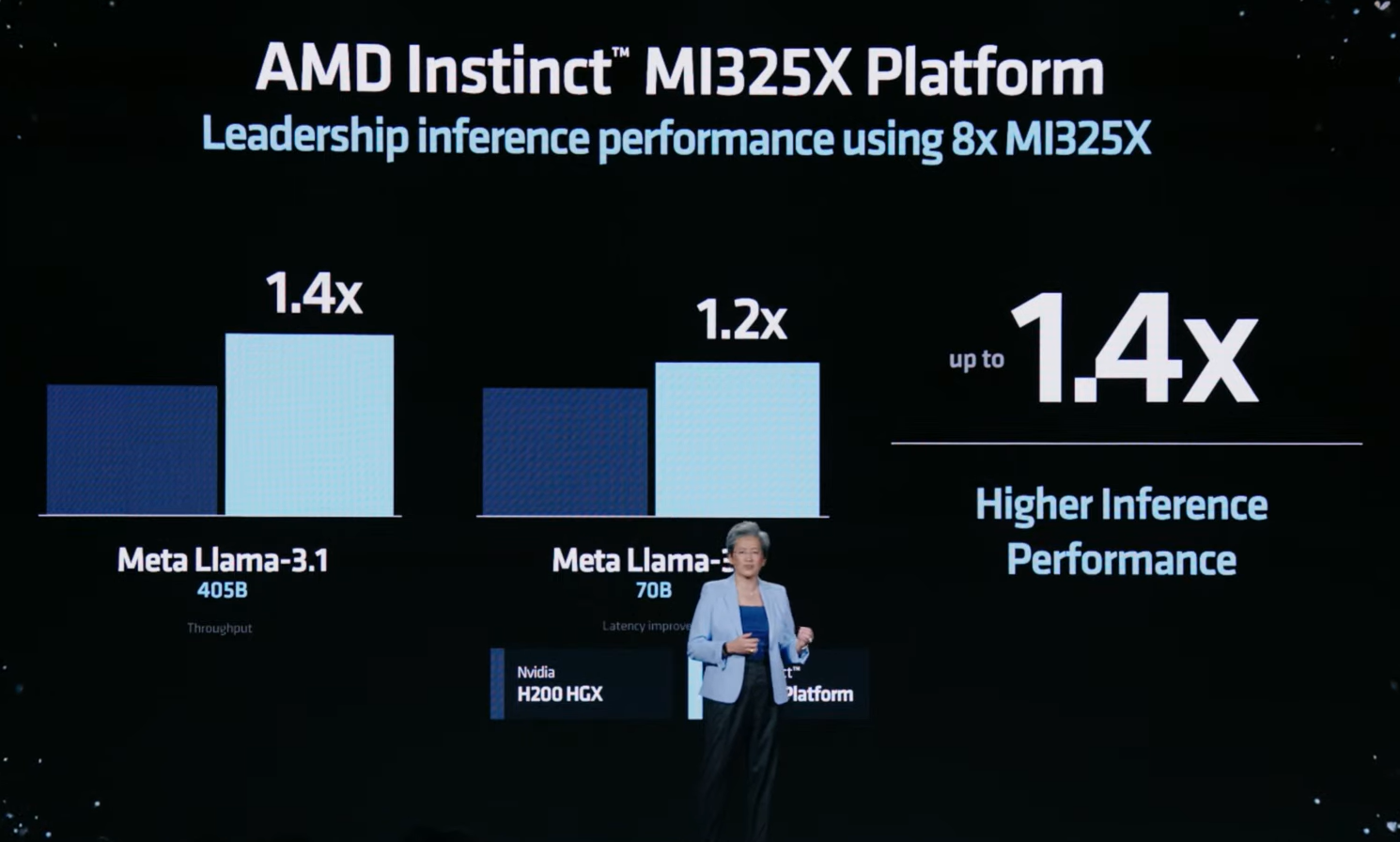

AMD added that the MI325X platform outperforms Nvidia's H200 HGX on Meta Llama inference workloads and matches it on 8-GPU training.

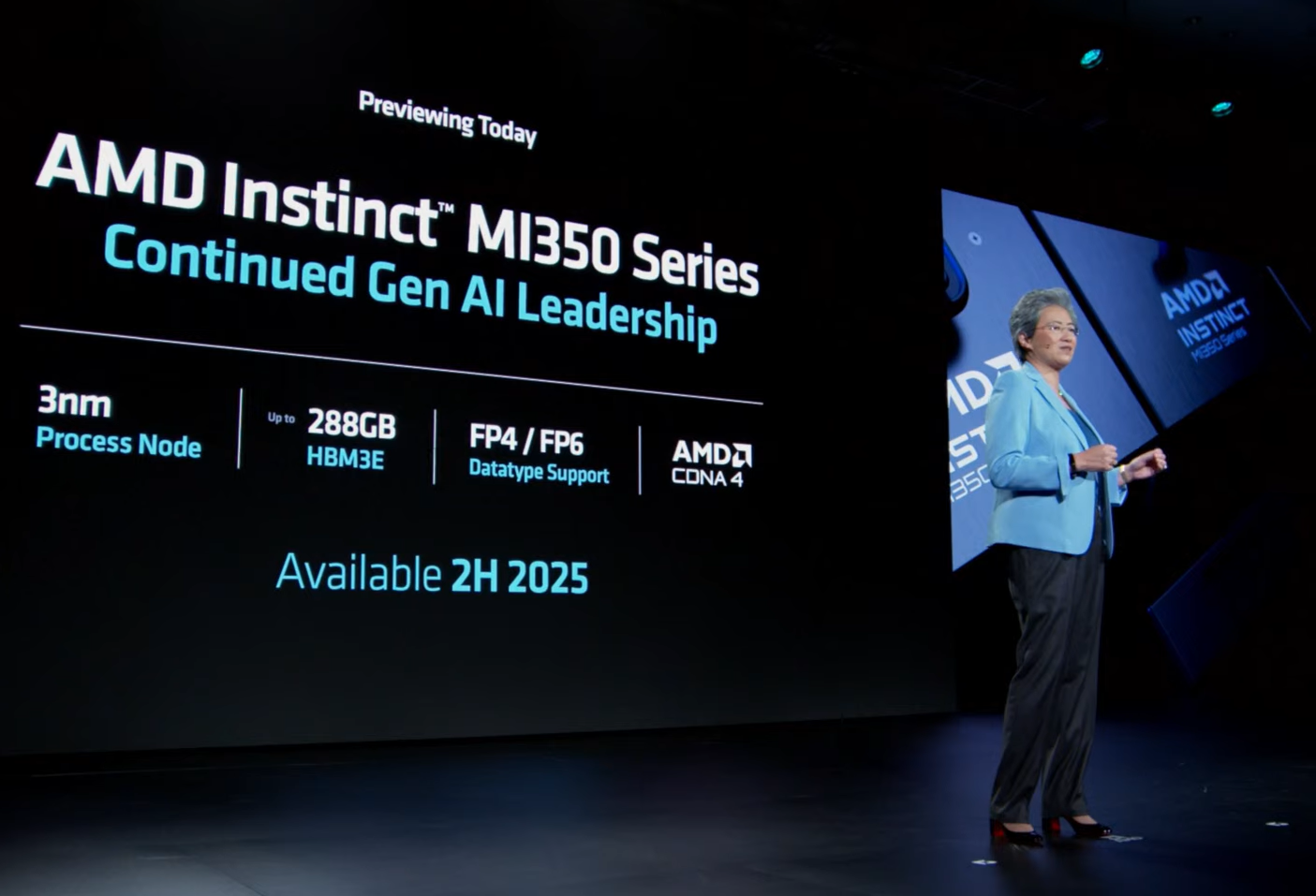

Su also said that the AMD Instinct MI355X accelerator is in preview ahead of a launch in the first half of 2025.

For AMD, the game is getting its GPUs into the hyperscale clouds (Google Cloud, Microsoft Azure and Oracle were on stage with Su live or by video) as well as into systems from key infrastructure providers such as Dell Technologies, HPE and Supermicro. These infrastructure providers are creating future-proofed data center designs that can accommodate both AMD and Nvidia. AMD also highlighted partners such as Databricks.

- AMD sees AI inference, training workloads increasing in enterprise

- AMD: Strong Q2, raised Q3 guidance, data center revenue doubles

- AMD outlines new GPUs, Instinct accelerators with annual cadence

- With Oracle Cloud win, AMD MI300X gains traction as Nvidia counterweight

- AMD acquires Silo AI for $665M as it builds out AI ecosystem, genAI stack

- AMD expands its data center, AI infrastructure push with $4.9 billion purchase of ZT Systems

Making the EPYC case for the enterprise

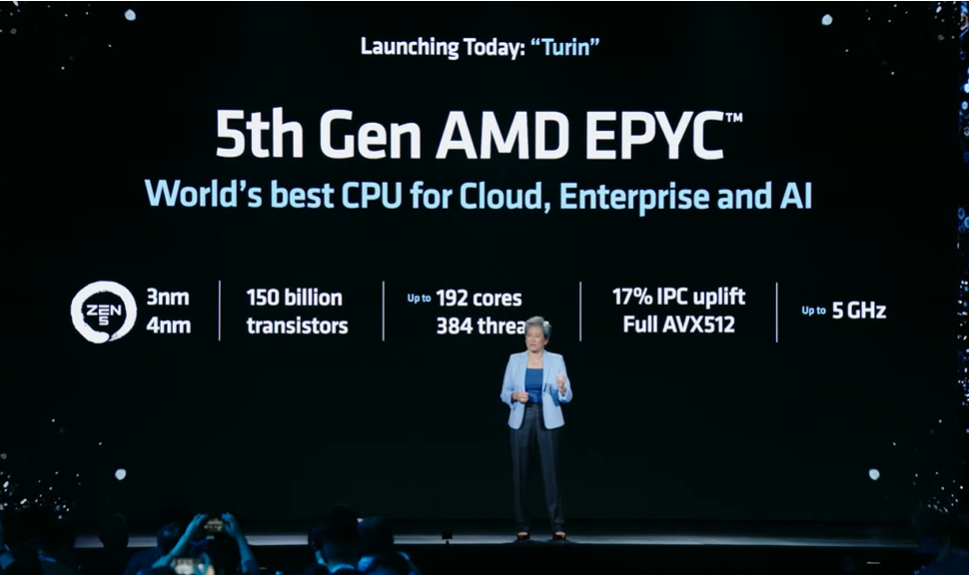

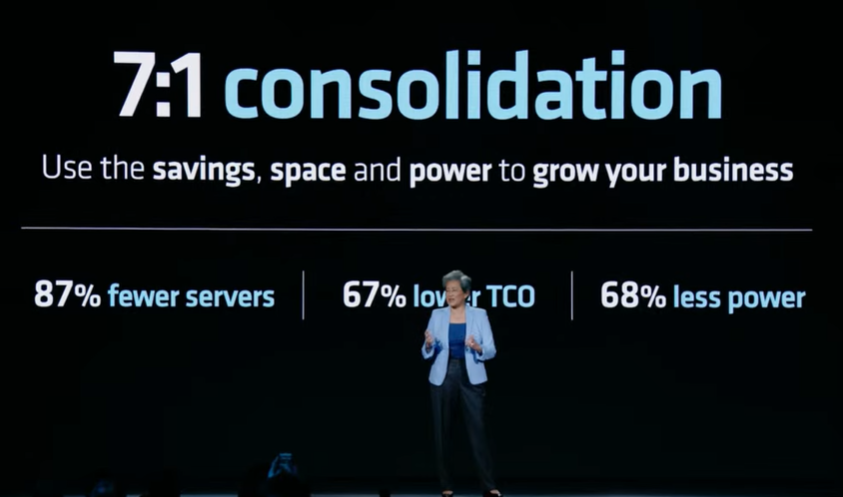

Su's keynote focused on a key theme for the 5th Gen EPYC processor in the data center: returns for the enterprise through lower total cost of ownership, along with the ability to take on inference workloads.

The latest EPYC server processor is billed as the best CPU for cloud, enterprise and AI workloads. The processor, formerly code-named Turin, has 150 billion transistors, up to 192 cores and up to 5GHz built on 3nm and 4nm technology.

For the enterprise, Su said the latest EPYC has up to 1.6x performance per core in virtualized infrastructure and up to 4x throughput performance for open-source databases and video transcoding.

As for inference workloads, Su said the latest EPYC processor has up to 3.8x the AI performance for machine learning and end-to-end AI.

The broader portfolio

Although Instinct and EPYC were the headliners, AMD had a bevy of other offerings to round out its AI portfolio. Here's a look:

- AMD's CDNA Next architecture will be available in 2026. AMD also touted its AMD ROCm software stack.

- AMD also expanded its DPU lineup with the AMD Pensando Salina DPU and introduced the AMD Pensando Pollara 400, the first Ultra Ethernet Consortium-ready NIC.

- AMD launched AMD Ryzen AI PRO 300 Series processors, powering Microsoft Copilot+ laptops.