The launch of Nvidia's Project Digits, a desktop AI supercomputer for data scientists and AI researchers starting at $3,000, is just one example of a broader theme: AI training and inference are going to be decentralized to some degree.

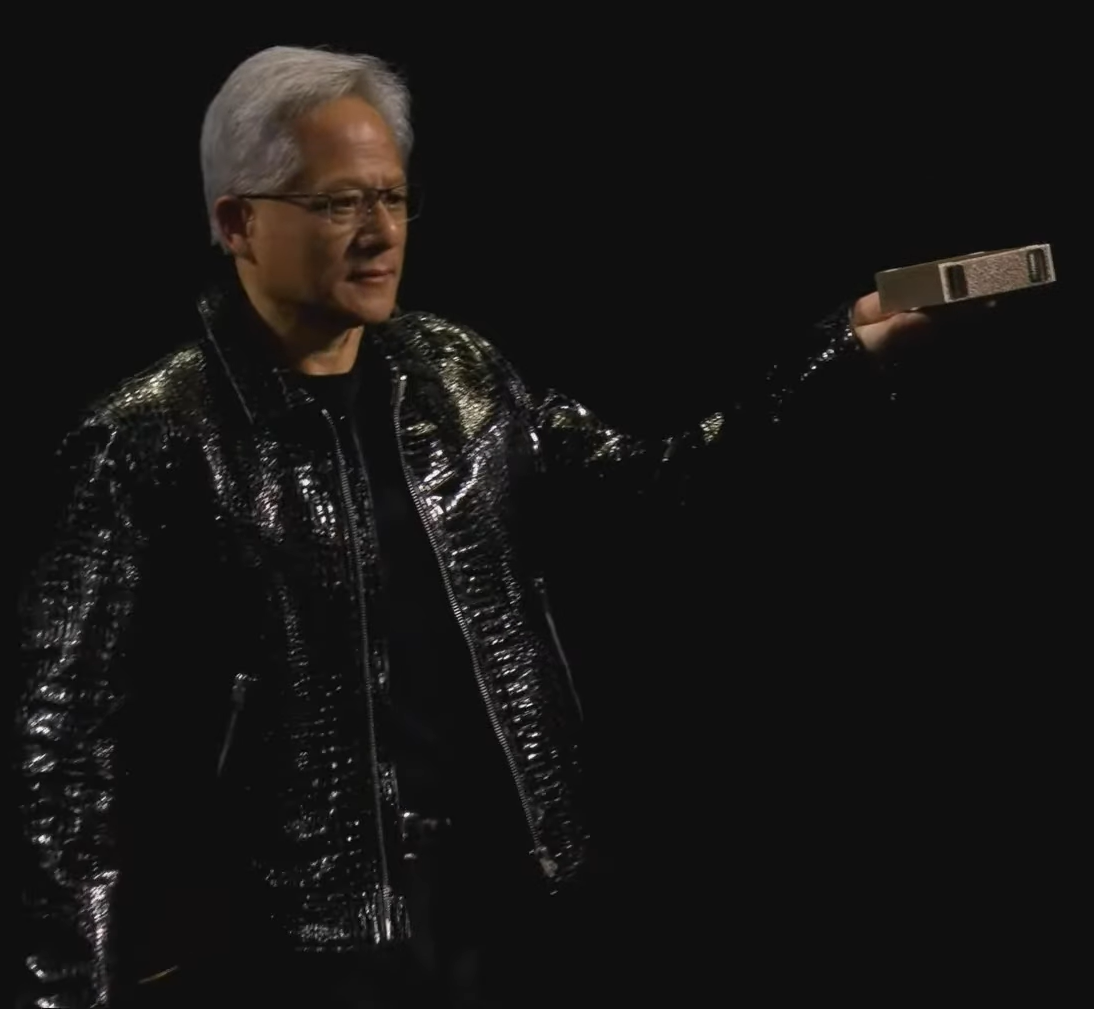

Nvidia's news, delivered by CEO Jensen Huang at CES 2025, highlights a key use case: offloading AI development to a high-powered desktop system. The general idea is that users can develop models and run inference on them locally, then deploy to the cloud or data center.

For enterprises weighing on-premises data centers and wrestling with cloud costs, Project Digits isn't a bad idea, especially since two of the systems can be networked together to run models with up to 405 billion parameters.

"With Project Digits, developers can harness the power of Llama locally, unlocking new possibilities for innovation and collaboration," said Ahmad Al-Dahle, Head of GenAI at Meta.

Constellation Research analyst Holger Mueller said:

"Digits poses lots of challenges, but if Nvidia overcomes them it has repercussions across the whole AI stack. Bigger has been better for AI and the question has always been this: Is it cost effective and can it be run on remote locations? Digits ends that discussion. It is Blackwell everywhere (and with that the soon to be announced Blackwell successor) as well."

But Nvidia's desktop supercomputer is just part of the mix.

Dell Technologies said it will be among the first to roll out systems powered by AMD's latest Ryzen processors. AMD launched its Ryzen AI Max Pro, Ryzen AI 300 Pro and Ryzen 200 Pro Series processors for business PCs. HP also outlined its HP ZBook Ultra G1a 14 mini workstation.

With the move, AMD is putting high-performance computing into thin laptops and looking to capture AI workloads on workstations. Ryzen AI Max Pro Series processors are designed for large engineering workloads and models.

Simply put, there should be enough AI compute in the field to network and leverage.

Intel also updated its Core lineup with a focus on business AI applications. For good measure, Qualcomm outlined its Snapdragon X series, which will bring AI and Copilot+ PCs to the masses at price points around $600.

How will this develop?

The systems shown at CES could be read as yet another effort to prod consumers and businesses into upgrading their PCs. The AI PC upgrade cycle will happen, but it has clearly been delayed. Instead, look upstream to Project Digits, which serves a real AI workload. Yes, enterprises will become more dependent on Nvidia, but developing and tweaking models locally and then tapping data center resources is likely to be cheaper and arguably more sustainable.

With all the cool kids (data scientists and AI wonks) buying supercomputer workstations, the rest of us will likely boost specs just to have the horsepower. Of course, the masses won't use that horsepower, but if a savvy enterprise can capture that compute at the edge, there are real returns ahead.

There are already signs that enterprises are starting PC upgrades, and more future-proof, AI-friendly systems are likely to seal the deal. For consumers, the AI PC upgrade cycle will be slower, but expect Qualcomm to capture real share.