Don't look now, but AI-optimized servers and infrastructure may be the trendiest corner of tech innovation. And the release cycle looks like it was ripped out of the Apple and Samsung playbooks.

For those who need a refresher, since the smartphone industry has been boring in recent years, here's the cadence the sector used to revolve around:

- Apple announces new software plans at WWDC.

- Apple launches a new iPhone with an integrated stack, new processors and updates that are billed as monumental but have actually been in Samsung devices for years.

- Tech buyers gobble up whatever iProduct comes out.

- Rinse and repeat year after year and collect money, with a dash of complementary products and services.

For you Android folks, you can swap in Samsung or Google for Apple.

That playbook is still in use, but let's face it: Smartphones are a bit of a yawner these days. The new hotspot is AI infrastructure, specifically AI servers.

Nvidia also happens to be the new Apple with an integrated stack of hardware, software and ecosystem to build AI factories and train large language models (LLMs).

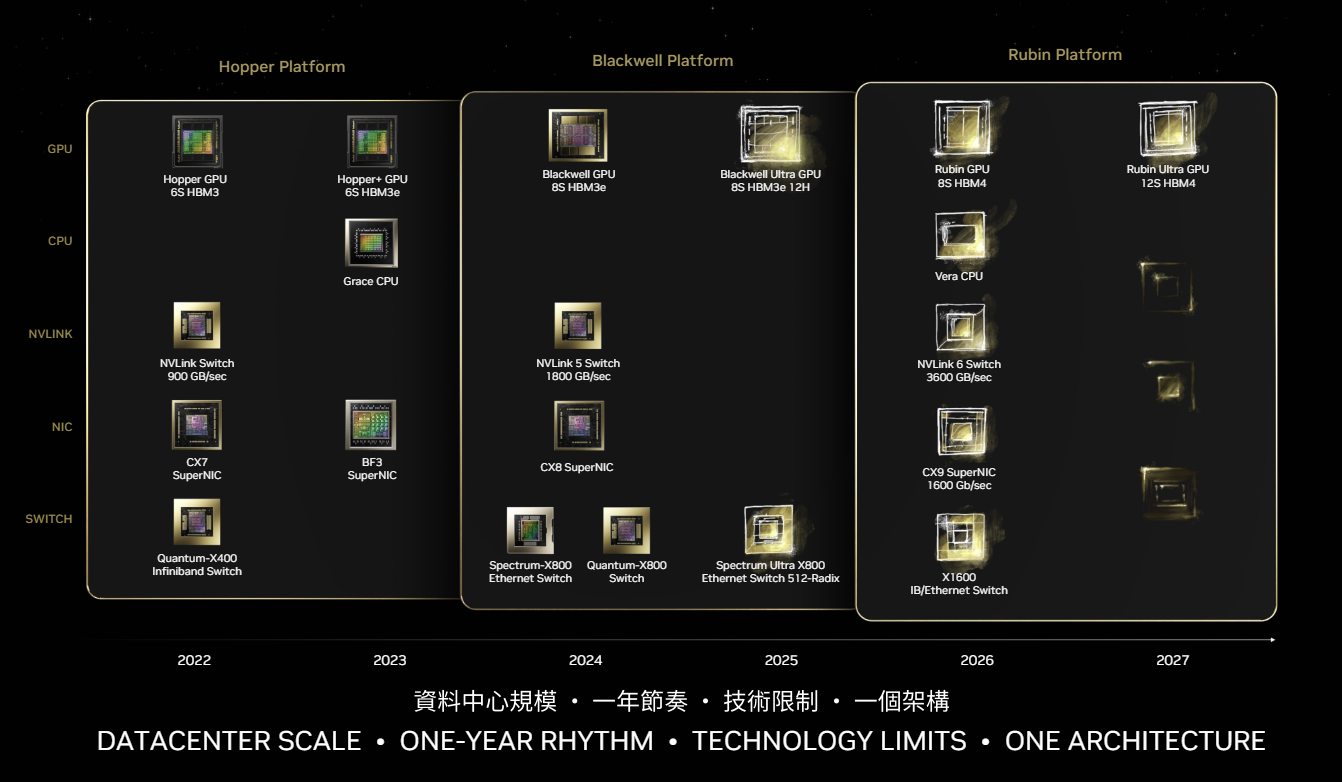

At Computex, Nvidia said it will move to an annual cycle of GPUs and accelerators along with a bunch of other AI-optimized hardware. "Our company has a one-year rhythm. Our basic philosophy is very simple: build the entire data center scale, disaggregate and sell to you parts on a one-year rhythm," said Nvidia CEO Jensen Huang.

This post first appeared in the Constellation Insight newsletter, which features bespoke content weekly and is brought to you by Hitachi Vantara.

Constellation Research analyst Holger Mueller said: "Nvidia is ratcheting up the game by going from one design in 2 years to one design per year. This cadence is a formidable challenge for R&D, QA and sourcing in a supply chain that's already constrained. We will see if AI hardware is immune from new offerings stopping the sale of the current offerings."

Just a few hours after Nvidia's keynote, AMD CEO Lisa Su entered the Computex ring with her own one-year cadence and roadmap. AMD, the perennial No. 2 chipmaker in most categories, is going to rake in gobs of money as the alternative to Nvidia.

The GPU may just be the new smartphone that drives tech spending. This AI stack also has a downstream effect on Nvidia partners such as Huang fave Dell Technologies as well as Supermicro and HPE.

You'd never know it from last week's stock drop, but Dell has been kinda cleaning up in the AI server category. Supermicro is doing well too. These OEMs will ride along with the annual GPU cadence. And now HPE is joining the parade as enterprises buy AI systems.

Jeff Clarke, Chief Operating Officer at Dell Technologies, said the backlog for AI-optimized servers was up 30% in the first quarter to $3.8 billion. The problem is the margins on those servers aren't up to snuff yet.

Lenovo is also seeing strong demand. Kirk Skaugen, President of Lenovo's Infrastructure Solutions Group, said the company's "visible qualified pipeline" was up 55% in its fiscal fourth quarter to more than $7 billion. Note that visible pipeline isn't backlog.

"In the fourth quarter, our AI server revenue was up 46%, year-to-year. On-prem and not just cloud is accelerating because we're starting to see not just large language model training, but retraining and inferencing," said Skaugen. "We'll be in time to market with the next-generation NVIDIA H200 with Blackwell. This is going to put a $250,000 server today roughly with eight GPUs, will now sell in a rack like you're saying up to probably $3 million in a rack."

Clarke noted that Dell Technologies will sell storage, services and networking around its AI-optimized servers. Liquid cooling will also be a hot area. He said:

"We think there's a large amount of storage that sits around these things. These models that are being trained require lots of data. That data has got to be stored and fed into the GPU at a high bandwidth, which ties in network. The opportunity around unstructured data is immense here, and we think that opportunity continues to exist. We think the opportunity around NICs and switches and building out the fabric to connect individual GPUs to one another to take each node, racks of racks across the data center to connect it, that high bandwidth fabric is absolutely there. We think the deployment of this gear in the data center is a huge opportunity."

Super Micro Computer CFO David Weigand has a similar take. "We've been working on AI for a long time, and it has driven our revenues the past two years. And now with large language models and ChatGPT, its growth has obviously expanded exponentially. And so, we think that will continue and go on," said Weigand. "We think it's going to be both higher volume and higher pricing as well, because there is no doubt about the fact that accelerated computing is here to stay."

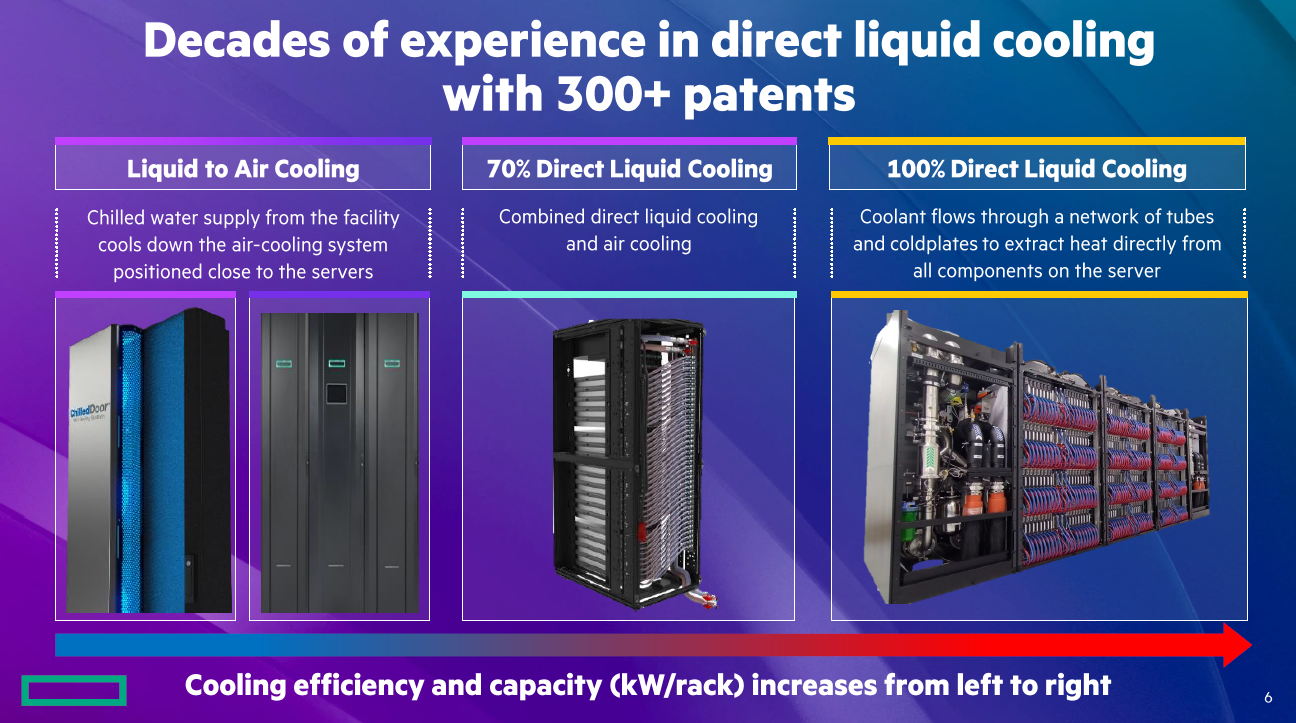

HPE's latest financial results topped estimates, and CEO Antonio Neri said enterprises are buying AI systems. HPE has traction with enterprise accounts, and its AI system revenue surged accordingly. Its plan is to differentiate with technologies like liquid cooling, one of three ways to cool systems, and Neri said HPE's cooling systems will be a differentiator as Nvidia Blackwell systems gain traction.

Neri said: "We have what I call 100% direct liquid cooling. And this is a unique differentiation because we have been doing 100% direct liquid cooling for a long time. Today, there are six systems in deployment and three of them are for generative AI. As we go to the next generation of silicon and Blackwell systems will require 100% direct liquid cooling. That's a unique opportunity for us because you need not only the IP and the capabilities to cool the infrastructure, but also the manufacturing side."

Indeed, there's a reason we're watching the Huang and Su keynotes while dozing off as yesterday's innovation juggernauts speak at various conferences. AI infrastructure is just more fun.

And just to bring this analogy-ridden analysis home, it's worth noting that Intel spoke at Computex too. Yes, Intel is playing from behind on AI accelerators and processors, but it still has a role in the market. The role? Midmarket player for the most cost-conscious tech buyer.

If Nvidia is Apple and AMD is more like Google/Samsung, then Intel is positioned to play Motorola and the not-quite-premium phone role when it comes to AI. At Computex, Intel CEO Pat Gelsinger walked through the cadence of AI PC possibilities and new Xeon server chips. Then Intel said this about its Gaudi accelerators, with Gaudi 3 expected to be available in the third quarter:

"A standard AI kit including eight Intel Gaudi 2 accelerators with a universal baseboard (UBB) offered to system providers at $65,000 is estimated to be one-third the cost of comparable competitive platforms. A kit including eight Intel Gaudi 3 accelerators with a UBB will list at $125,000, estimated to be two-thirds the cost of comparable competitive platforms."
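Intel's ratios can be run backward to see roughly what it assumes a rival eight-accelerator kit costs. This back-of-the-envelope check assumes the "one-third" and "two-thirds" figures are exact and divide into a single list price; Intel didn't name the competing platforms, and the system it compares against Gaudi 2 may not be the same one it compares against Gaudi 3.

$$
C_{\text{rival vs. Gaudi 2}} \approx \frac{\$65{,}000}{1/3} = \$195{,}000
\qquad
C_{\text{rival vs. Gaudi 3}} \approx \frac{\$125{,}000}{2/3} = \$187{,}500
$$

Under those assumptions, both comparisons land in the same neighborhood of roughly $190,000, which suggests Intel is pricing both kits against a similarly priced eight-GPU system.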

ASUS, Foxconn, Gigabyte, Inventec, Quanta and Wistron join Dell, HPE, Lenovo and Supermicro with plans to offer Intel Gaudi 3 systems.

Bottom line: AI systems will create a big revenue pie even if most of the spoils go to Nvidia. "I'm very pragmatic about these things. Today in generative AI, the market leader is Nvidia and that's where we have aligned our strategy. That's where we have aligned our offerings," said Neri. "Other systems will come in 2025 with other accelerators."
