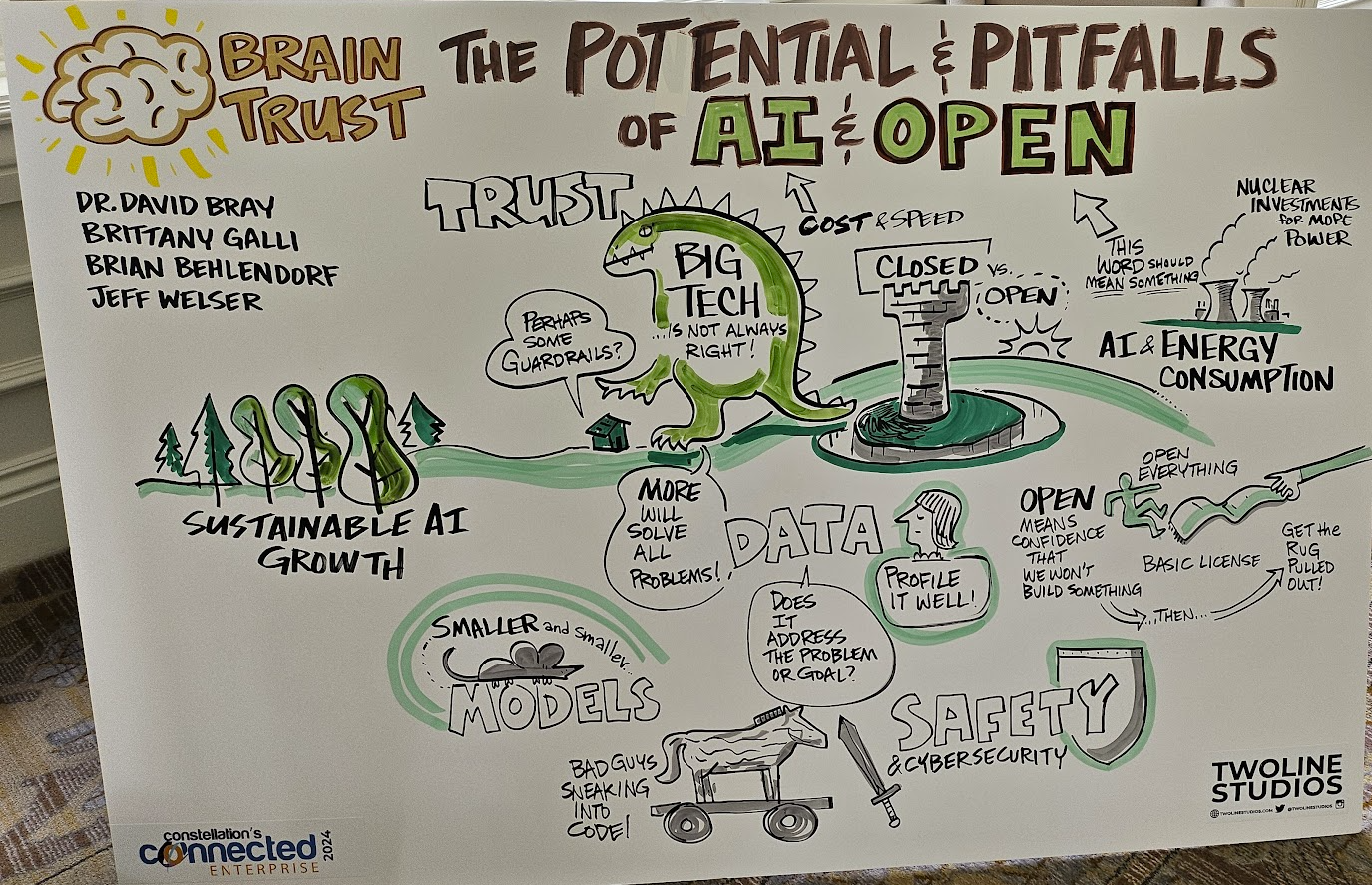

The cost of artificial intelligence inference and training will fall, and enterprises need to question the current groupthink that revolves around a never-ending data center buildout cycle.

Speaking on a panel at Constellation Research's Connected Enterprise conference, Brian Behlendorf, CTO at the Open Wallet Foundation and Chief AI Strategist at The Linux Foundation, said "there's a lot of irrational exuberance about the amount of investment that's going to be required both to train models and do inference on them."

Behlendorf said the capacity buildout is going to lead to indigestion.

"I see a lot of enterprises that are cutting other programs, laying off staff and doing everything to conserve capital to be able to collect all the data in the world and build dumb models that they don't really know what they're going to do."

"Yet, the cost of training AI is going to come down dramatically. There are a raft of 10x improvements in training and inference costs, purely in software. We're also finding better structured data tends to lead to higher quality models at smaller token sizes."

Behlendorf added that he expects commodity GPU hardware systems to emerge in the next few years. He noted that the idea that the industry is going to need nuclear reactors and an ongoing data center buildout cycle to train large language models is foolhardy.

"A more sober analysis is that you need to build capacity inside your organization at a personnel level and skills level on how to use these technologies and hold on the massive expansion of data centers," he said.

More from CCE 2024:

- How leaders need to think about AI, genAI

- 2025 in preview: What Constellation Research’s analysts say

- Takeaways on successful AI, generative AI projects

The theme of the panel revolved around open-source models and their role in generative AI, and panelists agreed that costs will come down due to open technologies. The upshot is that the Nvidia-OpenAI hammerlock on generative AI isn't going to last.

Other key points from the panel include:

Data hoarding doesn't work. Much of the genAI buildout revolves around the idea that data demand is insatiable. The common view is that you can't have enough data. Jana Eggers, CEO of Nara Logics, disagreed:

"More data isn't going to solve your problem and the tech industry hasn't quite gotten it yet. Boards think that you should just go out and acquire more data."

Eggers said that enterprises need to profile the data they have and what's being acquired. Quality matters more than quantity. "Enterprises aren't even doing the basic checks on their own data or open data," said Eggers. "At the very start we tell our customers to profile their data."
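As a rough illustration of the kind of basic checks Eggers describes, here is a minimal Python sketch of a data profile. It is not a method described on the panel: the function name `profile_rows`, the record layout, and the choice of checks (missing values, distinct values, mixed types, duplicate rows) are all assumptions made for this example.

```python
from collections import Counter


def profile_rows(rows):
    """Return basic quality stats for a list of dict records (hypothetical helper)."""
    # Collect every column name that appears in any record.
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        # Treat None and empty strings as missing (an assumption for this sketch).
        present = [v for v in values if v is not None and v != ""]
        report[col] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            # More than one type in a column often signals dirty data.
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    # Count rows that exactly repeat an earlier row.
    seen = Counter(tuple(sorted(row.items())) for row in rows)
    duplicate_rows = sum(count - 1 for count in seen.values())
    return {"rows": len(rows), "duplicate_rows": duplicate_rows, "columns": report}


if __name__ == "__main__":
    # Made-up sample records, for illustration only.
    sample = [
        {"id": 1, "region": "EMEA", "revenue": 120.0},
        {"id": 2, "region": "", "revenue": "n/a"},
        {"id": 2, "region": "", "revenue": "n/a"},
        {"id": 3, "region": "APAC", "revenue": None},
    ]
    print(profile_rows(sample))
```

Even a report this small surfaces the problems that adding more data would not fix: a column that mixes numbers with placeholder strings, blank fields, and repeated records.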

It remains to be seen how long the view that data hoarding pays off will last.

Open models will lower costs, but hygiene will be an issue. Brittany Galli, CEO of BFG Ventures, said open models will improve efficiencies in AI, but hygiene will be a problem. "There's a ton of bad data and it's causing a lot of problems. You think that open models equal more transparency and higher efficiency, but the problem is hygiene," said Galli. "There is no perfect model that's going to be more accurate and unbiased. It's going to take time."

Invest smartly because you have to invest in AI. "I think we're to that point with AI that we know it's needed and you have to build or buy or get run over," said Galli. "There are no other options."

Be aware of what's really open about models and frameworks. Behlendorf said AI builders need to read the fine print. "We need to apply rigor to the use of the word open around AI," he said. Behlendorf said so-called open models often have a series of restrictions and lack transparency.

Models will also become smaller and more efficient. Jeff Welser, Vice President of IBM Research at the Almaden Lab, said smaller models and a wide selection of them will increase efficiency. "One reason you'll want open models is that you don't want to train them. You can choose to train for a specific portion or use case and then string them together," he said.
