Generative AI projects in the enterprise have moved beyond the pilot stage, with many use cases going into production. Scaling has been a challenge, but CxOs are maturing in how they approach genAI.

There's no census of generative AI projects in the enterprise, but directionally you can follow the trend. Datadog found in a recent report that GPU instances (a good proxy for genAI) are now 14% of cloud computing costs, up from 10% a year ago. Datadog's report was based on AWS instances, so that percentage may be higher once Google Cloud, Microsoft Azure and, increasingly, Oracle Cloud are considered.

In recent weeks, I've made the rounds and heard from various enterprise customers talking about genAI. Here's a look at what's happening at the midway point of 2024 with genAI projects and what'll hopefully be a few best practices to ponder.

The big decisions are being made now by the business with technology close behind as a consideration. Use cases will proliferate, but they'll be scrutinized based on cost savings and revenue growth.

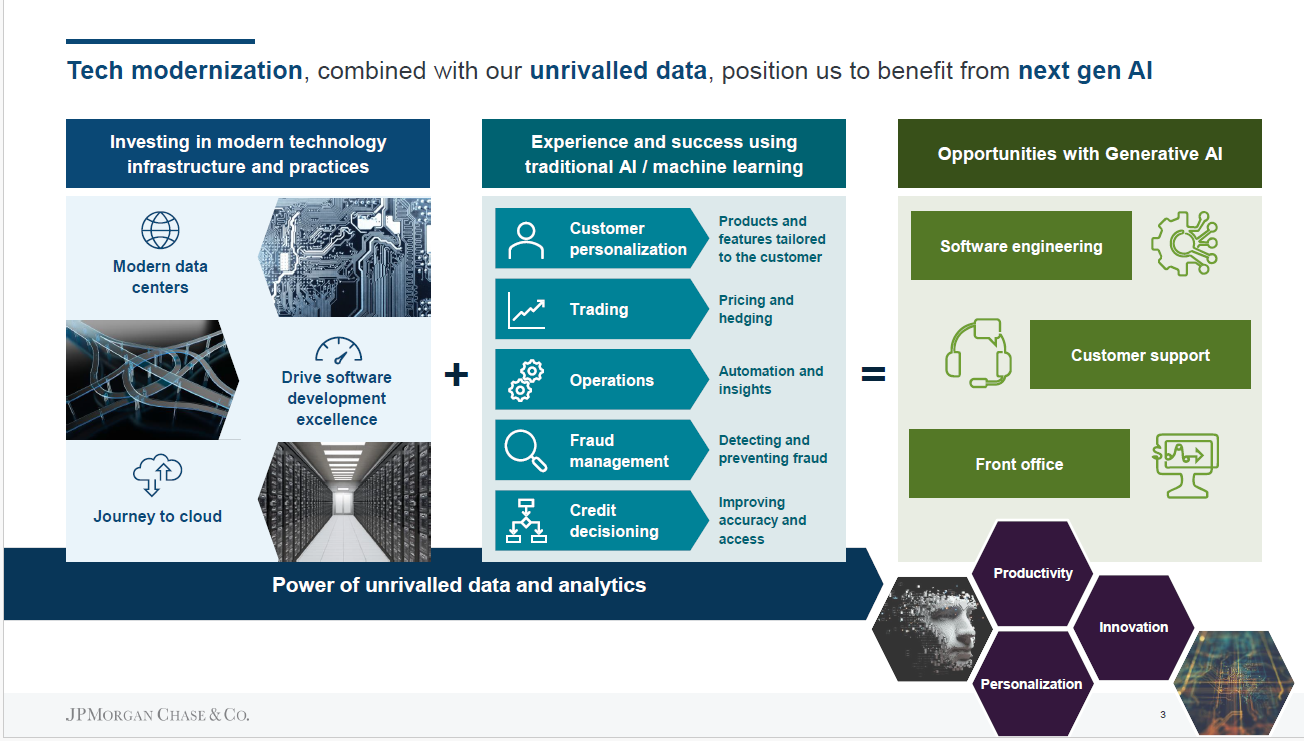

"It's not understanding AI. It's understanding how it works," said Jamie Dimon, CEO of JPMorgan Chase. By the end of the year, Dimon estimated that JPMorgan Chase will have about 800 AI use cases across the company as management teams become better at deploying AI. He added:

"We use it for prospect, marketing, offers, travel, notetaking, idea generation, hedging, equity hedging, and the equity trading floors, anticipating when people call in what they're calling it for, answering customer, just on the wholesale side, but answering customer requests. And then we have – and we're going to be building agents that not just answers the question, it takes action sometimes. And this is just going to blow people's mind. It will affect every job, every application, every database and it will make people highly more efficient."

Build a library of use cases. Vikram Nafde, CIO of Webster Bank, said at a recent AWS analyst meeting that it makes sense to build a library of genAI use cases. This library is effectively a playbook that can scale use cases across an enterprise. He said:

"Almost everyone has 10 use cases to try. I have hundreds of use cases. I've created this library of use cases. How do I prioritize? How do I engage my inner business? How do I engage more? Which are the ones that are worth an experiment? There are other costs and not only in terms of money or resource, but like people process and so forth."

Nafde said genAI use cases are viewed through a broader lens. Sometimes, a genAI use case is just a phase of a broader project. Use cases also must play well with multiple datasets and AI technologies and are vetted by an enterprise-wide AI council.

GenAI is a tool to solve problems, nothing more. One change in the last year is that generative AI is seen more as a tool for solving business problems than as a magical technology. Unum CTO Gautam Roy said at a recent AWS analyst event:

"We don't think about AI or genAI as something separate. We are going to use it to solve problems. The first question we ask is what problem are we solving? Not everything is changed by AI. Sometimes it's a process change. Maybe it's an education or training issue. It may not be a technology issue. We ask what the problem is we are solving and then use innovation and technology to solve it."

This post first appeared in the Constellation Insight newsletter, which features bespoke content weekly and is brought to you by Hitachi Vantara.

Data platforms are converging as data, AI and business intelligence converge. Databricks is working to show it has the data warehousing chops to complement its AI and data platform. Snowflake is leveraging its data warehouse prowess to get into AI.

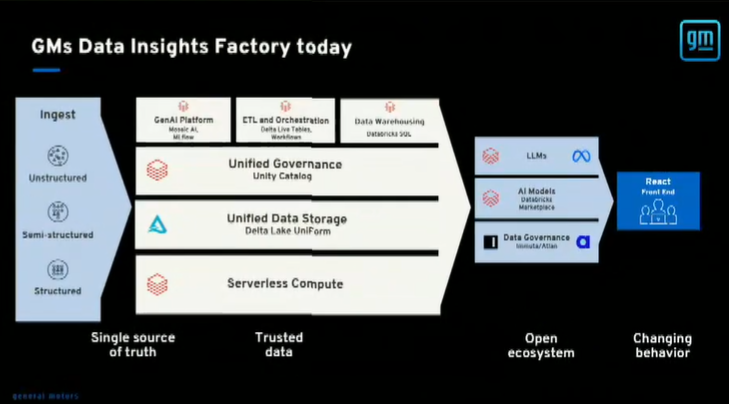

Speaking at Databricks Summit, Brian Ames, senior manager of production AI and data products at General Motors, said the company has stood up its data factory and plans to layer in generative AI capabilities in the next year. "GM has a ton of data. That's not the problem. We had a beautiful on prem infrastructure. Why change? Well, two reasons. Number one was data efficiency. More importantly, the world changed. And GM understood that if we didn't have AI and ML in our arsenal, we could find ourselves at a competitive disadvantage," he said.

Smaller enterprises will be using AI everywhere via platforms. Dimon noted that smaller banks will be using AI too through AWS, Fiserv and FIS. You can assume the same thing for other smaller enterprises across verticals.

Generative AI projects require a lot of human labor that's often overlooked, said Lori Walters, Vice President, Claims and Operations Data Science at The Hartford. "We spend a lot of time talking about the cost to build, about the training costs and the inference cost. But what we're seeing is the human capital associated with genAI is significant. Do not underestimate it," she said.

AI is just part of the management team now. Multiple enterprises have created centralized roles to oversee AI. Often, the executive in charge of AI is also in charge of data and analytics. Again, JPMorgan Chase's approach to AI is instructive. There's a central organization, but each business in the banking giant has AI initiatives.

Daniel Pinto, Chief Operating Officer and President at JPMorgan Chase, said the company has moved to transform its data so it's usable for AI and analytics. There will also be a central platform to leverage that data across business units. "AI, and particularly large language models, will be transformational here," said Pinto.

Compute is moving beyond just the cloud. Judging from hardware vendors' results, on-premises AI-optimized servers are selling well. Enterprises are becoming much more sophisticated about how they leverage various compute instances to optimize price/performance. This trend has created some interesting partnerships, notably Oracle and Google Cloud.

Optionality is the word. Along with on-premises, private cloud and public cloud options, enterprises will mix multiple models and processors. In a recent briefing, AWS Vice President of Product Matt Wood said: "Optionality is disproportionately important for generative AI. This is true today, because there's so much change in so much flux."

GenAI projects will have to be self-funded. At a recent Cognizant analyst meeting, a bevy of customers talked about transformation, technical debt and returns. Cognizant CEO Ravi Kumar said the technology services firm's customers are preparing for generative AI, but need to quantify productivity gains to justify costs. Kumar's take was echoed repeatedly by the firm's customers. "Discretionary spending in tech over the last 25 years has happened in the low interest rate regime where there was no cost of capital. Today you need a business use case for new projects," said Kumar.

I don't doubt that business case argument for genAI. Enterprise software companies have been talking about the delay in genAI profit euphoria in their most recent quarters. No enterprise is going to pay multiple vendors copilot taxes unless there are returns attached.

- Copilot, genAI agent implementations are about to get complicated

- Generative AI spending will move beyond the IT budget

- Enterprises Must Now Cultivate a Capable and Diverse AI Model Garden

- Secrets to a Successful AI Strategy

- Return on Transformation Investments (RTI)

The transformation journey to the cloud isn't done and may need to be accelerated to reap genAI rewards. Pinto said JPMorgan Chase is revamping its technology stack.

"It's been a big, long journey, and it's a journey of modernizing our technology stacks from the layers that interact with our clients to all the deeper layers for processing. And we have made quite a lot of progress in both by moving some applications to the cloud, by moving some applications to the new data centers, and creating a tool for our developers that is a better experience. And we are making progress. The productivity of this organization today is by far higher than it was several years ago, and still a long way to go. We have optimized the infrastructure that we use, the cost per unit of processing and storage."

- JPMorgan Chase: Digital Transformation, AI and Data Strategy Sets Up Generative AI

- JPMorgan Chase: Why we're the biggest tech spender in banking

- JPMorgan Chase CEO Dimon: AI projects pay for themselves, private cloud buildout critical

- GenAI projects may be sucked up into the transformation, digital core vortex

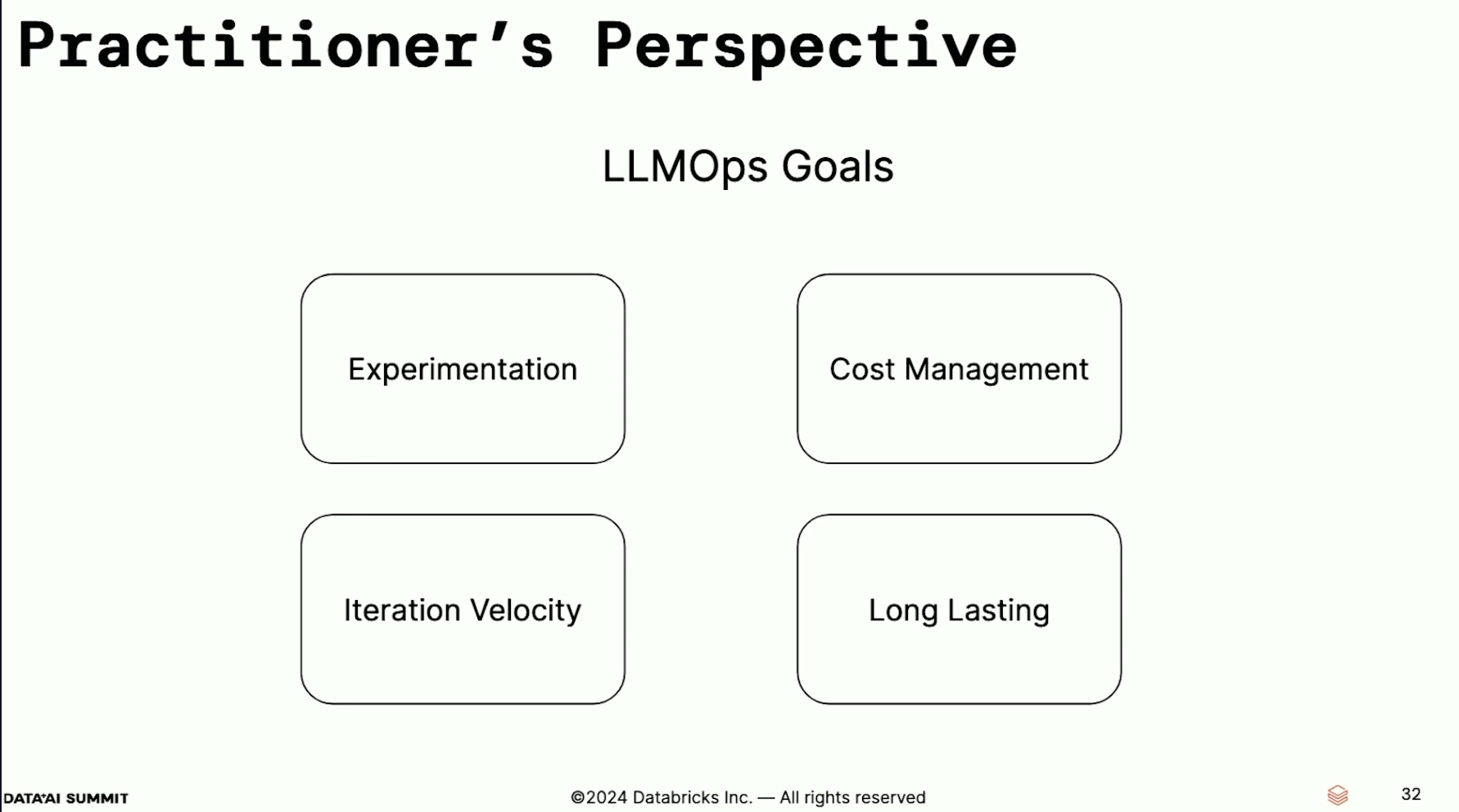

LLMOps is emerging and will converge with MLOps in the future. Hien Luu, Senior Engineering Manager at DoorDash, who is responsible for building out scalable genAI at the company, gave a talk on LLMOps at Databricks Summit. Luu said LLMOps is becoming critical because working with LLMs and GPUs isn't cheap. He expects that MLOps and LLMOps platforms will converge.

Focus on long-lasting use cases and business value instead of infrastructure. Luu's big advice is: "Things are going to evolve rapidly so keep that in mind. Identify your goals and needs based on your company's specific environments and use cases. For now, focus less on infrastructure and more on long-lasting value components."

GenAI can speed up your analytics and data insights. Volume, surfacing insights, inflexibility and time are all analytics challenges, said Danielle Heymann, Senior Data Scientist at the National Institutes of Health. Speaking at Databricks Summit, Heymann said genAI is being explored to streamline data handling, uncover patterns, adjust and evolve, accelerate processing time and conduct quality assurance functions. NIH's National Institute of Child Health and Human Development is using genAI to process grant applications and streamline review processes. GenAI is also being used to classify data and conduct QA with a bot.

More on genAI dynamics:

- OpenAI and Microsoft: Symbiotic or future frenemies?

- AI infrastructure is the new innovation hotbed with smartphone-like release cadence

- Don't forget the non-technical, human costs to generative AI projects

- GenAI boom eludes enterprise software...for now

- The real reason Windows AI PCs will be interesting

- Financial services firms see genAI use cases leading to efficiency boom