Amazon Web Services launched AWS AI Factories, said Trainium 3 was generally available and outlined plans for Trainium 4.

The focus on custom silicon lands as AWS emphasizes that it is still a strong partner to Nvidia and outlines new instances for the latest Nvidia GPUs.

During a keynote at re:Invent 2025, AWS CEO Matt Garman followed up on a string of big infrastructure announcements, including plans to invest $50 billion in US government high performance computing and AI data centers, the launch of Project Rainier for Anthropic and a deal with OpenAI.

Garman said AI infrastructure will require new building blocks and processes to create agents. "AI assistants are starting to give way to AI agents that can perform tasks and automate on your behalf. This is where we're starting to see material business returns from your AI investments," said Garman. "I believe that the advent of AI agents has brought us to an inflection point in AI's trajectory. It's turning from a technical wonder into something that delivers us real value. This change is going to have as much impact on your business as the internet or the cloud."

AWS has added 3.8 gigawatts of capacity, with Trainium growth of more than 150%, and has increased its network backbone by 50%. “In the last year alone, we've added 3.8 gigawatts of data center capacity, more than anyone in the world. And we have the world's largest private network, which has increased 50% over the last 12 months to now be more than 9 million kilometers of terrestrial and subsea cable,” said Garman during his keynote.

More from re:Invent 2025

- AWS adds AI agent policy, evaluation tools to Amazon Bedrock AgentCore

- AWS launches Amazon Nova Forge, Nova 2 Omni

- AWS launches AI factory service, Trainium 3 with Trainium 4 on deck

- AWS Transform aims for custom code, enterprise tech debt

- AWS, Google Cloud engineer interconnect between their clouds

- AWS Marketplace adds solutions-based offers, Agent Mode

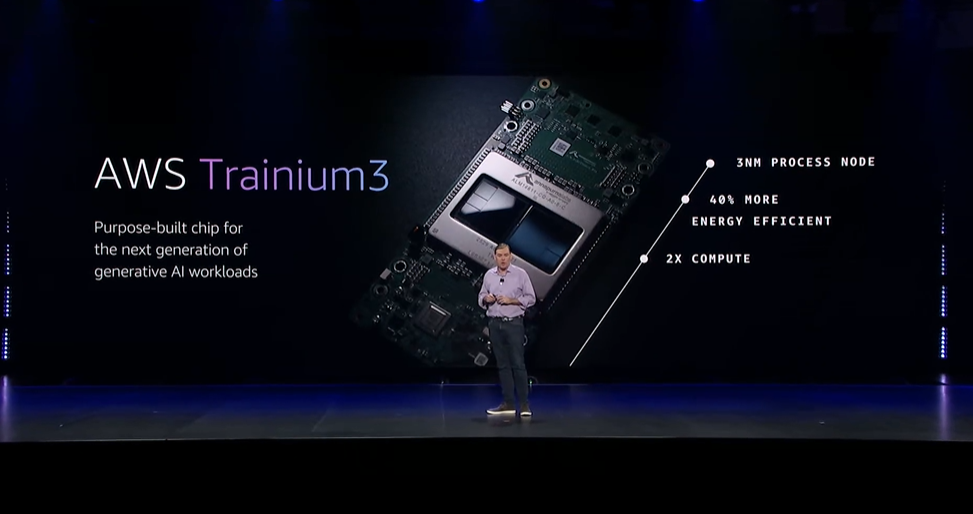

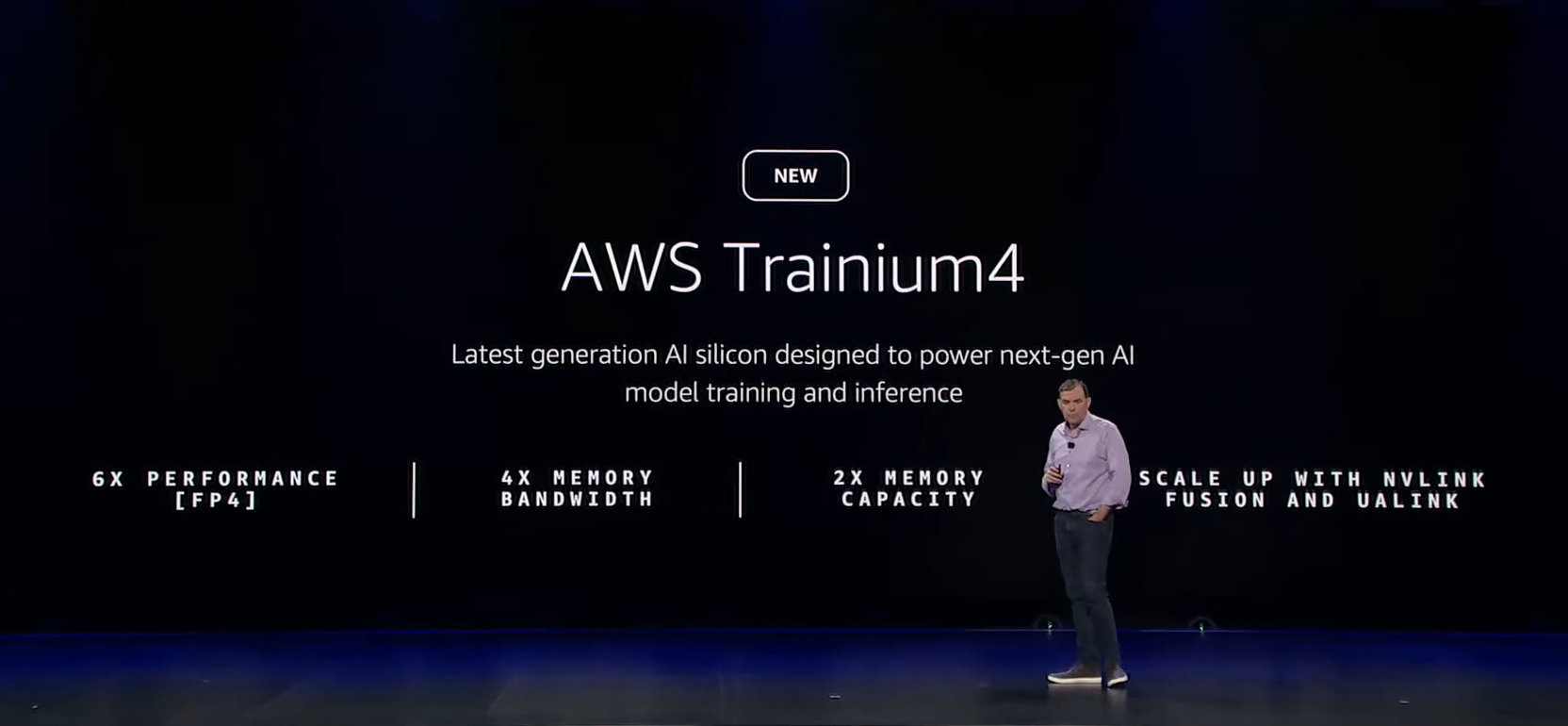

The launch of Trainium 3 was telegraphed on Amazon’s earnings call, but AWS also previewed Trainium 4. In a messaging twist, AWS noted that Trainium, which originally launched as an AI model training chip, is also being used heavily for inference.

Garman said AWS has already deployed more than 1 million Trainium processors and is selling them as fast as they can be produced.

Among the details:

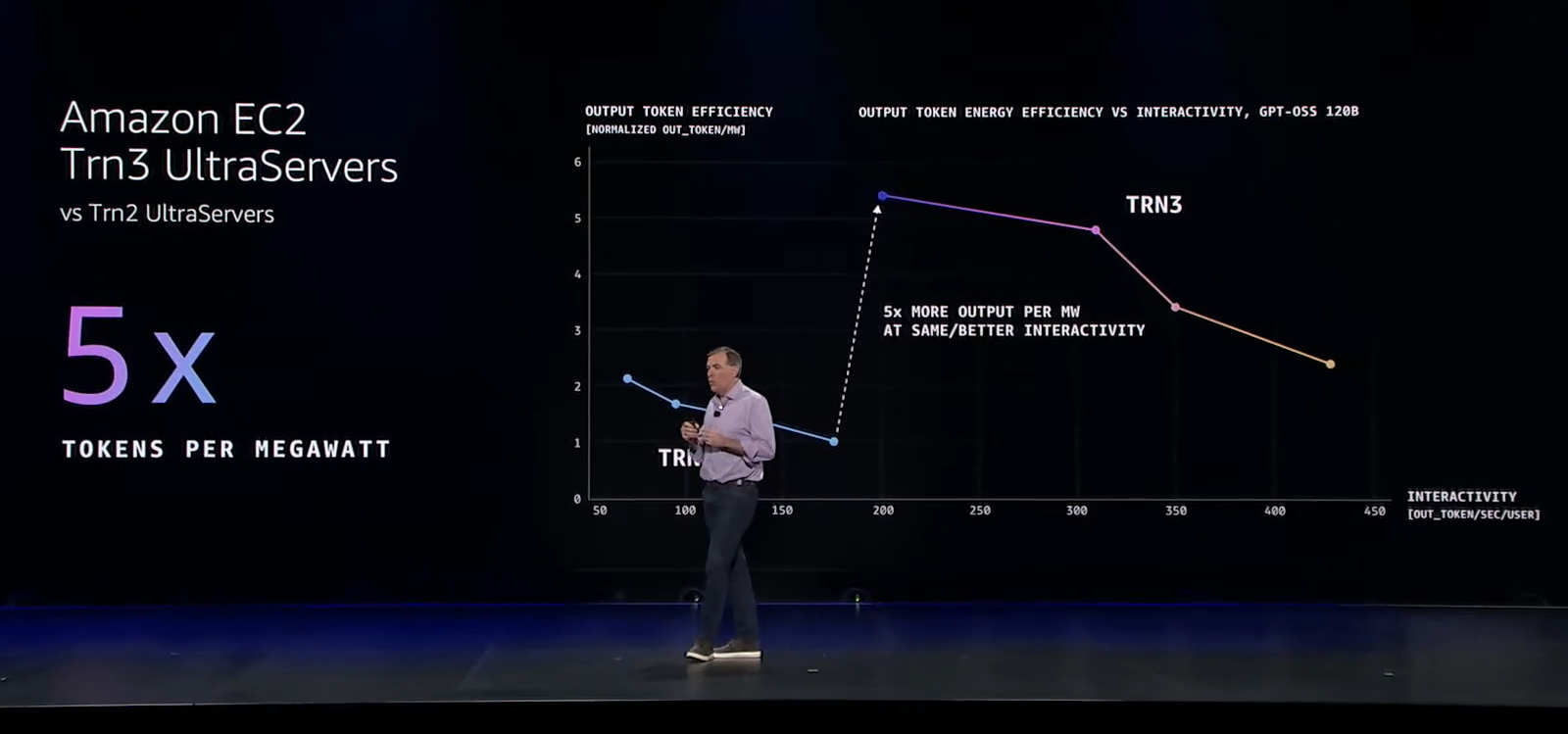

- Trainium 3 and UltraServers will offer the best price-performance for large-scale AI training and inference. Compared to Trainium 2, AWS Trainium 3 and UltraServers will have 4.4x more compute, 3.9x higher memory bandwidth and 3.5x more tokens per megawatt.

- Garman said AWS has seen big performance gains by installing Trainium 3 in its UltraServers.

- Trainium 4 will build on Trainium 3 with 6x the performance (fp4), 4x the memory bandwidth and 2x the memory capacity.

AWS' custom silicon will in part power AWS AI Factories, which also launched at re:Invent. AWS AI Factories are customer-specific AI infrastructure built, scaled and managed by AWS.

The general idea behind AWS AI Factories is that the cloud provider can take the expertise from the projects behind the Anthropic, OpenAI and Humain deals and democratize AI factories for large enterprises and the public sector.

“We're enabling customers to deploy dedicated AI infrastructure from AWS in their own data centers for exclusive use for them,” said Garman. “AWS AI factories operate like a private AWS region, letting customers leverage their own data center space and power capacity that they've already acquired. We also give them access to leading AWS AI infrastructure and services.”

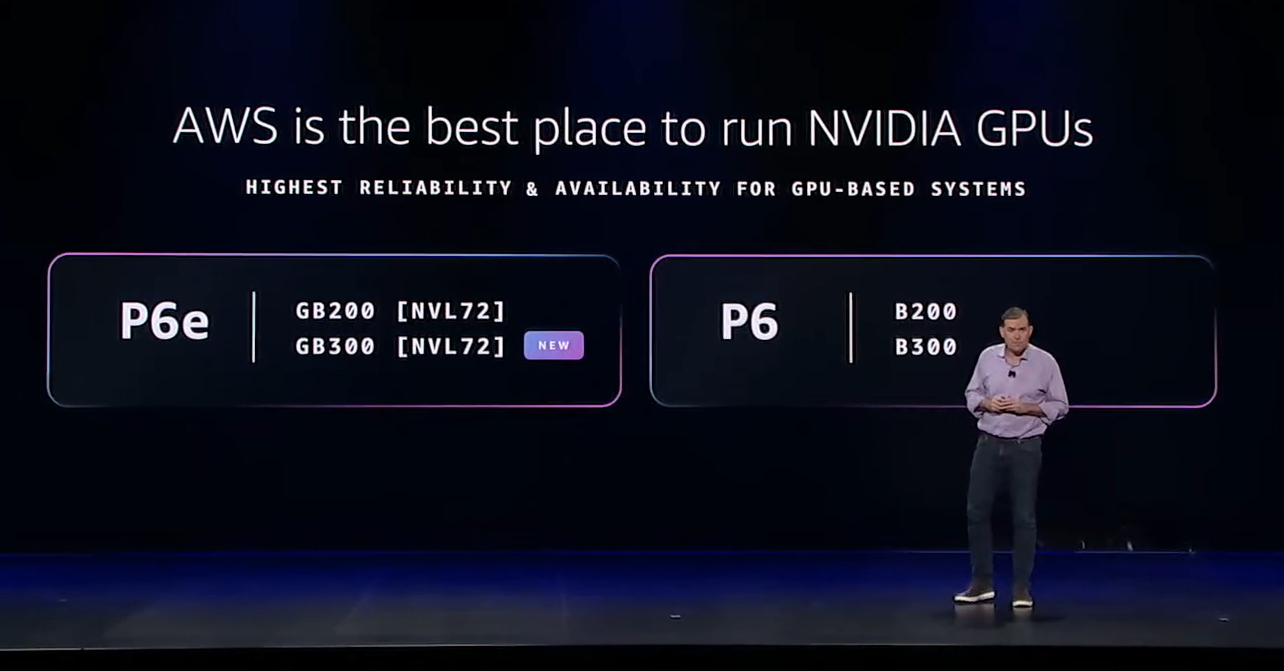

Garman also made a point of highlighting Nvidia instances. The pitch is that AWS is the best place to run Nvidia for reliability, uptime and availability.

AWS launched P6e instances based on Nvidia's GB200 and GB300 AI accelerators. These instances are an upgrade over P6 instances based on B200 and B300.