Google launched Gemini 3, its latest model, across its Gemini app, search services, Gemini API, AI Studio and Google Cloud's Vertex AI and Gemini Enterprise. The company also highlighted how Gemini 3 can provide answers with a generative visual user interface.

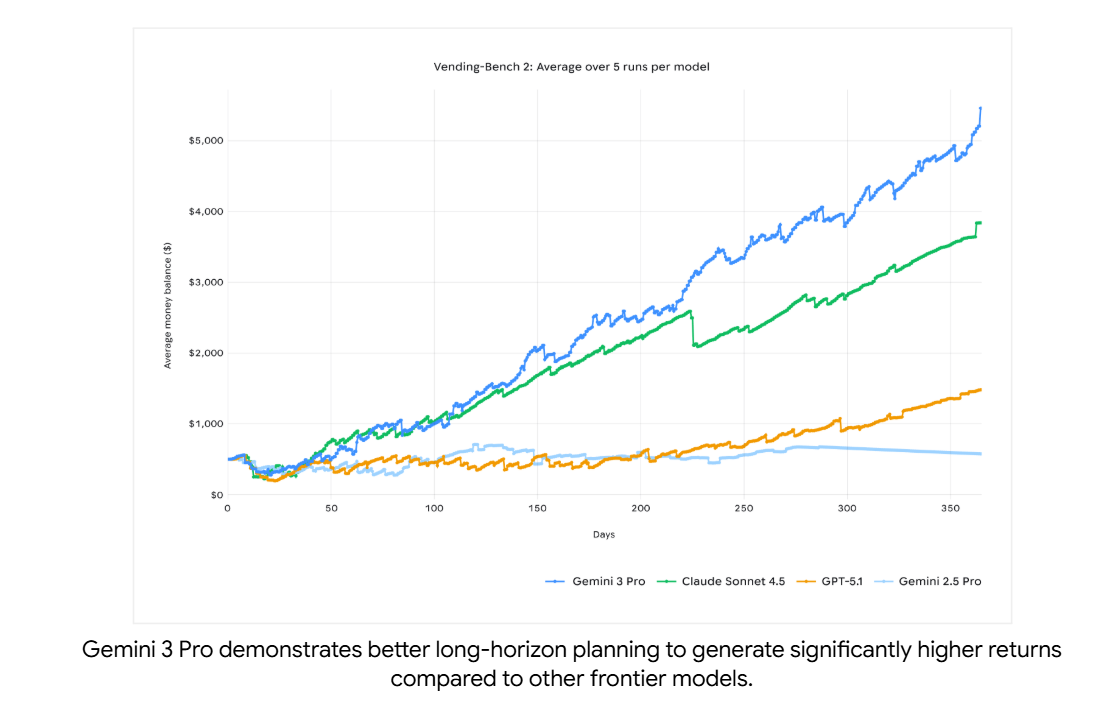

Gemini 3 outperforms Gemini 2.5 Pro in both performance and capabilities. For instance, Gemini 3 scores well ahead of its predecessor on benchmarks covering Humanity's Last Exam, visual reasoning puzzles, scientific knowledge and coding.

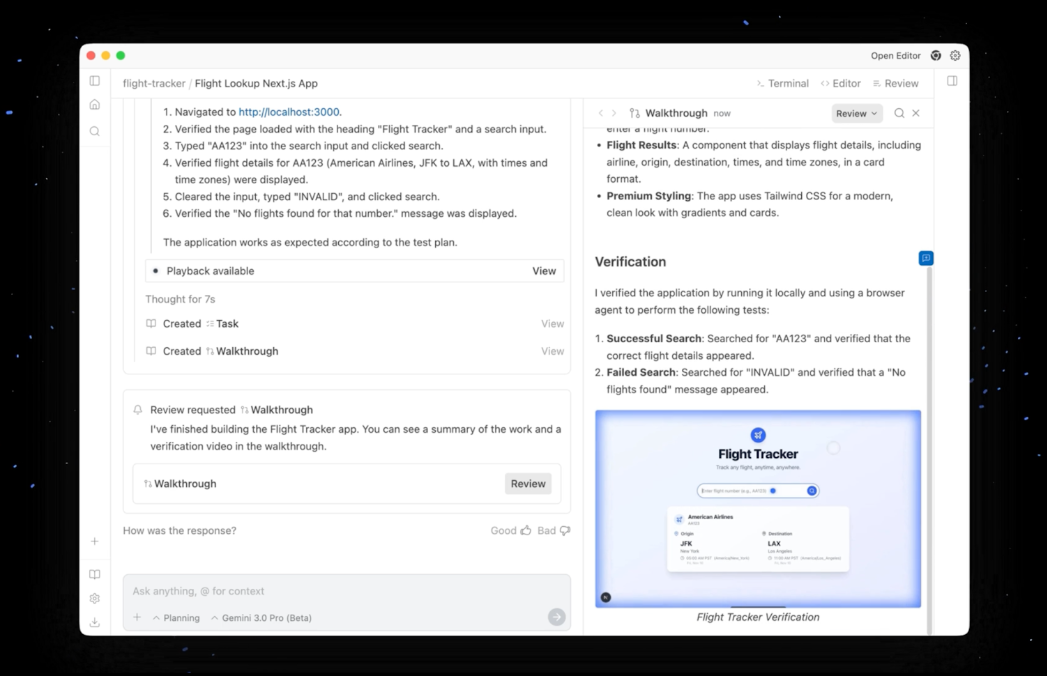

In addition, Google launched Google Antigravity, a developer environment for macOS, Windows and Linux. Google Antigravity is an effort to "push the frontiers of how the model and the IDE can work together," said Koray Kavukcuoglu, CTO of Google DeepMind and Chief AI Architect at Google, in a briefing.

Kavukcuoglu said Google Antigravity aims to do the following:

- Offer a dedicated agent interface that gives developers the ability to use autonomous agents that can operate through the code editor, terminal or browser.

- Build an application from a single prompt, create subtasks and then execute them.

- Create progress reports and tasks, and verify work inside Chrome. Antigravity will also present a walkthrough of how the final product works.

- Be available in public preview on macOS, Windows and Linux.

Combined with Gemini 3, Antigravity lets developers "use these models to learn, build and plan anything they want to do."

While Google Antigravity appeals to enterprises and developers, the big takeaway from the Gemini 3 launch is that Google can deliver new models at scale across its platforms all at once. The Gemini app now has more than 650 million users per month and Gemini has more than 13 million developers.

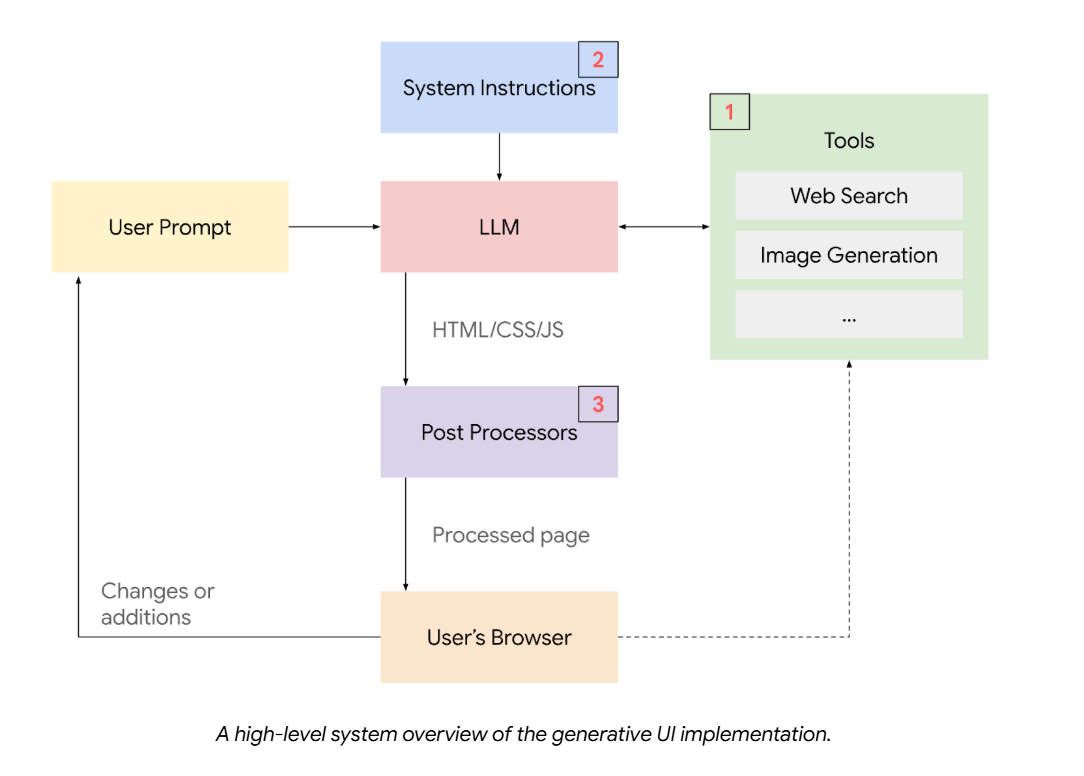

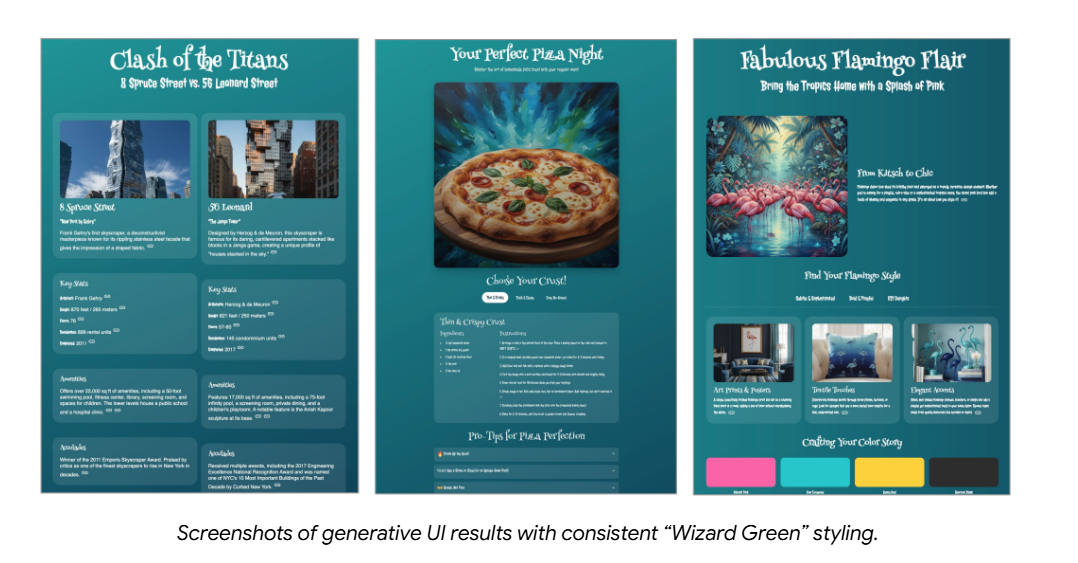

The main theme from Google isn't just how Gemini 3 performs against benchmarks, but its ability to make its answers accessible. Tulsee Doshi, Senior Director of Product Management, Gemini at Google DeepMind, said "Gemini 3 is responding with a level of depth and nuance we haven't seen before" because it can seamlessly transform information from multiple formats.

Doshi also said Google will release Gemini 3 Deep Think, its enhanced reasoning model, to safety testers before making it broadly available to Google AI Ultra subscribers. The goal for Google is to ensure Gemini 3 evolves from an answering tool to a partner that can help you "learn a new skill, build a creative project or just plan your life."

Here's what stood out for Gemini 3.

- Visual Layout. In Google Labs, Gemini 3 can spin up visual layouts with its answers. If you ask a question such as how to plan a three-day trip to Rome, you'll get a designed interface instead of just text. Gemini 3 can create interactive widgets and options for exploring different scenarios, along with images, tables and text.

- Dynamic View. Gemini 3 can use its coding ability to create interactive experiences on the fly. "When we talk about multi-modality, it's not just about how Gemini 3 can understand input, it's also how it can output things in entirely new ways," said Josh Woodward, VP, Google Labs, Gemini and AI Studio. Dynamic View will also be in Google Labs to collect feedback.

- Gemini Agent. This feature can take a request and break it down into a plan. For instance, you can ask Gemini Agent to manage your inbox and cluster tasks and messages. "We think it's our first step to a true generalist agent that will be able to work across your different Google products," said Woodward.

Most folks will wind up experiencing Gemini 3 in AI Mode search. Google executives said the goal is to let users ask nuanced, natural-language questions without knowing the right keywords. You'll also be able to ask about pictures. Google's AI Mode will be able to route queries to different models based on difficulty.

Constellation Research analyst Holger Mueller said:

"Google keeps innovating fast with Gemini 3 shipping with better reasoning and better coding. Google is also making Gemini 3 available across the Google offerings from search to Vertex AI either immediately or in the near future. Google heavily leverages its lead in multimodal AI and Gemini shows that. Users and tasks seamlessly flow between different modalities. Google also released its agentic framework Antigravity for developers – with the agent running more autonomously than ever before. Google continues its lead as a multimodal reasoning and coding model with Gemini 3."

For enterprises, Google Cloud cited a wide range of Gemini 3 customers including Box, Cursor, Figma, Shopify and Thomson Reuters.