Large language models (LLMs) have reached the phase where advances are incremental and the models are quickly becoming commodities. Simply put, it's the age of good-enough LLMs, where the innovation will come from orchestrating them and customizing them for specific use cases.

This development is great for enterprises, which will be able to buy perfectly serviceable private label, rightsized and optimized models without worrying about falling behind in 10 minutes. Foundation models are reaching the point where for some use cases there won't be much improvement with upgrades. Do you really care if a new LLM is 0.06% better in math or reasoning relative to another you plan to use in the call center on the cheap?

Use cases for documentation, summarization, extracting information and spinning up content aren't going to see big improvements with model advances. In other words, thousands of enterprise use cases can leverage generic LLMs. The model giants are almost confirming that they’ve hit the wall since they’re competing on personality, snark and faux empathy in LLMs.

The enterprise value is going to be delivered by the orchestration, frameworks and architecture that mix and match LLMs based on specialties.
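To make the mix-and-match idea concrete, here is a minimal routing sketch. It assumes a hypothetical in-house catalog in which each model is tagged with the task types it handles well enough and an illustrative price; the model names, specialties and costs are all made up, and a real orchestration layer would also weigh latency, context length and data-residency rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    name: str                   # hypothetical model identifier
    specialties: frozenset      # task types the model is considered good enough for
    cost_per_1k_tokens: float   # illustrative price, not a real quote


# Hypothetical catalog; names, specialties and prices are invented for illustration.
CATALOG = [
    ModelSpec("small-generic", frozenset({"summarization", "extraction"}), 0.10),
    ModelSpec("mid-coder", frozenset({"code", "extraction"}), 0.50),
    ModelSpec("large-reasoner", frozenset({"math", "code", "summarization"}), 3.00),
]


def route(task_type, catalog=CATALOG, fallback="large-reasoner"):
    """Pick the cheapest model that lists task_type as a specialty."""
    candidates = [m for m in catalog if task_type in m.specialties]
    if not candidates:
        # No specialist for this task: fall back to the designated generalist.
        return next(m for m in catalog if m.name == fallback)
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)


if __name__ == "__main__":
    for task in ("summarization", "code", "math", "translation"):
        print(task, "->", route(task).name)
```

The design choice worth noticing is that the expensive model is only reached when nothing cheaper claims the task, which is the "good enough" argument expressed as a selection rule.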

Here's a look at some developments that point to the age of incremental for LLMs.

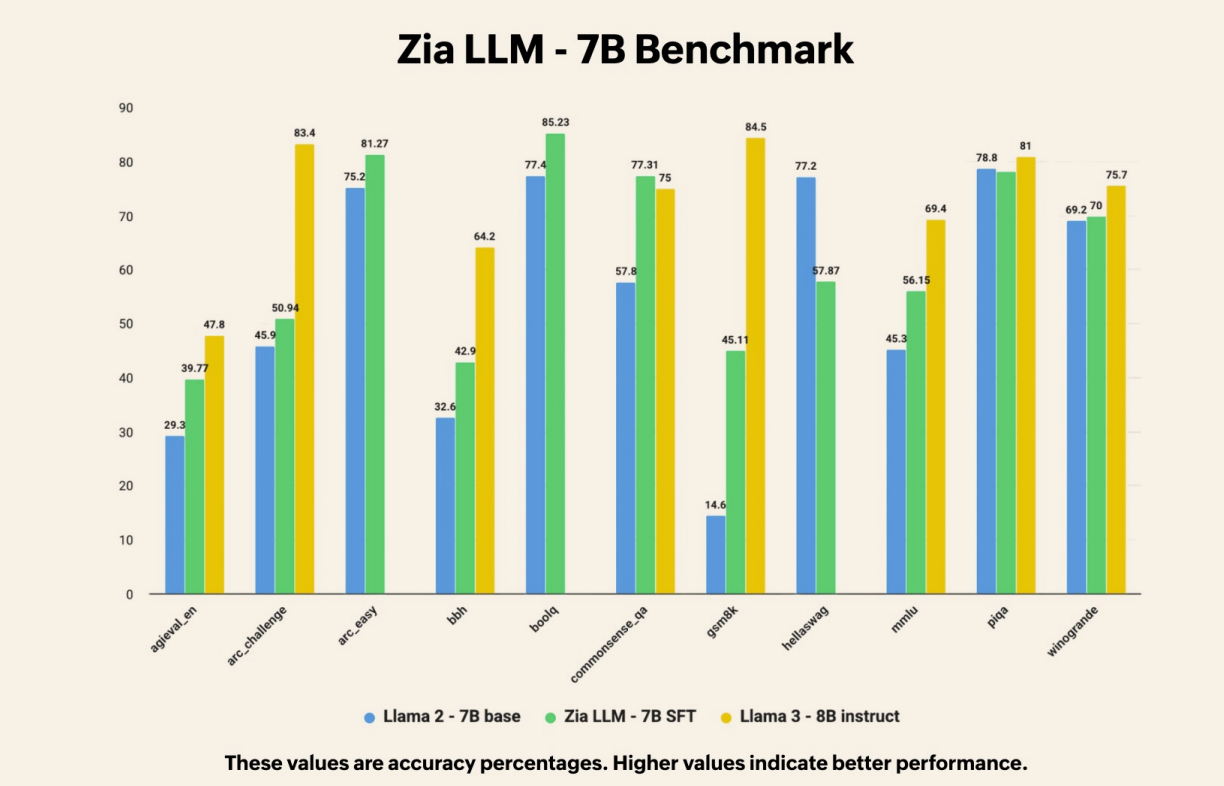

Zoho launches Zia LLM, which is essentially a disruptive private label LLM family. Zoho is a company that makes you really question your SaaS bill. And now Zoho is infusing its platform with its Zia LLM, AI agents and orchestration tools. Zoho said by controlling its own LLM layer it can optimize use cases, control costs and pass along savings to customers.

Raju Vegesna, Chief Evangelist at Zoho, said the company isn't initially charging for its LLM or agents until it has a better view of usage and operational costs. "If there [is] a big operational resource needed for intensive tasks we may price it, but for now we don't know what it looks like so we're not charging for anything," he said.

Just like consumers are going to private label brands to better control costs, enterprises are going to do the same. The path of least resistance for Zoho customers will be to leverage Zia LLMs.

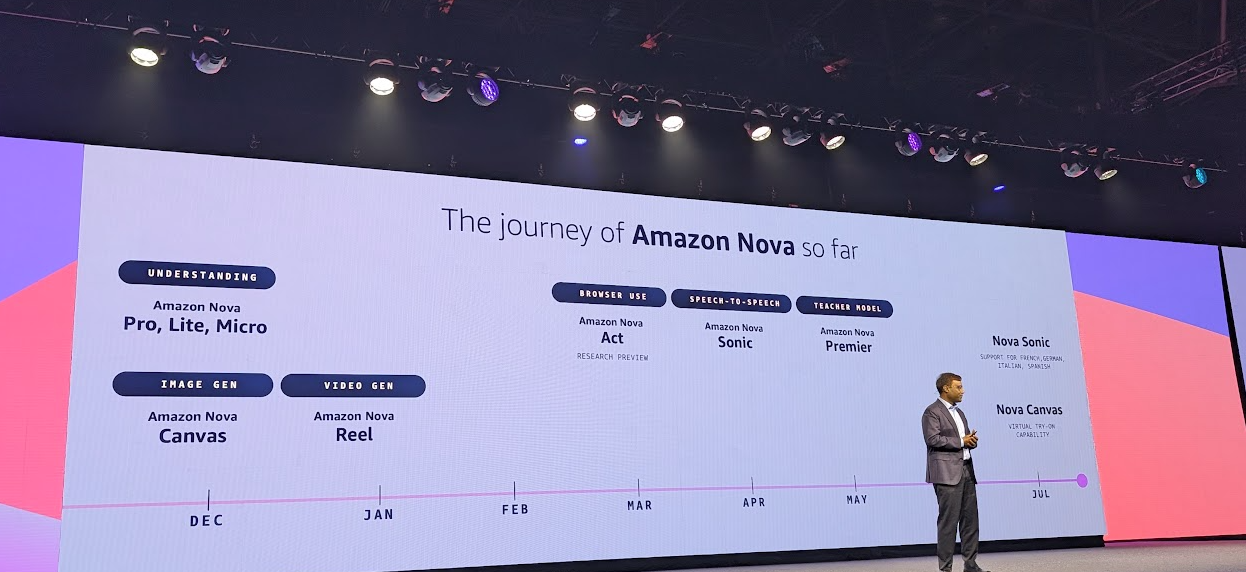

Amazon Web Services launches customization tools for its Nova models. Nova is a family of AWS in-house LLMs. I overheard one analyst nearly taunt AWS for having models that don't make headlines. That snark misses the point if Nova offers good enough performance, commoditizes that LLM layer and controls costs.

The Nova news was part of AWS Summit New York that focused on fundamentals, architecture and meeting enterprise customers where they are. As most of us know, the enterprise adoption curve is significantly slower than the vendor hype cycle.

- AWS' practical AI agent pitch to CxOs: Fundamentals, ROI matter

- AWS launches Bedrock Agent Core, custom Nova models

- Intuit starts to scale AI agents via AWS

- AWS launches Kiro, an IDE powered by AI agents

AWS said Nova has 10,000 customers and the cloud provider plans to land more with optimization recipes, model distillation and customization tools to balance price and performance. Customized Nova models will also get on-demand pricing for inference.

With those two private label moves out of the way, there are developments out of China and Japan worth noting.

Moonshot AI, a Chinese AI startup backed by Alibaba, launched Kimi K2, a mixture-of-experts model that features 1 trillion total parameters and 32 billion activated parameters. Moonshot AI is offering a foundation model for researchers and developers and a tuned version optimized for chat and AI agents.

Kimi K2 is outperforming Anthropic and OpenAI models in some benchmarks and beating DeepSeek-V3. The real kicker is that Moonshot is delivering models that cost a fraction of what US proprietary models do for training and inference.
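The 1 trillion total versus 32 billion activated split is what makes a mixture-of-experts model cheaper to run than its headline size suggests: only about 3.2% of the parameters do work for any given token. The sketch below shows that arithmetic plus a toy top-k gate. The gate is a generic illustration of the technique, not Moonshot's implementation; the eight experts, the scores and `k=2` are assumptions picked for readability.

```python
import math

TOTAL_PARAMS = 1_000_000_000_000   # 1 trillion, as reported for Kimi K2
ACTIVE_PARAMS = 32_000_000_000     # 32 billion activated per token, as reported

# Share of the model that is exercised per token.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS


def top_k_route(gate_scores, k):
    """Return {expert_index: weight} for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    top = ranked[:k]
    # Softmax over only the selected experts so their weights sum to 1.
    exps = [math.exp(gate_scores[i]) for i in top]
    total = sum(exps)
    return {i: e / total for i, e in zip(top, exps)}


# Toy example: 8 experts, 2 active per token (illustrative numbers only).
weights = top_k_route([0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.3, 0.9], k=2)

if __name__ == "__main__":
    print(f"active fraction: {active_fraction:.1%}")
    print("selected experts and weights:", weights)
```

In a real model the activated share also includes layers every token passes through, such as attention, so the parameter ratio does not map one-to-one onto the ratio of experts selected.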

Research from Japanese AI lab Sakana AI indicates that enterprises may want to start thinking of LLMs as ensemble casts that can combine knowledge and reasoning to complete tasks.

Sakana AI in a research paper outlined a method called Multi-LLM AB-MCTS (Adaptive Branching Monte Carlo Tree Search) that uses a collection of LLMs to cooperate, perform trial-and-error and leverage strengths to solve complex problems.
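The core intuition of letting several models try, scoring the attempts and shifting effort toward whichever model is working can be sketched with a simple bandit loop. This is not a reproduction of Sakana AI's method: it leaves out the tree search and the adaptive branching entirely and only shows one standard way, Thompson sampling, to allocate attempts across models. The stub models and their success rates are invented stand-ins for "call an LLM and score the answer."

```python
import random


def thompson_pick(stats, rng):
    """Sample a plausible success rate per model from Beta(wins+1, losses+1); pick the highest."""
    draws = {name: rng.betavariate(w + 1, l + 1) for name, (w, l) in stats.items()}
    return max(draws, key=draws.get)


def run_search(models, rounds, seed=0):
    """models maps a name to a callable(rng) -> bool that stands in for one scored attempt."""
    rng = random.Random(seed)
    stats = {name: (0, 0) for name in models}   # (wins, losses) observed so far
    pulls = {name: 0 for name in models}        # how often each model was tried
    for _ in range(rounds):
        name = thompson_pick(stats, rng)
        success = models[name](rng)
        w, l = stats[name]
        stats[name] = (w + 1, l) if success else (w, l + 1)
        pulls[name] += 1
    return pulls


# Hypothetical stub "models" with made-up success probabilities on one task.
STUBS = {
    "model-a": lambda rng: rng.random() < 0.2,
    "model-b": lambda rng: rng.random() < 0.5,
    "model-c": lambda rng: rng.random() < 0.9,
}

pulls = run_search(STUBS, rounds=500)

if __name__ == "__main__":
    print(pulls)
```

Early rounds spread attempts across all three stubs; as evidence accumulates, most attempts go to the model that keeps succeeding, which is the trial-and-error behavior the paragraph describes at a much smaller scale.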

Add it up, and the new age of incremental for LLMs is why I’m wary of the gap between AI euphoria and enterprise value. It’s not a slam dunk that buying the latest Nvidia GPUs, paying $100 million to AI researchers to create super teams and building massive data centers are going to yield the breakthroughs being pitched.

It appears that LLM giants know where the game is headed as they build ecosystems and complementary applications around their foundation models. For instance, Anthropic is best known for its Claude LLM, but its enterprise software ambitions are clear as the company builds out its go-to-market team. OpenAI is focusing on ChatGPT advances for headlines, but the big picture is more about AI agents.